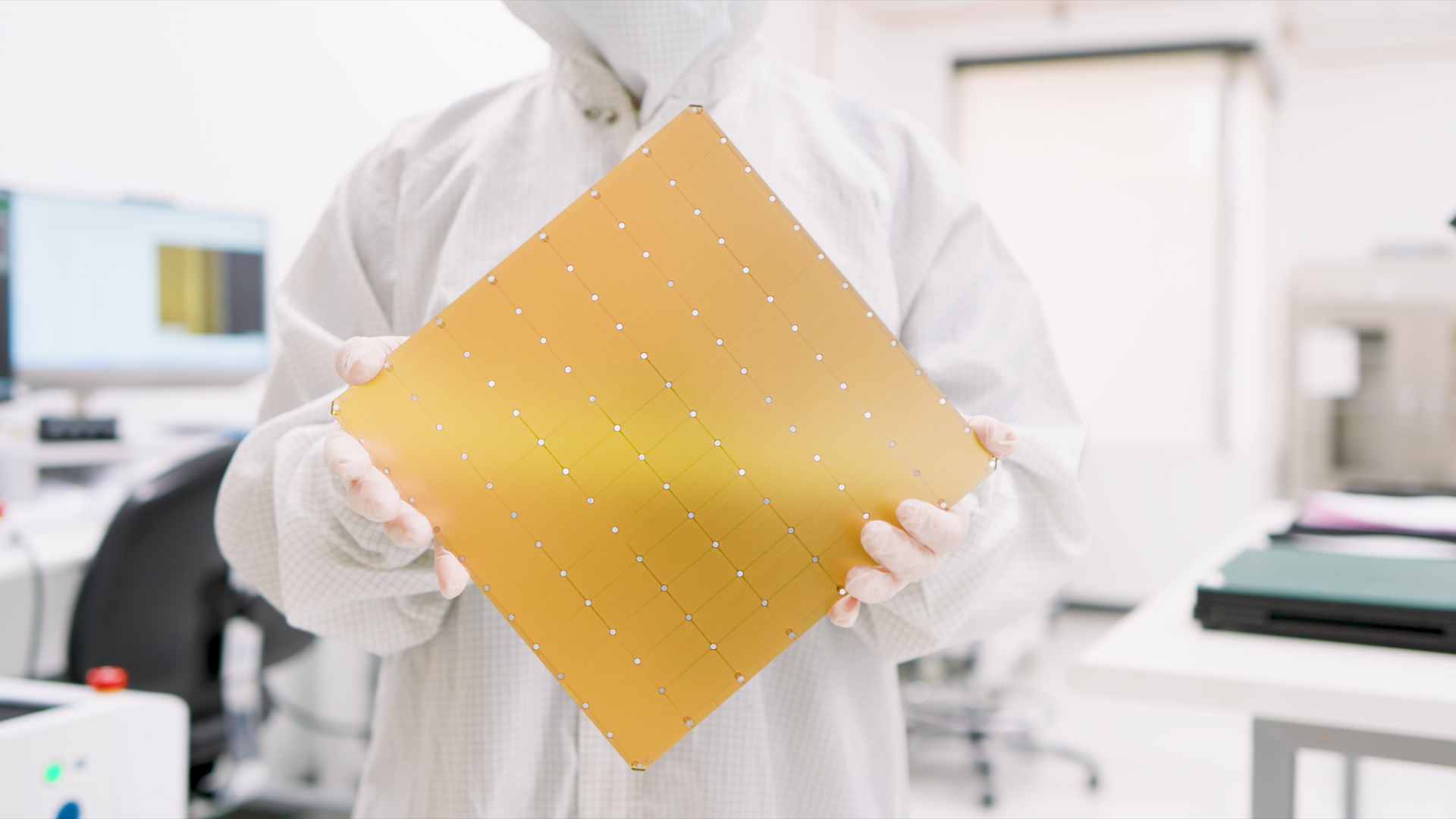

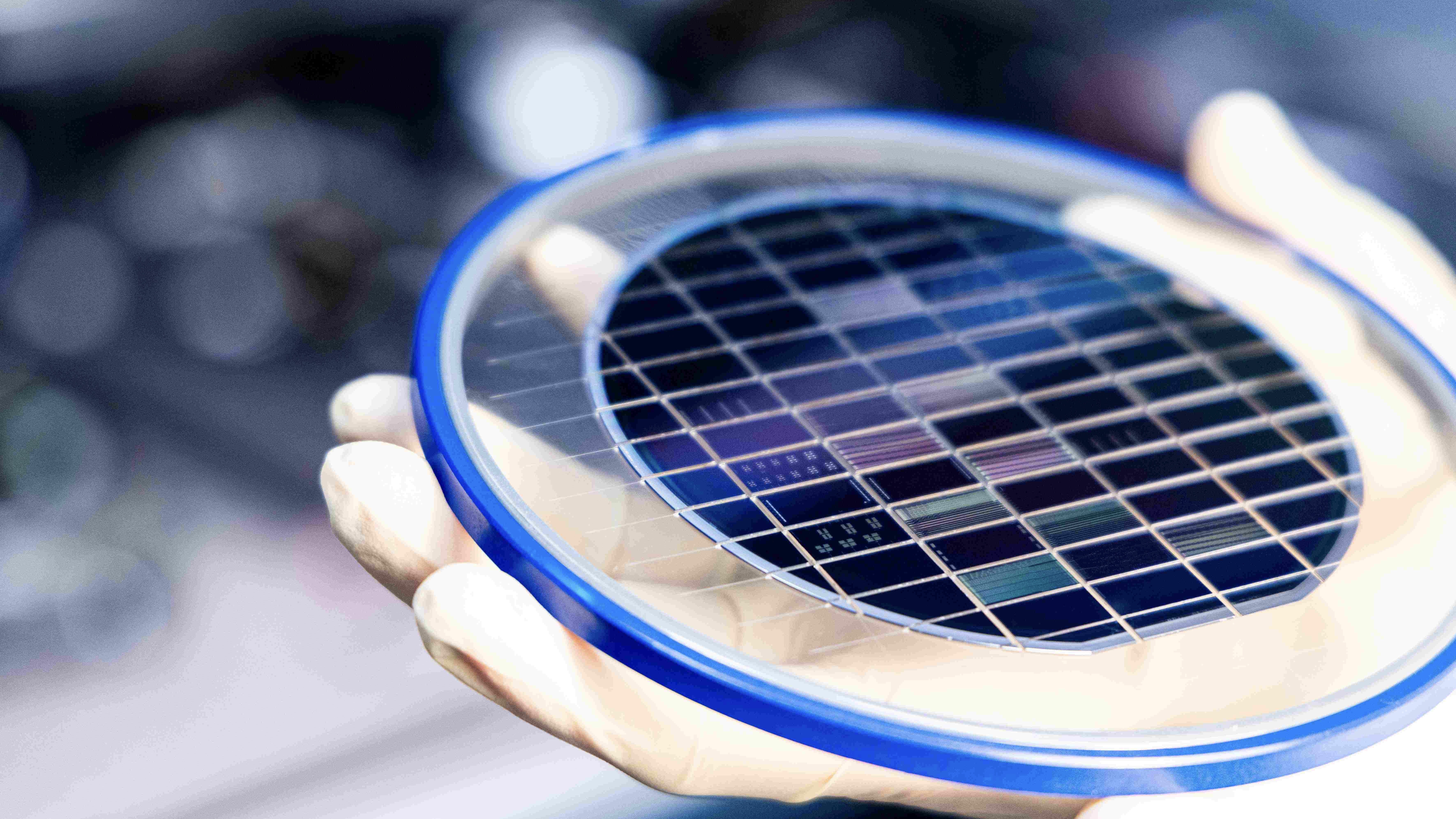

Scientists have built the world’s largest computer chip, a behemoth packed with 4 trillion transistors. The massive chip will one day be used to run a monstrously powerful artificial intelligence (AI) supercomputer, its makers say.

The new Wafer Scale Engine 3 (WSE-3) is the third generation of the supercomputing company Cerebras' platform designed to power AI systems, such as OpenAI’s GPT-4 and Anthropic’s Claude 3 Opus.

World’s largest chip will power massive AI supercomputer.

The chip, which includes 900,000 AI cores, is composed of a silicon wafer measuring 8.5 by 8.5 inches (21.5 by 21.5 centimeters), just like its 2021 predecessor, the WSE-2.

Related: New DNA-infused computer chip can perform calculations and make future AI models far more efficient

The new chip uses the same amount of power as its predecessor but is twice as powerful, company representatives said in a press release. The previous chip, by contrast, included 2.6 trillion transistors and 850,000 AI cores, meaning the company has roughly kept to Moore’s Law, which states that the number of transistors in a computer chip roughly doubles every two years.

In comparison, one of the most powerful chips currently used to train AI models is the Nvidia H200 graphics processing unit (GPU). Yet Nvidia’s monster GPU has a paltry 80 billion transistors, about 50 times fewer than Cerebras' chip.
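For readers who want to check the comparison, the quoted transistor and core counts can be turned into ratios with a few lines of Python. This is only a back-of-the-envelope sketch: the figures are the ones quoted in this article, and the constant and function names are illustrative assumptions rather than anything published by Cerebras or Nvidia.

```python
# Figures as quoted in the article; all names here are illustrative.
WSE3_TRANSISTORS = 4_000_000_000_000   # 4 trillion (WSE-3)
WSE2_TRANSISTORS = 2_600_000_000_000   # 2.6 trillion (WSE-2)
H200_TRANSISTORS = 80_000_000_000      # 80 billion (Nvidia H200)
WSE3_CORES = 900_000
WSE2_CORES = 850_000


def ratio(larger: int, smaller: int) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


wse3_vs_h200 = ratio(WSE3_TRANSISTORS, H200_TRANSISTORS)
wse3_vs_wse2 = ratio(WSE3_TRANSISTORS, WSE2_TRANSISTORS)
core_growth = ratio(WSE3_CORES, WSE2_CORES)

print(f"WSE-3 vs H200 transistors:  {wse3_vs_h200:.1f}x")
print(f"WSE-3 vs WSE-2 transistors: {wse3_vs_wse2:.2f}x")
print(f"WSE-3 vs WSE-2 AI cores:    {core_growth:.2f}x")
```

On these numbers, the transistor count grew by roughly half between the two Cerebras generations, while the core count grew by about 6%.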

The WSE-3 chip will one day be used to power the Condor Galaxy 3 supercomputer, which will be based in Dallas, Texas, company representatives said in a separate statement released March 13.

The Condor Galaxy 3 supercomputer, which is under construction, will be made up of 64 Cerebras CS-3 AI system "building blocks" that are powered by the WSE-3 chip. When stitched together and activated, the entire system will produce 8 exaFLOPs of computing power.

Then, when combined with the Condor Galaxy 1 and Condor Galaxy 2 systems, the entire network will reach a total of 16 exaFLOPs.

(Floating-point operations per second (FLOPs) is a measure of the numerical computing performance of a system, where 1 exaFLOP is one quintillion (10^18) FLOPs.)
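To make the scale concrete, the exaFLOP figures above can be converted to raw operations per second. The short Python sketch below does this under one loud assumption: that each of the 64 CS-3 systems contributes equally to the 8 exaFLOP total, which the article does not state. All variable names are illustrative.

```python
EXA = 10**18   # 1 exaFLOP = 10^18 floating-point operations per second
PETA = 10**15  # 1 petaFLOP = 10^15 floating-point operations per second


def exaflops_to_flops(exaflops: int) -> int:
    """Convert a whole number of exaFLOPs to operations per second."""
    return exaflops * EXA


# Figures as quoted in the article.
condor_galaxy_3_flops = exaflops_to_flops(8)
combined_network_flops = exaflops_to_flops(16)
cs3_system_count = 64

# Implied combined contribution of Condor Galaxy 1 and 2.
earlier_systems_flops = combined_network_flops - condor_galaxy_3_flops

# ASSUMPTION: the 64 CS-3 systems share the 8 exaFLOPs equally.
per_cs3_flops = condor_galaxy_3_flops // cs3_system_count

print(f"Condor Galaxy 3: {condor_galaxy_3_flops:.1e} FLOPs")
print(f"Condor Galaxy 1 + 2 (implied): {earlier_systems_flops // EXA} exaFLOPs")
print(f"Per CS-3 system (assumed equal share): {per_cs3_flops // PETA} petaFLOPs")
```

Under that equal-share assumption, each CS-3 building block would account for 125 petaFLOPs.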

- World’s 1st graphene semiconductor could power future quantum computers

- Scientists create light-based semiconductor chip that will pave the way for 6G

- Scientists just built a massive 1,000-qubit quantum chip, but why are they more excited about one 10 times smaller?

By contrast, the most powerful supercomputer in the world right now is Oak Ridge National Laboratory’s Frontier supercomputer, which generates roughly 1 exaFLOP of power.

The Condor Galaxy 3 supercomputer will be used to train future AI systems that are up to 10 times bigger than GPT-4 or Google’s Gemini, company representatives said. GPT-4, for instance, uses around 1.76 trillion variables (known as parameters) to train the system, according to a rumored leak; the Condor Galaxy 3 could handle AI systems with around 24 trillion parameters.