
Less than two weeks ago, a little-known Chinese company released its latest artificial intelligence (AI) model and sent shockwaves around the world.

DeepSeek claimed in a technical paper uploaded to GitHub that its open-weight R1 model achieved comparable or better results than AI models made by some of the leading Silicon Valley giants, namely OpenAI’s ChatGPT, Meta’s Llama and Anthropic’s Claude. And most strikingly, the model achieved these results while being trained and run at a fraction of the cost.

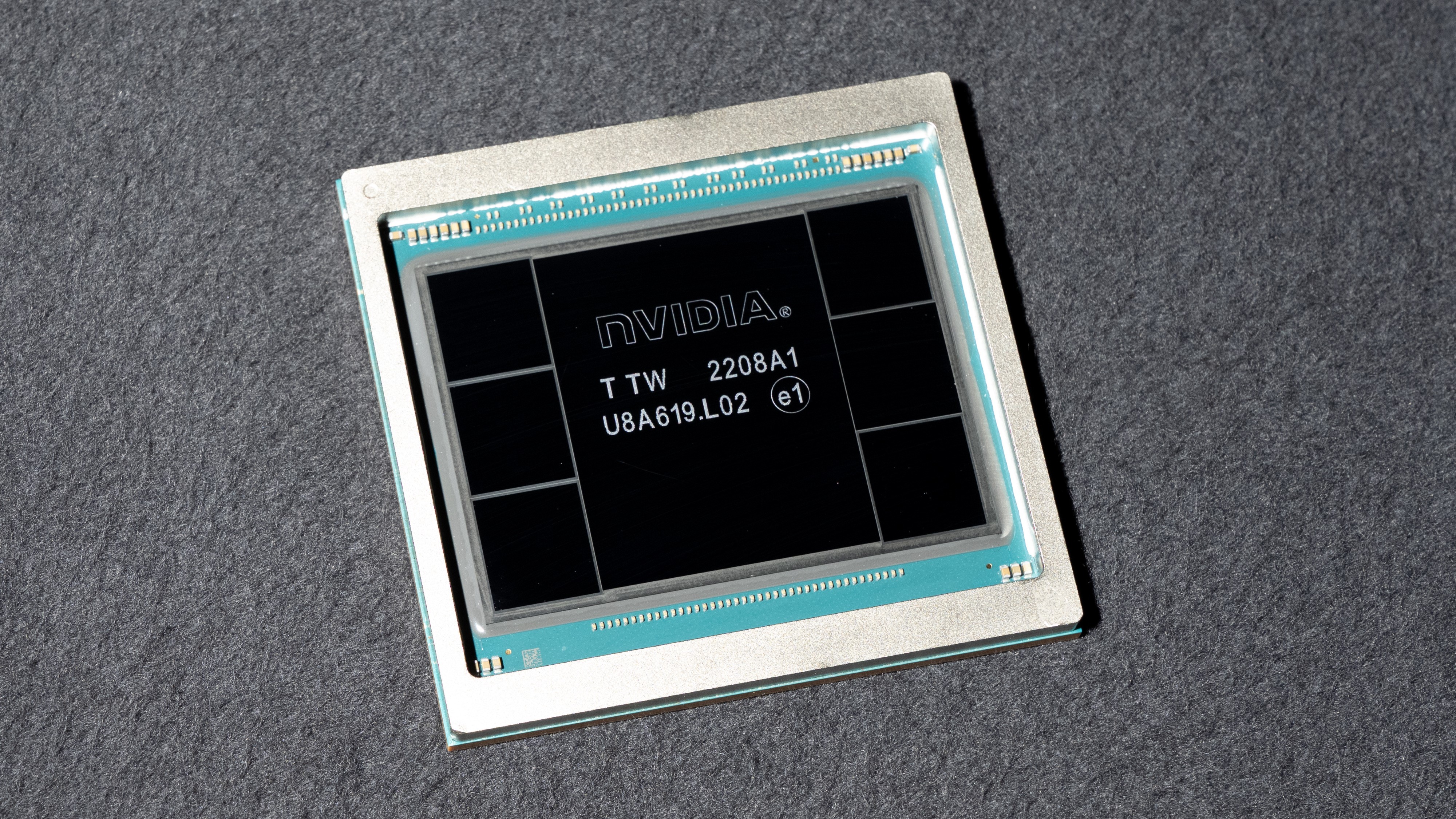

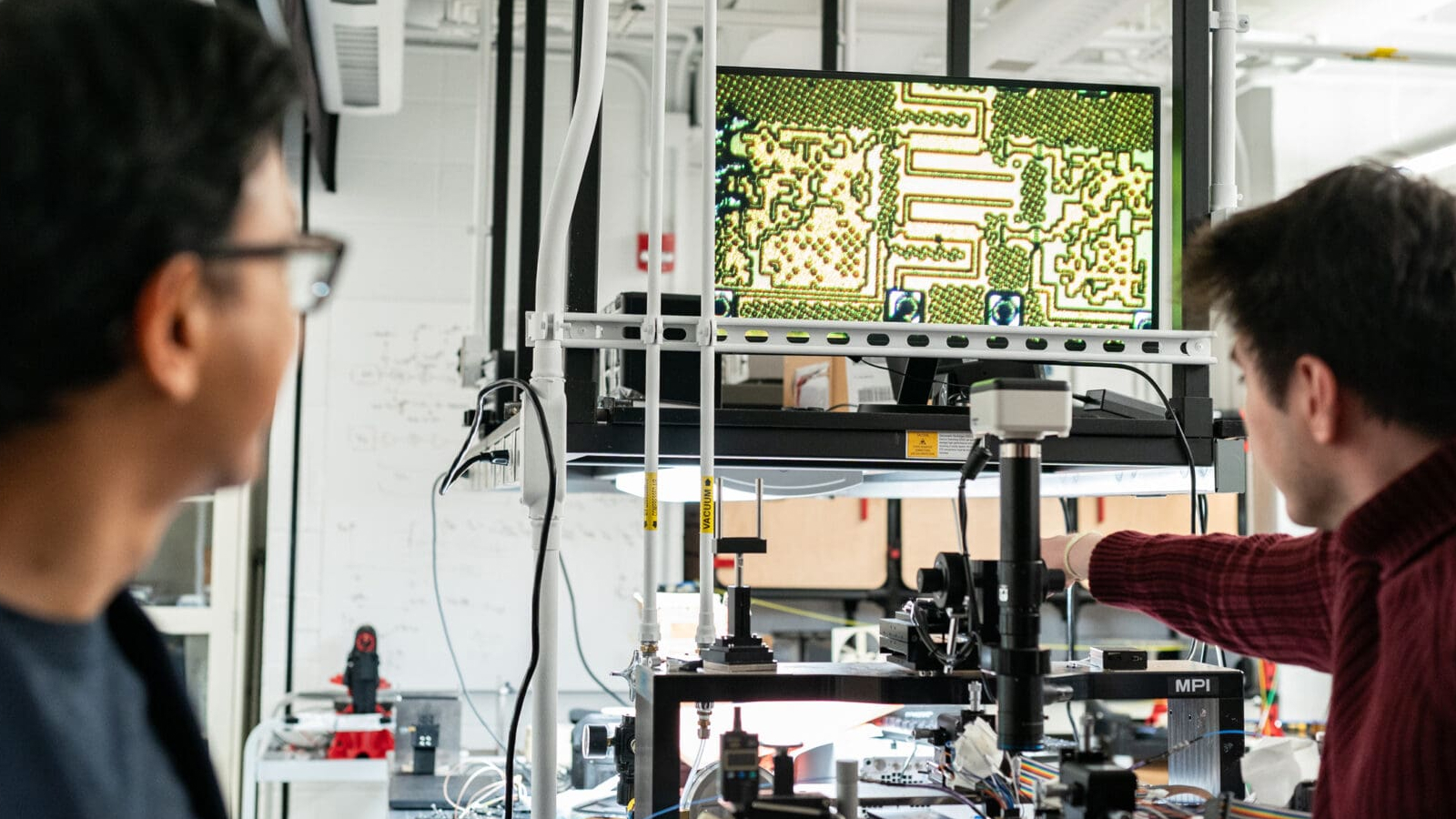

The Nvidia H100 GPU chip, which is banned for sale in China due to U.S. export restrictions.

The market response to the news on Monday was sharp and brutal: As DeepSeek rose to become the most downloaded free app in Apple’s App Store, $1 trillion was wiped from the valuations of leading U.S. tech companies.

And Nvidia, a company that makes the high-end H100 graphics chips presumed essential for AI training, lost $589 billion in valuation in the biggest one-day market loss in U.S. history. DeepSeek, after all, said it trained its AI model without them, though it did use less-powerful Nvidia chips. U.S. tech companies responded with panic and ire, with OpenAI representatives even suggesting that DeepSeek plagiarized parts of its models.


AI experts say that DeepSeek’s emergence has upended a key dogma underpinning the industry’s approach to growth, showing that bigger isn’t always better.

"The fact that DeepSeek could be built for less money, less computation and less time and can be run locally on less expensive machines, argues that as everyone was racing towards bigger and bigger, we missed the opportunity to build smarter and smaller," Kristian Hammond, a professor of computer science at Northwestern University, told Live Science in an email.

But what makes DeepSeek’s V3 and R1 models so disruptive? The key, scientists say, is efficiency.

What makes DeepSeek’s models tick?

"In some ways, DeepSeek’s advances are more evolutionary than revolutionary," Ambuj Tewari, a professor of statistics and computer science at the University of Michigan, told Live Science. "They are still operating under the dominant paradigm of very large models (100s of billions of parameters) on very large datasets (trillions of tokens) with very large budgets."

If we take DeepSeek’s claims at face value, Tewari said, the main innovation in the company’s approach is how it wields its large and powerful models to run just as well as other systems while using fewer resources.

Key to this is a "mixture-of-experts" system that splits DeepSeek’s models into submodels, each specializing in a specific task or data type. This is accompanied by a load-balancing system that, instead of applying an overall penalty to slow an overburdened system like other models do, dynamically shifts tasks from overworked to underworked submodels.

"[This] means that even though the V3 model has 671 billion parameters, only 37 billion are actually activated for any given token," Tewari said. A token refers to a processing unit in a large language model (LLM), equivalent to a chunk of text.

Furthering this load balancing is a technique known as "inference-time compute scaling," a dial within DeepSeek’s models that ramps allocated computing up or down to match the complexity of an assigned task.
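To make the mixture-of-experts routing and load balancing described above more concrete, here is a minimal toy sketch in Python. It is not DeepSeek’s code: the function names, the eight experts, the two experts chosen per token, the random scores and the step size are all illustrative assumptions. Each token goes to the highest-scoring experts, and after every round a small per-expert bias is nudged so that overworked experts become slightly less likely to be picked and underworked ones slightly more likely.

```python
import random

def route(scores, bias, k):
    """Return the k experts with the highest score + bias for one token."""
    order = sorted(range(len(scores)), key=lambda e: scores[e] + bias[e], reverse=True)
    return order[:k]

def rebalance(bias, load, step=0.01):
    """Lower the bias of experts above the mean load, raise it for those below."""
    mean = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean:
            new_bias.append(b - step)
        elif n < mean:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias

def simulate(num_experts=8, k=2, tokens=2000, rounds=50, seed=0):
    """Route random tokens for several rounds and record each round's expert load."""
    rng = random.Random(seed)
    # Hypothetical skew: lower-numbered experts start out more popular.
    skew = [0.3 - 0.05 * e for e in range(num_experts)]
    bias = [0.0] * num_experts
    history = []
    for _ in range(rounds):
        load = [0] * num_experts
        for _ in range(tokens):
            scores = [rng.random() + skew[e] for e in range(num_experts)]
            for e in route(scores, bias, k):
                load[e] += 1
        history.append(load)
        bias = rebalance(bias, load)
    return history

history = simulate()
first, last = history[0], history[-1]
print("load in first round:", first)
print("load in last round: ", last)

# Share of V3's parameters active per token, from the figures quoted above.
active_fraction = 37 / 671
print(f"active share per token: {active_fraction:.1%}")
```

In this toy setup the load starts out lopsided and evens out as the biases adjust, while every token still touches only two of the eight experts. The last line applies the same idea to the figures Tewari cites: 37 billion of 671 billion parameters is roughly 5.5% of the model per token.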

This efficiency extends to the training of DeepSeek’s models, which experts cite as an unintended consequence of U.S. export restrictions. China’s access to Nvidia’s state-of-the-art H100 chips is limited, so DeepSeek claims it instead built its models using H800 chips, which have a reduced chip-to-chip data transfer rate. Nvidia designed this "weaker" chip in 2023 specifically to circumvent the export controls.

A more efficient type of large language model

The need to use these less-powerful chips forced DeepSeek to make another significant breakthrough: its mixed-precision framework. Instead of representing all of its model’s weights (the numbers that set the strength of the connections between an AI model’s artificial neurons) using 32-bit floating point numbers (FP32), it trained parts of its model with less-precise 8-bit numbers (FP8), switching to 32 bits only for harder calculations where accuracy matters.

"This allows for faster training with fewer computational resources," Thomas Cao, a professor of technology policy at Tufts University, told Live Science. "DeepSeek has also refined nearly every step of its training pipeline (data loading, parallelization strategies, and memory optimization) so that it achieves very high efficiency in practice."

Similarly, while it is common to train AI models using human-provided labels to score the accuracy of answers and reasoning, R1’s reasoning is unsupervised. It uses only the correctness of final answers in tasks like math and coding for its reward signal, which frees up training resources to be used elsewhere.
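A reward signal that looks only at the final answer can be sketched in a few lines of Python. This is an illustration under stated assumptions, not DeepSeek’s implementation: the "Answer:" marker, the function names and the sample responses are all hypothetical. The point is that the reasoning text is never graded, only whether the final answer matches a known correct one.

```python
def final_answer(response):
    """Return the text after the last 'Answer:' marker, or None if it is missing."""
    marker = "Answer:"  # hypothetical output format, assumed for this sketch
    idx = response.rfind(marker)
    if idx == -1:
        return None
    return response[idx + len(marker):].strip()

def outcome_reward(response, reference):
    """Give 1.0 if the final answer matches the reference, else 0.0.

    The reasoning that precedes the answer is never scored.
    """
    return 1.0 if final_answer(response) == reference.strip() else 0.0

samples = [
    "17 + 25: add the tens, then the ones. Answer: 42",
    "17 + 25 is roughly 40. Answer: 40",
    "I am not sure how to do this.",
]
rewards = [outcome_reward(s, "42") for s in samples]
print(rewards)  # [1.0, 0.0, 0.0]
```

Because checking an answer like this is automatic, no human needs to label each reasoning step, which is where the saving in training resources would come from.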

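Returning to the mixed-precision approach described earlier in this section, a toy Python example shows why some calculations still need higher precision. Everything here is an assumption made for illustration: the `quantize` helper only imitates a format with 3 stored mantissa bits (as in E4M3, one common FP8 layout) and ignores the limited exponent range of real FP8, and Python’s 64-bit floats stand in for FP32. Whether DeepSeek’s higher-precision steps match this particular case is not stated in the article.

```python
import math

def quantize(x, mantissa_bits):
    """Round x to the nearest value with the given number of stored mantissa bits.

    Toy model only: the exponent range is unlimited, unlike real FP8.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)                  # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)      # +1 for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)

LOW_BITS = 3  # assumption: 3 mantissa bits, as in the E4M3 layout

terms = [quantize(0.0625, LOW_BITS)] * 64   # 64 small low-precision values

# Everything in low precision: the running total is rounded after every add.
low_total = 0.0
for t in terms:
    low_total = quantize(low_total + t, LOW_BITS)

# Mixed precision: low-precision inputs, higher-precision running total
# (Python floats are 64-bit; they stand in for FP32 here).
mixed_total = 0.0
for t in terms:
    mixed_total += t

print(low_total, mixed_total)  # 1.0 4.0
```

The all-low-precision total stalls at 1.0, because once the sum reaches that size each further 0.0625 is rounded away, while the mixed version reaches the correct 4.0. Storing numbers cheaply and adding them up carefully is the general trade-off that mixed-precision training relies on.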

All of this adds up to a startlingly efficient pair of models. While the training costs of DeepSeek’s competitors run into the tens of millions to hundreds of millions of dollars and often take several months, DeepSeek representatives say the company trained V3 in two months for just $5.58 million. DeepSeek V3’s running costs are similarly low: 21 times cheaper to run than Anthropic’s Claude 3.5 Sonnet.

Cao is careful to note that DeepSeek’s research and development, which includes its hardware and a huge number of trial-and-error experiments, means it almost certainly spent much more than this $5.58 million figure. Nevertheless, it’s still a significant enough drop in cost to have caught its competitors flat-footed.

Overall, AI experts say that DeepSeek’s popularity is likely a net positive for the industry, bringing exorbitant resource costs down and lowering the barrier to entry for researchers and firms. It could also create space for more chipmakers than Nvidia to enter the race. Yet it also comes with its own dangers.

"As cheaper, more efficient methods for developing cutting-edge AI models become publicly available, they can allow more researchers worldwide to pursue cutting-edge LLM development, potentially speeding up scientific progress and application creation," Cao said. "At the same time, this lower barrier to entry raises new regulatory challenges, beyond just the U.S.-China competition, about the misuse or potentially destabilizing effects of advanced AI by state and non-state actors."
