Image Credits: Yuichiro Chino / Getty Images

Image Credits: DrAfter123 / Getty Images

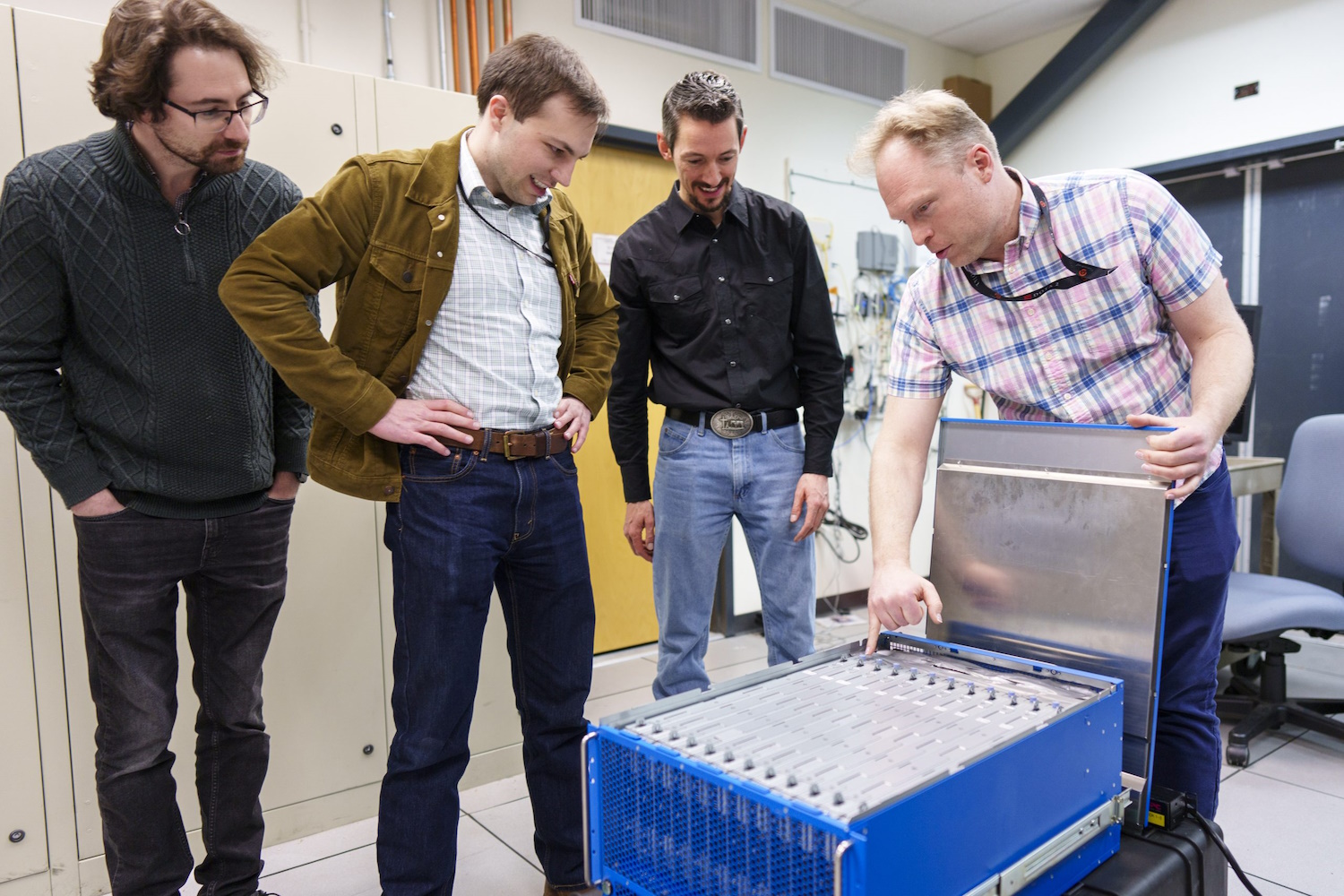

Researchers look into the new neuromorphic computer. Image Credits: Craig Fritz / Sandia National Labs

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here's a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn't cover on their own.

This week, Meta released the latest in its Llama series of generative AI models: Llama 3 8B and Llama 3 70B. Capable of analyzing and writing text, the models are "open sourced," Meta said, and intended to be a "foundational piece" of systems that developers design with their unique goals in mind.

"We believe these are the best open source models of their class, period," Meta wrote in a blog post. "We are embracing the open source ethos of releasing early and often."

There's only one problem: The Llama 3 models aren't really open source, at least not in the strictest definition.

Open source implies that developers can use the models how they choose, unfettered. But in the case of Llama 3, as with Llama 2, Meta has imposed certain licensing restrictions. For example, Llama models can't be used to train other models. And app developers with over 700 million monthly users must request a special license from Meta.

Debates over the definition of open source aren't new. But as companies in the AI space play fast and loose with the term, it's injecting fuel into long-running philosophical arguments.

Last August, a study co-authored by researchers at Carnegie Mellon, the AI Now Institute and the Signal Foundation found that many AI models branded as "open source" come with big catches, and not just Llama. The data required to train the models is kept secret. The compute power needed to run them is beyond the reach of many developers. And the labor to fine-tune them is prohibitively expensive.

So if these models aren't really open source, what are they, exactly? That's a good question; defining open source with respect to AI isn't an easy task.

One pertinent unresolved question is whether copyright, the foundational IP mechanism open source licensing is based on, can be applied to the various components and pieces of an AI project, in particular a model's inner scaffolding (e.g., embeddings). Then there's the need to overcome the mismatch between the perception of open source and how AI actually functions: Open source was devised in part to ensure that developers could study and modify code without restrictions. With AI, though, which ingredients you need to do the studying and modifying is open to interpretation.

Wading through all the uncertainty, the Carnegie Mellon study does make clear the harm inherent in tech giants like Meta co-opting the phrase "open source."

Often, "open source" AI projects like Llama end up kicking off news cycles (free marketing) and providing technical and strategic benefits to the projects' maintainers. The open source community rarely sees these same benefits, and when it does, they're marginal compared to the maintainers'.

Instead of democratizing AI, "open source" AI projects, especially those from Big Tech companies, tend to entrench and expand centralized power, say the study's co-authors. That's good to keep in mind the next time a major "open source" model release comes around.

Here are some other AI stories of note from the past few days:

More machine learnings

Can a chatbot change your mind? Swiss researchers found that not only can they change your mind, but if they are pre-armed with some personal information about you, they can actually be more persuasive in a debate than a human with that same info.

"This is Cambridge Analytica on steroids," said project lead Robert West from EPFL. The researchers suspect the model (GPT-4 in this case) drew from its vast stores of arguments and facts online to present a more compelling and confident case. But the outcome kind of speaks for itself. Don't underestimate the power of LLMs in matters of opinion, West warned: "In the context of the upcoming US elections, people are concerned because that's where this kind of technology is always first battle tested. One thing we know for sure is that people will be using the power of large language models to try to swing the election."

Why are these models so good at language anyway? That's one area that has a long history of research, going back to ELIZA. If you're curious about one of the people who's been there for a lot of it (and performed no small amount of it himself), check out this profile on Stanford's Christopher Manning. He was just awarded the John von Neumann Medal. Congrats!

In a provocatively titled interview, another long-term AI researcher (who has graced the TechCrunch stage as well), Stuart Russell, and postdoc Michael Cohen speculate on "How to keep AI from killing us all." Probably a good thing to figure out sooner rather than later! It's not a superficial discussion, though. These are smart people talking about how we can actually understand the motivations (if that's the right word) of AI models and how regulations ought to be built around them.

The interview is actually regarding a paper in Science published earlier this month, in which they propose that advanced AIs capable of acting strategically to achieve their goals (what they call "long-term planning agents") may be impossible to test. Essentially, if a model learns to "understand" the testing it must pass in order to succeed, it may very well learn ways to creatively negate or circumvent that testing. We've seen it at a small scale, so why not a large one?

Russell proposes restricting the hardware needed to make such agents … but of course, Los Alamos National Laboratory (LANL) and Sandia National Labs just got their deliveries. LANL just had the ribbon-cutting ceremony for Venado, a new supercomputer intended for AI research, composed of 2,560 Grace Hopper Nvidia chips.

And Sandia just received "an extraordinary brain-based computing system called Hala Point," with 1.15 billion artificial neurons, built by Intel and believed to be the largest such system in the world. Neuromorphic computing, as it's called, isn't intended to replace systems like Venado, but is meant to pursue new methods of computation that are more brain-like than the rather statistics-focused approach we see in modern models.

"With this billion-neuron system, we will have an opportunity to innovate at scale both new AI algorithms that may be more efficient and smarter than existing algorithms, and new brain-like approaches to existing computer algorithms such as optimization and modeling," said Sandia researcher Brad Aimone. Sounds nifty … just dandy!