Image Credits: Getty Images (Image has been modified)

Animation showing how more predictions create a more even distribution of weather predictions. Image Credits: Google

Image Credits: Fujitsu

Image Credits: Disney Research

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, I’d like to turn the spotlight on labeling and annotation startups, companies like Scale AI, which is reportedly in talks to raise new funds at a $13 billion valuation. Labeling and annotation platforms might not get the attention that flashy new generative AI models like OpenAI’s Sora do. But they’re essential. Without them, modern AI models arguably wouldn’t exist.

The data on which many models train has to be labeled. Why? Labels, or tags, help the models understand and interpret data during the training process. For example, labels to train an image recognition model might take the form of markings around objects (“bounding boxes”) or captions referring to each person, place or object depicted in an image.
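
To make that concrete, here’s a minimal sketch of what a single labeled image might look like as data. The class and field names are hypothetical, and the `x, y, width, height` box layout is an assumption (it loosely follows the COCO dataset convention); real labeling platforms each use their own schemas.

```python
from dataclasses import dataclass, field

@dataclass
class BoundingBox:
    label: str      # what the annotator says the object is
    x: float        # left edge, in pixels
    y: float        # top edge, in pixels
    width: float
    height: float

@dataclass
class LabeledImage:
    image_id: str
    image_width: int
    image_height: int
    caption: str = ""
    boxes: list[BoundingBox] = field(default_factory=list)

    def invalid_boxes(self):
        """Return boxes that spill outside the image or have no area,
        a basic sanity check before the labels are used for training."""
        bad = []
        for b in self.boxes:
            inside = (
                b.x >= 0 and b.y >= 0
                and b.x + b.width <= self.image_width
                and b.y + b.height <= self.image_height
            )
            if not inside or b.width <= 0 or b.height <= 0:
                bad.append(b)
        return bad

# Hypothetical annotation for one street photo.
example = LabeledImage(
    image_id="street_0001",
    image_width=640,
    image_height=480,
    caption="A cyclist waits at a crosswalk next to a parked car.",
    boxes=[
        BoundingBox("person", 210, 120, 80, 220),
        BoundingBox("bicycle", 190, 230, 130, 120),
        BoundingBox("car", 600, 260, 90, 110),  # extends past the right edge
    ],
)
print([b.label for b in example.invalid_boxes()])  # prints ['car']
```

Multiply that one record by thousands or millions of images and the scale of the annotation work becomes clearer.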

The accuracy and quality of labels significantly impact the performance, and the reliability, of the trained models. And annotation is a vast undertaking, requiring thousands to millions of labels for the larger and more sophisticated datasets in use.
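
One common way to put a number on label quality (offered as an illustration, not as something the platforms discussed here are known to use) is to have two annotators box the same object and measure how much their boxes overlap, using intersection over union (IoU). The `(x, y, width, height)` tuple format and the 0.5 review threshold are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, width, height) boxes, in [0, 1]."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if they don't overlap).
    ix = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = ix * iy
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0

def needs_review(box_a, box_b, threshold=0.5):
    """Flag an object for a third look when two annotators disagree too much."""
    return iou(box_a, box_b) < threshold

annotator_1 = (100, 100, 50, 50)
annotator_2 = (110, 105, 50, 50)
print(iou(annotator_1, annotator_2), needs_review(annotator_1, annotator_2))
```

Checks like this catch sloppy boxes, but they also add to the amount of labor the annotation pipeline demands.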

So you’d think data annotators would be treated well, paid living wages and given the same benefits that the engineers building the models themselves enjoy. But often, the opposite is true, a product of the brutal working conditions that many annotation and labeling startups foster.

Companies with billions in the bank, like OpenAI, have relied on annotators in third-world countries paid only a few dollars per hour. Some of these annotators are exposed to highly disturbing content, like graphic imagery, yet aren’t given time off (as they’re usually contractors) or access to mental health resources.

An excellent piece in NY Mag peels back the curtain on Scale AI in particular, which recruits annotators in places as far-flung as Nairobi, Kenya. Some of the tasks on Scale AI take labelers multiple eight-hour workdays (no breaks) and pay as little as $10. And these workers are beholden to the whims of the platform. Annotators sometimes go long stretches without receiving work, or they’re unceremoniously booted off Scale AI, as happened to contractors in Thailand, Vietnam, Poland and Pakistan recently.
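
To put those figures in perspective, here’s the back-of-the-envelope math. “Multiple” workdays is vague, so this sketch assumes two as a conservative lower bound; any more days would push the hourly rate even lower.

```python
def effective_hourly_rate(total_pay_usd, workdays, hours_per_day=8):
    """Pay per hour worked for a task that spans several full workdays."""
    return total_pay_usd / (workdays * hours_per_day)

# Assumption: "multiple" eight-hour workdays taken as 2; the $10 figure is from the NY Mag report.
print(effective_hourly_rate(10, workdays=2))  # prints 0.625, roughly 63 cents an hour
```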

Some annotation and labeling platforms claim to provide “fair-trade” work. They’ve made it a central part of their branding, in fact. But as MIT Tech Review’s Kate Kaye notes, there are no regulations, only weak industry standards for what ethical labeling work looks like, and companies’ own definitions vary widely.

So, what to do? Barring a massive technological breakthrough, the need to annotate and label data for AI training isn’t going away. We can hope that the platforms self-regulate, but the more realistic solution seems to be policymaking. That itself is a tricky prospect, but it’s the best shot we have, I’d argue, at changing things for the better. Or at least starting to.

Here are some other AI stories of note from the past few days:

More machine learnings

How’s the weather? AI is increasingly able to tell you this. I noted a few efforts in hourly, weekly, and century-scale forecasting a few months ago, but like all things AI, the field is moving fast. The teams behind MetNet-3 and GraphCast have published a paper describing a new system called SEEDS (Scalable Ensemble Envelope Diffusion Sampler).

SEEDS uses diffusion to generate “ensembles” of plausible weather outcomes for an area based on the input (radar readings or orbital imagery, perhaps) much faster than physics-based models. With bigger ensemble counts, they can cover more edge cases (like an event that only occurs in 1 out of 100 possible scenarios) and can be more confident about the more likely situations.
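
Why do bigger ensembles help with edge cases? Roughly: if each ensemble member is one plausible outcome, the probability of an event is estimated as the fraction of members in which it shows up, and a 1-in-100 event will often not appear at all in a small ensemble. The generator below is a hypothetical stand-in (a plain random draw with a built-in 1% “storm” case), not SEEDS or any diffusion model; the rainfall ranges and the 50 mm threshold are made up for illustration.

```python
import random

def sample_ensemble(n_members, seed=0):
    """Draw n_members hypothetical rainfall outcomes in millimeters.

    Stand-in for a generative model: about 1% of draws are an extreme
    storm scenario, the rest are ordinary days. This is NOT SEEDS.
    """
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_members):
        if rng.random() < 0.01:  # the rare 1-in-100 event
            outcomes.append(rng.uniform(80.0, 120.0))
        else:
            outcomes.append(rng.uniform(0.0, 20.0))
    return outcomes

def prob_exceeds(outcomes, threshold_mm):
    """Estimate P(rainfall > threshold) as the fraction of members above it."""
    return sum(1 for x in outcomes if x > threshold_mm) / len(outcomes)

# Small ensembles tend to report 0% (or a wildly inflated 10%) for the storm;
# large ones settle near the true 1%.
for n in (10, 100, 10_000):
    members = sample_ensemble(n, seed=42)
    print(n, prob_exceeds(members, 50.0))
```

The appeal of a fast generative sampler is that it makes the large-ensemble end of that loop cheap enough to run routinely.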

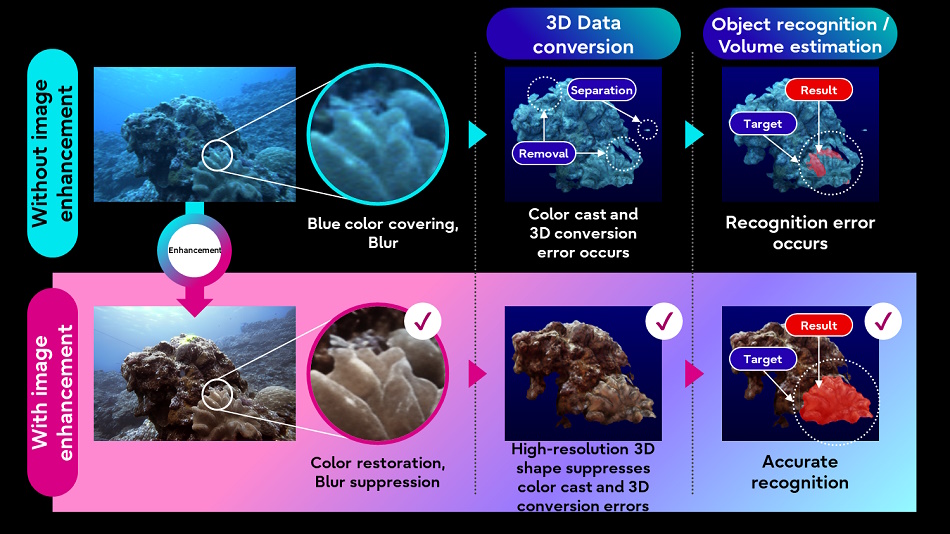

Fujitsu is also hoping to better understand the natural world by applying AI image handling techniques to underwater imagery and lidar data collected by underwater autonomous vehicles. Improving the quality of the imagery will let other, less sophisticated processes (like 3D conversion) work better on the target data.
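
For a flavor of why cleanup helps downstream processing: underwater photos tend to pick up a blue-green cast because red light is absorbed quickly in water. The sketch below applies gray-world white balancing, a simple classical (non-AI) correction, to a few RGB pixels. It illustrates image enhancement in general and is not Fujitsu’s technique; the pixel values are invented.

```python
def gray_world_balance(pixels):
    """Rescale each RGB channel so all channels share the same mean.

    pixels: list of (r, g, b) tuples with values in [0, 1].
    Gray-world assumes the scene averages out to neutral gray, so a
    channel with a low mean (red, underwater) is boosted and the others damped.
    """
    n = len(pixels)
    channel_means = [sum(p[c] for p in pixels) / n for c in range(3)]
    target = sum(channel_means) / 3
    gains = [target / m if m > 0 else 1.0 for m in channel_means]
    # Clip at 1.0 so boosted values stay in the valid range.
    return [tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in pixels]

# Hypothetical blue-green tinted pixels (low red, high green and blue).
tinted = [(0.1, 0.4, 0.5), (0.2, 0.5, 0.6), (0.15, 0.45, 0.55)]
balanced = gray_world_balance(tinted)
for before, after in zip(tinted, balanced):
    print(before, "->", tuple(round(v, 2) for v in after))
```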

The idea is to build a “digital twin” of waters that can help simulate and predict new developments. We’re a long way off from that, but you gotta start somewhere.

Over among the large language models (LLMs), researchers have found that, at least when recalling certain kinds of stored facts, they mimic intelligence with an even simpler-than-expected method: linear functions. Frankly, the math is beyond me (vector stuff in many dimensions), but this writeup at MIT makes it fairly clear that the recall mechanism of these models is pretty… basic.

“Even though these models are really complicated, nonlinear functions that are trained on lots of data and are very hard to understand, there are sometimes really simple mechanisms working inside them. This is one instance of that,” said co-lead author Evan Hernandez. If you’re more technically minded, check out the researchers’ paper here.
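
A toy illustration of the idea, as I understand the write-up: for some relations (say, “plays the instrument”), the path from the model’s internal representation of a subject to its representation of the answer behaves roughly like an affine map, answer ≈ W·subject + b. Everything below is hypothetical: two-dimensional made-up vectors and a hand-picked W and b, whereas in the research the vectors come from a real model’s hidden states and the map is derived from the model’s own computation.

```python
import math

# Hypothetical 2-D "hidden states" for subjects and candidate answers.
subjects = {
    "Miles Davis": (1.0, 0.2),
    "Jimi Hendrix": (0.2, 1.0),
}
objects = {
    "trumpet": (0.3, 1.1),
    "guitar": (1.1, 0.3),
    "piano": (-1.0, -1.0),
}

# Hand-picked affine map standing in for the "plays the instrument" relation.
W = ((0.0, 1.0),
     (1.0, 0.0))
b = (0.1, 0.1)

def apply_relation(s):
    """Compute W @ s + b for a 2-D vector s."""
    return tuple(W[i][0] * s[0] + W[i][1] * s[1] + b[i] for i in range(2))

def decode(subject):
    """Push the subject through the linear relation, then pick the nearest answer."""
    predicted = apply_relation(subjects[subject])
    return min(objects, key=lambda name: math.dist(predicted, objects[name]))

for name in subjects:
    print(name, "->", decode(name))
```

The striking part of the research is that something this simple, a single matrix multiply and add, can stand in for what looks like a complex act of recall.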

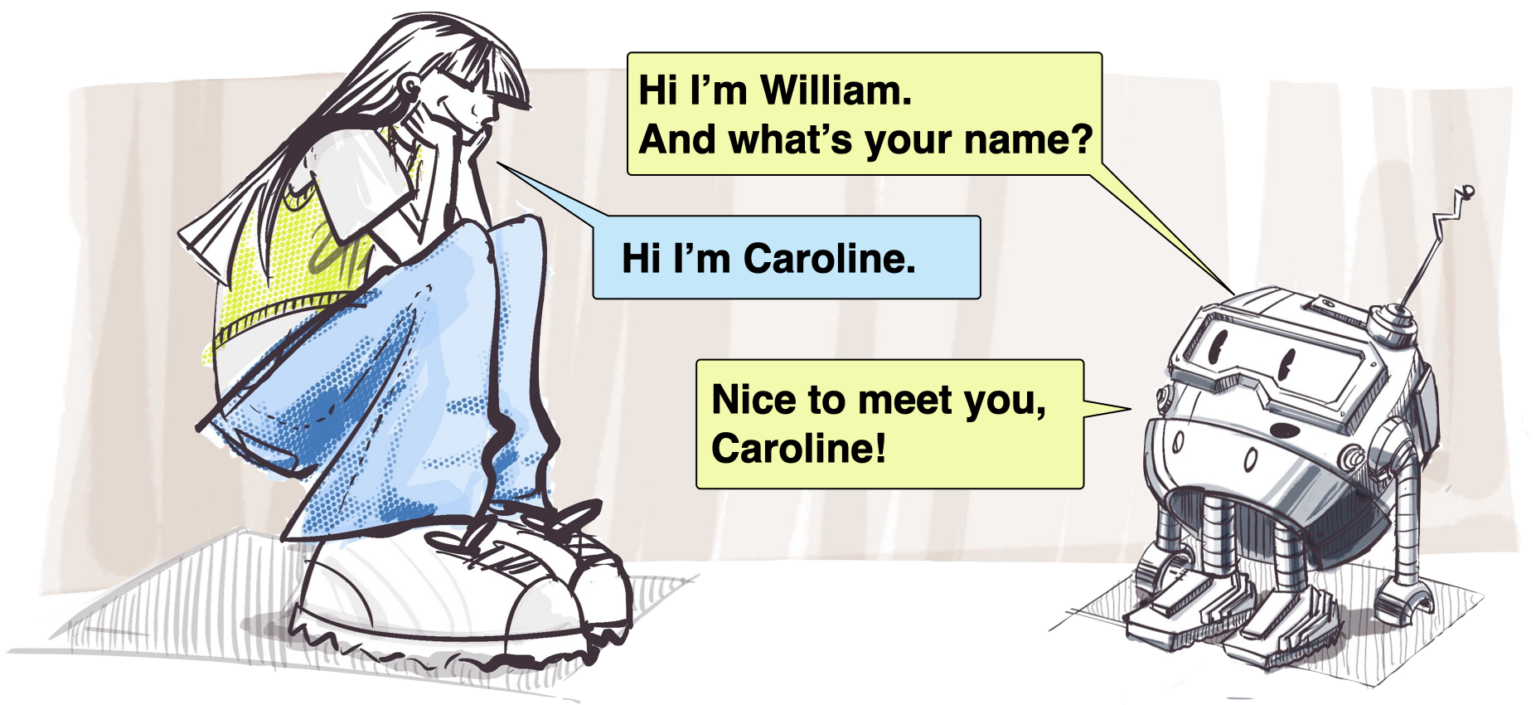

One way these models can fail is by not understanding context or feedback. Even a really capable LLM might not “get it” if you tell it your name is pronounced a certain way, since they don’t actually know or understand anything. In cases where that might be important, like human-robot interactions, it could put people off if the robot acts that way.

Disney Research has been looking into automated character interactions for a long time, and this name pronunciation and reuse paper just showed up a little while back. It seems obvious, but extracting the phonemes when someone introduces themselves and encoding those, rather than just the written name, is a smart approach.
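
Here’s a minimal sketch of that design choice: store what the robot heard (a phoneme sequence) alongside the spelling, and speak from the phonemes whenever they exist. The `NameMemory` class, the approximate ARPAbet-style transcription and the naive letter-by-letter fallback are all hypothetical; the paper’s actual pipeline for extracting and reusing phonemes from speech is not reproduced here.

```python
from dataclasses import dataclass, field

@dataclass
class NameMemory:
    """Remembers how people said their own names."""
    # written name -> phoneme sequence captured from the person's introduction
    pronunciations: dict[str, list[str]] = field(default_factory=dict)

    def remember_introduction(self, written_name, heard_phonemes):
        # Keep the phonemes as heard instead of re-deriving them from spelling later.
        self.pronunciations[written_name] = list(heard_phonemes)

    def phonemes_for(self, written_name):
        """Prefer the heard pronunciation; fall back to a naive spelling guess."""
        if written_name in self.pronunciations:
            return self.pronunciations[written_name]
        # Hypothetical fallback: one token per letter, roughly the failure mode
        # of a system that only has the written name to work from.
        return [ch.upper() for ch in written_name if ch.isalpha()]

memory = NameMemory()
# Approximate ARPAbet-style transcription of "Siobhan" (shi-VAWN).
memory.remember_introduction("Siobhan", ["SH", "IH0", "V", "AO1", "N"])
print(memory.phonemes_for("Siobhan"))
print(memory.phonemes_for("Niamh"))  # never introduced, so only the spelling is available
```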

Lastly, as AI and search overlap more and more, it’s worth reassessing how these tools are used and whether there are any new risks presented by this unholy union. Safiya Umoja Noble has been an important voice in AI and search ethics for years, and her opinion is always enlightening. She did a nice interview with the UCLA news team about how her work has evolved and why we need to stay frosty when it comes to bias and bad habits in search.