Researchers have unveiled a way for self-driving cars to freely share information while on the road without the need to establish direct connections.

"Cached Decentralized Federated Learning" (Cached-DFL) is an artificial intelligence (AI) model-sharing framework for self-driving cars that allows them to pass each other and share accurate and recent information. This information includes the latest ways to handle navigation challenges, traffic patterns, road conditions, and traffic signs and signals.

Usually, cars have to be virtually next to each other and grant permissions to share driving insights they’ve collected during their travels. With Cached-DFL, however, scientists have created a quasi-social network where cars can view each other’s profile page of driving discoveries, all without sharing the driver’s personal information or driving patterns.

Self-driving vehicles currently use data stored in one central location, which also increases the chances of large data breaches. The Cached-DFL system enables vehicles to carry data in trained AI models in which they store information about driving conditions and scenarios.

"Think of it like creating a network of shared experiences for self-driving cars," wrote Dr. Yong Liu, the project’s research supervisor and engineering professor at NYU’s Tandon School of Engineering. "A car that has only driven in Manhattan could now learn about road conditions in Brooklyn from other vehicles, even if it never drives there itself."

The cars can share how they handle scenarios similar to those in Brooklyn that would show up on roads in other areas. For example, if Brooklyn has oval-shaped potholes, the cars can share how to handle oval potholes no matter where they are in the world.
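Sharing a trained model rather than raw driving data can be pictured as exchanging lists of learned weights. The sketch below is only an illustration: it assumes plain weighted averaging, the textbook federated-averaging idea, which is not necessarily the aggregation rule Cached-DFL uses. The function name and the sample counts are hypothetical.

```python
def average_models(models, sample_counts):
    """Combine weight vectors, weighting each by how much data trained it.

    Assumption: simple federated averaging, used here for illustration only.
    """
    total = sum(sample_counts)
    if total == 0:
        raise ValueError("at least one model must have training samples")
    dims = len(models[0])
    return [
        sum(weights[i] * n for weights, n in zip(models, sample_counts)) / total
        for i in range(dims)
    ]


# A "Manhattan" model and a "Brooklyn" model, as toy two-weight vectors.
manhattan = [1.0, 2.0]
brooklyn = [3.0, 4.0]
merged = average_models([manhattan, brooklyn], sample_counts=[1, 3])
print(merged)
```

Only the weights cross between cars in this picture; the trips that produced them stay on the vehicle that drove them.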

The scientists uploaded their study to the preprint arXiv database on Aug. 26, 2024 and presented their findings at the Association for the Advancement of Artificial Intelligence Conference on Feb. 27.

The key to better self-driving cars

Through a series of tests, the scientists found that quick, frequent communications between self-driving cars improved the efficiency and accuracy of driving data.

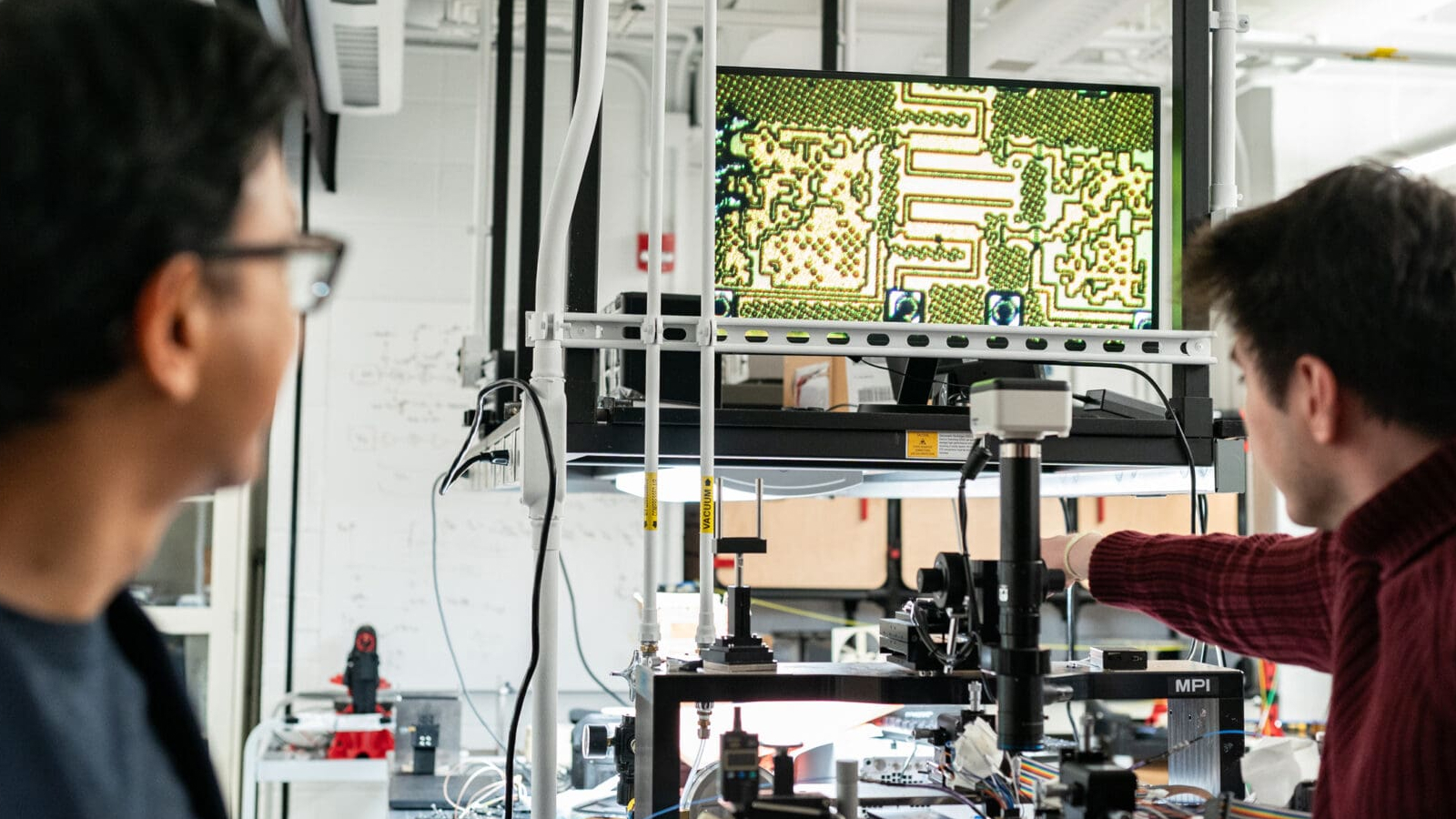

The scientists placed 100 virtual self-driving cars into a simulated version of Manhattan and set them to "drive" in a semi-random pattern. Each car had 10 AI models that updated every 120 minutes, which is where the cached portion of the experiment emerges. The cars hold on to data and wait to share it until they have a proper vehicle-to-vehicle (V2V) connection to do so. This differs from traditional self-driving car data-sharing models, which are immediate and allow no storage or caching.
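The hold-then-share behavior can be sketched as a small cache that each car carries. This is a rough illustration, not the researchers’ code: the class names, the eviction rule (drop the oldest model when the cache is full) and the reading of "10 AI models" as a cache size of 10 are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class CachedModel:
    origin: str        # id of the car that trained this model
    trained_at: float  # simulation time of training (hypothetical units)
    weights: list


@dataclass
class Car:
    car_id: str
    cache_size: int = 10  # assumption: "10 AI models" read as cache capacity
    cache: dict = field(default_factory=dict)

    def store(self, model: CachedModel) -> None:
        """Keep the newest model per origin; evict the oldest when full."""
        held = self.cache.get(model.origin)
        if held is not None and held.trained_at >= model.trained_at:
            return  # the copy already held is at least as fresh
        self.cache[model.origin] = model
        if len(self.cache) > self.cache_size:
            oldest = min(self.cache.values(), key=lambda m: m.trained_at)
            del self.cache[oldest.origin]

    def exchange(self, other: "Car") -> None:
        """Swap cached models when a V2V link is available."""
        mine = list(self.cache.values())
        theirs = list(other.cache.values())
        for model in theirs:
            self.store(model)
        for model in mine:
            other.store(model)


a, b, c = Car("a"), Car("b"), Car("c")
a.store(CachedModel(origin="a", trained_at=1.0, weights=[0.1, 0.2]))
a.exchange(b)  # a meets b
b.exchange(c)  # later, b meets c
print("a" in c.cache)
```

In this toy run, car `c` ends up holding car `a`’s model even though the two never meet, because `b` carried it in between.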

The scientists charted how quickly the cars learned and whether Cached-DFL outperformed the centralized data systems common in today’s self-driving cars. They discovered that as long as cars were within 100 meters (328 feet) of each other, they could view and share each other’s information. The vehicles did not need to know each other to share information.

"Scalability is one of the key advantages of decentralized FL," Dr. Jie Xu, associate professor in electrical and computer engineering at the University of Florida, told Live Science. "Instead of every car communicating with a central server or all other cars, each vehicle only exchanges model updates with those it encounters. This localized sharing approach prevents the communication overhead from growing exponentially as more cars participate in the network."
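To see why encounter-based sharing keeps traffic down, it helps to count links. The sketch below assumes flat 2D coordinates in meters and made-up positions. It finds the pairs of cars within the 100-meter range reported above and compares that with the number of links needed if every car talked to every other car.

```python
import math
from itertools import combinations

V2V_RANGE_M = 100.0  # sharing range reported in the study


def encounters(positions, limit=V2V_RANGE_M):
    """Return the pairs of cars close enough to exchange models."""
    return [
        (a, b)
        for a, b in combinations(sorted(positions), 2)
        if math.dist(positions[a], positions[b]) <= limit
    ]


def all_to_all_links(n_cars):
    """Number of links if every car exchanged updates with every other car."""
    return n_cars * (n_cars - 1) // 2


# Hypothetical positions in meters.
positions = {"a": (0, 0), "b": (60, 80), "c": (500, 0), "d": (520, 30)}
nearby = encounters(positions)
print(nearby)                            # [('a', 'b'), ('c', 'd')]
print(all_to_all_links(len(positions)))  # 6
print(all_to_all_links(100))             # 4950
```

With four cars the gap is small, but for the 100 cars in the simulation an all-to-all scheme would need 4,950 links, while the encounter-based count depends only on how many cars happen to be near each other.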

The researchers envision Cached-DFL making self-driving technology more affordable by lowering the need for computing power, since the processing load is distributed across many vehicles instead of concentrated in one server.

Next steps for the researchers include real-world testing of Cached-DFL, removing computer system framework barriers between different brands of self-driving vehicles, and enabling communication between vehicles and other connected devices like traffic lights, satellites, and road signals. This is known as vehicle-to-everything (V2X) communication.

The team also aims to drive a broader move away from centralized servers and instead towards smart devices that gather and process data closest to where the data is collected, which makes data sharing as fast as possible. This creates a form of rapid swarm intelligence not only for vehicles but for satellites, drones, robots and other emerging forms of connected devices.

"Decentralized federated learning offers a vital approach to collaborative learning without compromising user privacy," Javed Khan, president of software and advanced safety and user experience at Aptiv, told Live Science. "By caching models locally, we reduce reliance on central servers and enhance real-time decision-making, crucial for safety-critical applications like autonomous driving."
