Image Credits: matejmo / Getty Images

Image Credits: Forgepoint Capital

Research shows that by 2026, over 80% of enterprises will be leveraging generative AI models, APIs, or applications, up from less than 5% today.

This rapid adoption raises new considerations regarding cybersecurity, ethics, privacy, and risk management. Among companies using generative AI today, only 38% mitigate cybersecurity risks, and just 32% work to address model inaccuracy.

My conversations with security practitioners and entrepreneurs have concentrated on three key factors:

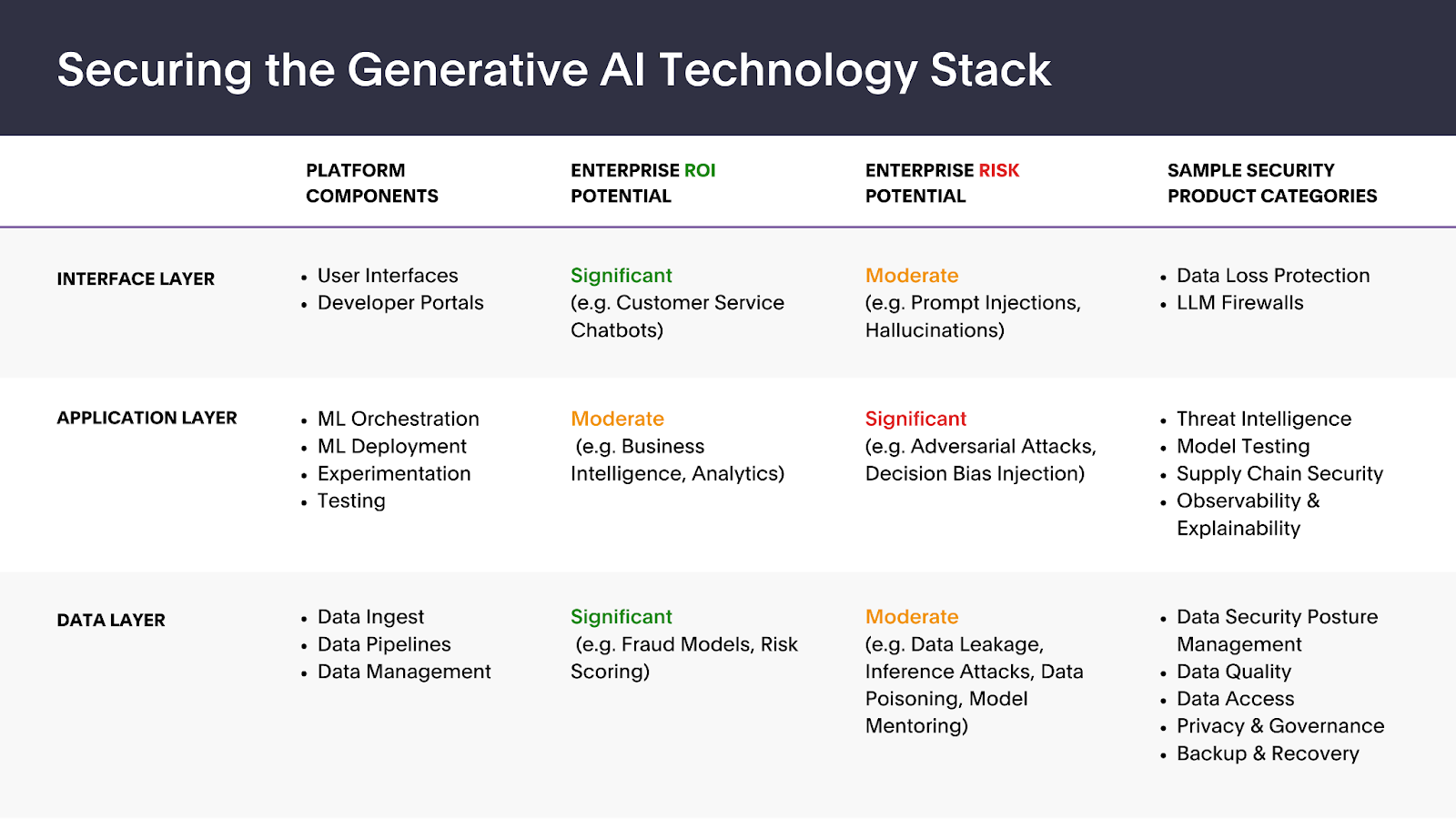

Interface layer: Balancing usability with security

Businesses see immense potential in leveraging customer-facing chatbots, particularly customized models trained on industry- and company-specific data. The user interface is susceptible to prompt injection, a variant of injection attacks aimed at manipulating the model's response or behavior.

In addition, chief information security officers (CISOs) and security leaders are increasingly under pressure to enable GenAI applications within their organizations. While the consumerization of the enterprise has been an ongoing trend, the rapid and widespread adoption of technologies like ChatGPT has sparked an unprecedented, employee-led drive for their use in the workplace.

Widespread adoption of GenAI chatbots will prioritize the ability to accurately and quickly intercept, review, and validate inputs and corresponding outputs at scale without diminishing user experience. Existing data security tooling often relies on preset rules, resulting in false positives. Tools like Protect AI's Rebuff and Harmonic Security leverage AI models to dynamically determine whether or not the data passing through a GenAI application is sensitive.

Due to the inherently non-deterministic nature of GenAI tools, a security vendor would need to understand the model's expected behavior and tailor its response based on the type of data it seeks to protect, such as personally identifiable information (PII) or intellectual property. These can be highly variable by use case, as GenAI applications are often specialized for particular industries, such as finance, transportation, and healthcare.
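To make the false-positive problem with preset rules concrete, here is a minimal sketch of rule-based screening on both the input and the output of a chatbot call. It is not how Rebuff or Harmonic Security work; the rule set, `screen`, `guarded_call`, and `echo_model` are all hypothetical names invented for illustration, and real tooling would use far richer detectors.

```python
import re

# Hypothetical preset rules (assumption): two simple regex detectors.
RULES = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def screen(text: str) -> list[str]:
    """Return the names of every preset rule that matches the text."""
    return [name for name, pattern in RULES.items() if pattern.search(text)]


def guarded_call(model, prompt: str) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if screen(prompt):
        return "[blocked: input flagged]"
    response = model(prompt)
    if screen(response):
        return "[blocked: output flagged]"
    return response


def echo_model(prompt: str) -> str:
    """Stand-in for a real GenAI call (assumption): echoes the prompt."""
    return f"You said: {prompt}"
```

With these rules, `screen("Order 123-45-6789 has shipped")` flags an order number as if it were a Social Security number, because a fixed pattern cannot tell the two apart. That is the kind of false positive that context-aware, model-based classification aims to reduce.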

Like the network security market, this segment could eventually support multiple vendors. However, in this area of significant opportunity, I expect to see an initial competitive rush among new entrants to establish brand recognition and differentiation.

Application layer: An evolving enterprise landscape

Generative AI processes are predicated on sophisticated input and output dynamics. Yet they also grapple with threats to model integrity, including operational adversarial attacks, decision bias, and the challenge of tracing decision-making processes. Open source models benefit from collaboration and transparency but can be even more susceptible to model evaluation and explainability challenges.

While security leaders see substantial potential for investment in validating the safety of ML models and related software, the application layer still faces uncertainty. Since enterprise AI infrastructure is relatively less mature outside established technology firms, ML teams rely primarily on their existing tools and workflows, such as Amazon SageMaker, to test for misalignment and other critical functions today.

Over the longer term, the application layer could be the foundation for a stand-alone AI security platform, particularly as the complexity of model pipelines and multimodel inference increases the attack surface. Companies like HiddenLayer provide detection and response capabilities for open source ML models and related software. Others, like Calypso AI, have developed a testing framework to stress-test ML models for robustness and accuracy.

Technology can help ensure models are fine-tuned and trained within a controlled framework, but regulation will likely play a role in shaping this landscape. Proprietary models in algorithmic trading became extensively regulated after the 2007–2008 financial crisis. While generative AI applications present different functions and associated risks, their wide-ranging implications for ethical considerations, misinformation, privacy, and intellectual property rights are drawing regulatory scrutiny. Early initiatives by governing bodies include the European Union's AI Act and the Biden administration's Executive Order on AI.

Data layer: Building a secure foundation

The data layer is the foundation for training, testing, and operating ML models. Proprietary data is regarded as the core asset of generative AI companies, not just the models, despite the impressive advancements in foundational LLMs over the past year.

Generative AI applications are vulnerable to threats like data poisoning, both intentional and unintentional, and data leakage, mainly through vector databases and plug-ins linked to third-party AI models. Despite some high-profile events around data poisoning and leakage, security leaders I've spoken with didn't identify the data layer as a near-term risk area compared to the interface and application layers. Instead, they often compared inputting data into GenAI applications to using standard SaaS applications, similar to searching in Google or saving files to Dropbox.

This may change, as early research suggests that data poisoning attacks may be easier to execute than previously thought, requiring fewer than 100 high-potency samples rather than millions of data points.

For now, more immediate concerns around data were closer to the interface layer, particularly around the capabilities of tools like Microsoft Copilot to index and retrieve data. Although such tools respect existing data access restrictions, their search functionalities complicate the management of user privileges and excessive access.
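A small sketch can show why search that respects existing permissions still complicates privilege management. This is not how Microsoft Copilot is implemented; the `Document` type, the `search` function, the group names, and the sample index are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


def search(index, query, user_groups):
    """Return titles of matching documents the user is allowed to read."""
    needle = query.lower()
    return [
        doc.title
        for doc in index
        if needle in doc.text.lower() and doc.allowed_groups & user_groups
    ]


# Hypothetical index: the first document was shared with everyone by mistake.
INDEX = [
    Document("Q3 roadmap", "Roadmap and salary bands for Q3", frozenset({"all-staff"})),
    Document("Comp review", "Salary adjustments by employee", frozenset({"hr"})),
]
```

Here `search(INDEX, "salary", frozenset({"all-staff"}))` correctly hides the HR-only document, yet it still surfaces the overshared roadmap. The access check works as designed; the search simply makes a pre-existing excessive grant easy to find.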

Integrating generative AI adds another layer of complexity, making it challenging to trace data back to its origins. Solutions like data security posture management can aid in data discovery, classification, and access control. However, it requires considerable effort from security and IT teams to ensure the appropriate technology, policies, and processes are in place.

Ensuring data quality and privacy will raise significant new challenges in an AI-first world due to the extensive data requirements for model training. Synthetic data and anonymization tools such as Gretel AI, while applicable broadly for data analytics, can help prevent scenarios of unintentional data poisoning through inaccurate data collection. Meanwhile, differential privacy vendors like Sarus can help restrict sensitive information during data analysis and prevent entire data science teams from accessing production environments, thereby mitigating the risk of data breaches.
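For readers unfamiliar with differential privacy, the sketch below shows the textbook Laplace mechanism applied to a counting query. It does not represent Sarus's product or API; `laplace_noise` and `private_count` are hypothetical helper names, and the choice of `epsilon` is an assumption left to the caller.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that satisfy the predicate."""
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1 / epsilon, rng)
```

An analyst calling `private_count(ages, lambda a: a >= 40, epsilon=1.0)` gets an answer that is accurate on average but never exposes the exact count, which limits what can be inferred about any single record. A smaller `epsilon` adds more noise and therefore more privacy at the cost of accuracy.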

The road ahead for generative AI security

As organizations increasingly rely on generative AI capabilities, they will need AI security platforms to be successful. This market opportunity is ripe for new entrants, especially as the AI infrastructure and regulatory landscape evolves. I'm eager to meet the security and infrastructure startups enabling this next phase of the AI revolution, ensuring enterprises can safely and securely innovate and grow.