People increasingly rely on artificial intelligence (AI) for medical diagnoses because of how quickly and efficiently these tools can spot anomalies and warning signs in medical histories, X-rays and other datasets before they become obvious to the naked eye.

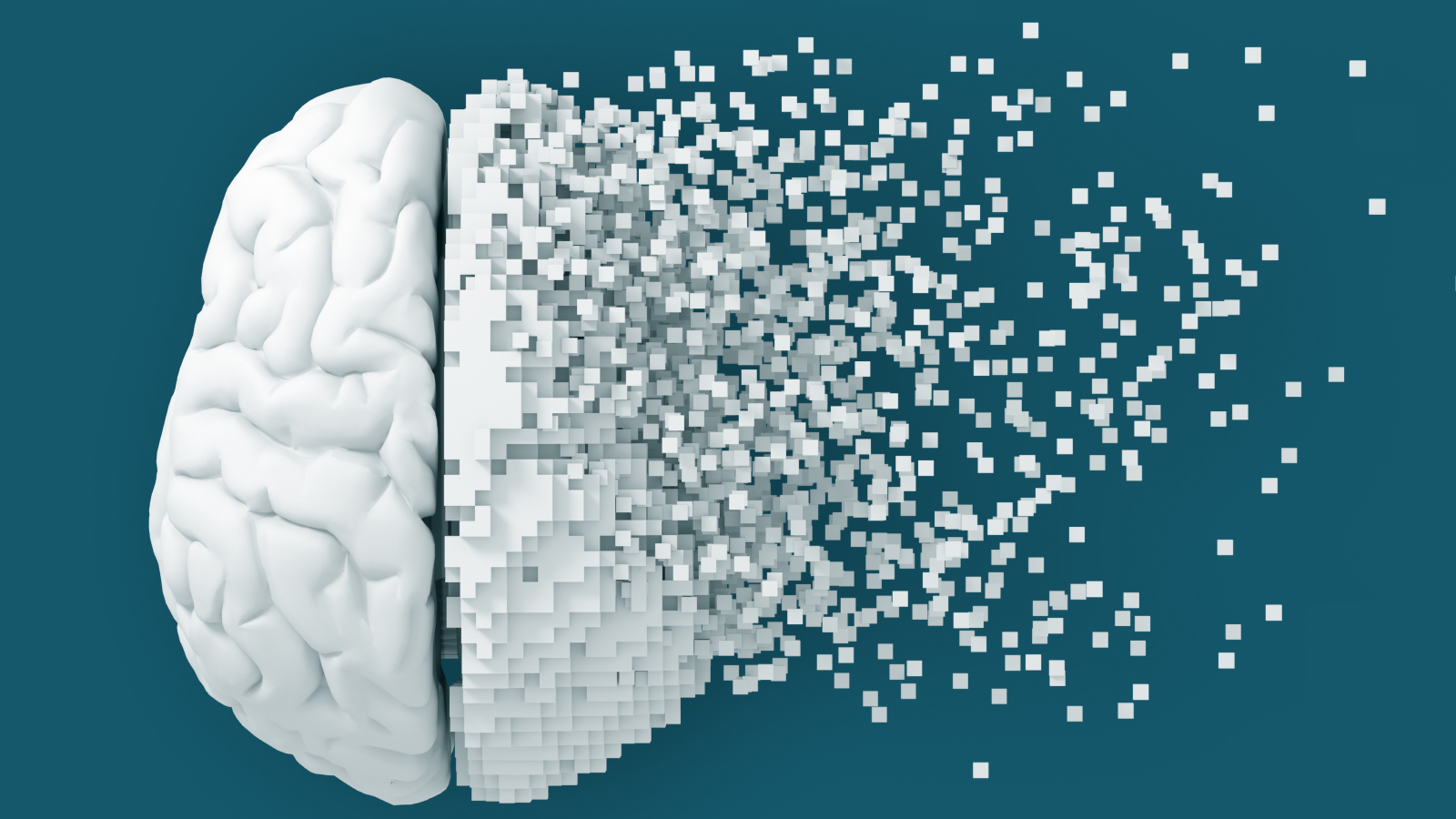

But a new study published Dec. 20, 2024 in The BMJ raises concerns that AI technologies like large language models (LLMs) and chatbots, like people, show signs of deteriorated cognitive abilities with age.

Just like people, AI technologies like large language models (LLMs) and chatbots show signs of deteriorated cognitive abilities with age, a new study suggests.

"These findings challenge the assumption that artificial intelligence will soon replace human doctors," the study’s authors wrote in the paper, "as the cognitive impairment evident in leading chatbots may affect their reliability in medical diagnostics and undermine patients' confidence."

Scientists tested publicly available LLM-driven chatbots including OpenAI’s ChatGPT, Anthropic’s Sonnet and Alphabet’s Gemini using the Montreal Cognitive Assessment (MoCA) test, a series of tasks neurologists use to test abilities in attention, memory, language, spatial skills and executive mental function.

MoCA is most commonly used to assess or test for the onset of cognitive impairment in conditions like Alzheimer’s disease or dementia.

Subjects are given tasks like drawing a specific time on a clock face, starting at 100 and repeatedly subtracting seven, remembering as many words as possible from a spoken list, and so on. In humans, 26 out of 30 is considered a passing score (i.e. the subject has no cognitive impairment).

While some aspects of testing like naming, attention, language and abstraction were seemingly easy for most of the LLMs used, they all performed poorly in visual/spatial skills and executive tasks, with several doing worse than others in areas like delayed recall.

Crucially, while the most recent version of ChatGPT (version 4) scored the highest (26 out of 30), the older Gemini 1.0 LLM scored only 16, leading to the conclusion that older LLMs show signs of cognitive decline.

Examining the cognitive function in AI

The study’s authors note that their findings are observational only: critical differences between the ways in which AI and the human mind work mean the experiment cannot constitute a direct comparison.

But they caution it might point to what they call a "significant area of weakness" that could put the brakes on the deployment of AI in clinical medicine. Specifically, they argue against using AI in tasks requiring visual abstraction and executive function.

Other scientists have been left unconvinced about the study and its findings, going so far as to criticize the methods and the framing, in which the study’s authors are accused of anthropomorphizing AI by projecting human conditions onto it. There is also criticism of the use of MoCA. This was a test designed purely for use in humans, it is suggested, and would not render meaningful results if applied to other forms of intelligence.

"The MoCA was designed to assess human cognition, including visuospatial reasoning and self-orientation, faculties that do not align with the text-based architecture of LLMs," wrote Aya Awwad, research fellow at Mass General Hospital in Boston, on Jan. 2 in a letter in response to the study. "One might reasonably ask: Why evaluate LLMs on these metrics at all? Their deficiencies in these areas are irrelevant to the roles they might fulfill in clinical settings, primarily tasks involving text processing, summarizing complex medical literature, and offering decision support."

Another major limitation lies in the failure to conduct the test on AI models more than once over time, to measure how cognitive function changes. Testing models after significant updates would be more informative and align with the article’s hypothesis much better, wrote Aaron Sterling, CEO of EMR Data Cloud, and Roxana Daneshjou, assistant professor of biomedical sciences at Stanford, Jan. 13 in a letter.

Responding to the discussion, lead author of the study Roy Dayan, a doctor of medicine at the Hadassah Medical Center in Jerusalem, commented that many of the responses to the study have taken the framing too literally. Because the study was published in the Christmas edition of The BMJ, the authors used humor to present the findings of the study, including the pun "Age Against the Machine", but intended the study to be considered seriously.

"We also hoped to cast a critical lens at recent research at the intersection of medicine and AI, some of which posits LLMs as fully-fledged substitutes for human physicians," wrote Dayan Jan. 10 in a letter in response to the discussion.

"By administering the standard tests used to assess human cognitive impairment, we tried to draw out the ways in which human cognition differs from how LLMs process and respond to information. This is also why we queried them as we would query humans, rather than via "state-of-the-art prompting techniques", as Dr Awwad suggests."

This article and its headline have been updated to include details of the skepticism expressed toward the study, as well as the response of the authors to that criticism.
