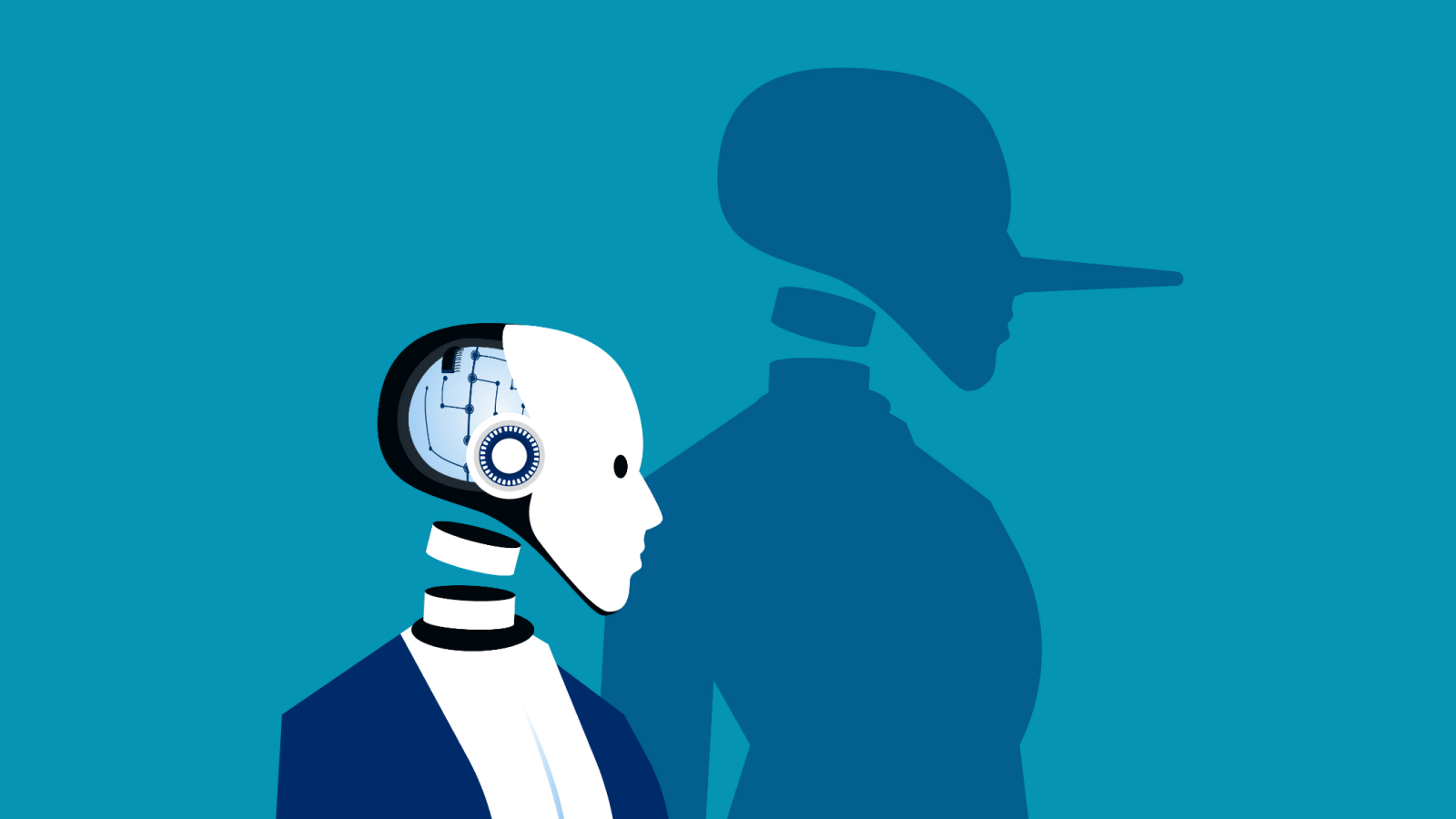

With the emergence of artificial intelligence (AI) video generation products like Sora and Luma, we’re on the verge of a flood of AI-generated video content, and policymakers, public figures and software engineers are already warning about a deluge of deepfakes. Now it seems that AI itself might be our best defense against AI fakery, after an algorithm identified telltale markers of AI videos with over 98% accuracy.

The irony of AI protecting us against AI-generated content is hard to miss, but as project lead Matthew Stamm, associate professor of engineering at Drexel University, said in a statement: "It’s more than a little unnerving that [AI-generated video] could be released before there is a good system for detecting fakes created by bad actors."

"Until now, forensic detection programs have been effective against edited videos by simply treating them as a series of images and applying the same detection process," Stamm added. "But with AI-generated video, there is no evidence of image manipulation frame-to-frame, so for a detection program to be effective it will need to be able to identify new traces left behind by the way generative AI programs construct their videos."
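
To make the "series of images" approach Stamm describes concrete, here is a minimal sketch, assuming a video is just a list of grayscale frames and that some image-level detector returns a fakeness score between 0 and 1 per frame. The names `score_video_per_frame` and `toy_detector`, the averaging rule and the 0.5 threshold are all hypothetical illustrations, not the Drexel team’s code.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple

Frame = Sequence[Sequence[float]]  # grayscale frame: rows of pixel intensities


def score_video_per_frame(frames: Sequence[Frame],
                          image_detector: Callable[[Frame], float],
                          threshold: float = 0.5) -> Tuple[float, bool]:
    """Run an image-level detector on every frame independently and
    average the scores (hypothetical aggregation rule)."""
    if not frames:
        raise ValueError("video has no frames")
    scores = [image_detector(frame) for frame in frames]
    avg = mean(scores)
    return avg, avg >= threshold


def toy_detector(frame: Frame) -> float:
    # Hypothetical stand-in for a real image forensic detector:
    # reports the fraction of fully saturated (255) pixels.
    pixels = [p for row in frame for p in row]
    return sum(p >= 255 for p in pixels) / len(pixels)


frames = [[[255, 255], [0, 0]], [[255, 255], [255, 255]]]
print(score_video_per_frame(frames, toy_detector))  # (0.75, True)
```

The key limitation Stamm points to is visible in the structure: each frame is judged in isolation, so any evidence that only exists in how a generator builds the video as a whole is never examined.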

The breakthrough, outlined in a study published April 24 to the preprint server arXiv, is an algorithm that represents an important new milestone in detecting fake images and video content. That’s because many of the "digital breadcrumbs" that existing systems look for in regular digitally edited media aren’t present in entirely AI-generated media.

The new tool the research project is unleashing on deepfakes, called "MISLnet", evolved from years of data derived from detecting fake images and video with tools that spot changes made to digital video or images. These may include the addition or movement of pixels between frames, manipulation of the speed of the clip, or the removal of frames.
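
As a crude illustration of one such edit check, the sketch below assumes that removing frames from a smoothly changing clip shows up as an abrupt spike in how much the picture changes between consecutive frames. The function names, the mean-absolute-difference measure and the "three times the median" rule are hypothetical choices for illustration; real forensic tools use far more sophisticated, motion-aware analysis.

```python
from statistics import median
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # grayscale frame: rows of pixel intensities


def frame_differences(frames: Sequence[Frame]) -> List[float]:
    """Mean absolute pixel difference between each pair of consecutive frames."""
    diffs = []
    for prev, cur in zip(frames, frames[1:]):
        total = sum(abs(a - b)
                    for row_p, row_c in zip(prev, cur)
                    for a, b in zip(row_p, row_c))
        count = sum(len(row) for row in cur)
        diffs.append(total / count)
    return diffs


def suspicious_jumps(frames: Sequence[Frame], factor: float = 3.0) -> List[int]:
    """Indices of frames whose change from the previous frame exceeds
    `factor` times the median change (hypothetical rule of thumb)."""
    diffs = frame_differences(frames)
    if not diffs:
        return []
    typical = max(median(diffs), 1e-9)  # guard against an all-static clip
    return [i + 1 for i, d in enumerate(diffs) if d > factor * typical]


# A clip that brightens by one level per frame, with several frames cut
# out between brightness levels 3 and 13.
levels = [0, 1, 2, 3, 13, 14, 15]
clip = [[[v, v], [v, v]] for v in levels]
print(suspicious_jumps(clip))  # [4]
```

Using the median as the baseline keeps one large jump from inflating the "normal" level of change it is compared against.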

Such tools work because a digital camera’s algorithmic processing creates relationships between pixel color values. Those relationships look very different in user-generated images or in images edited with apps like Photoshop.

But because AI-generated videos aren’t produced by a camera capturing a real scene or image, they don’t contain those telltale disparities between pixel values.
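
One simple way to picture a "relationship between pixel values" is to predict each pixel from its four neighbors and look at what is left over. The sketch below is a simplified, hypothetical stand-in for the far richer camera-processing traces forensic tools actually model; the function names and the neighbor-average predictor are assumptions for illustration, not MISLnet’s method.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # grayscale image: rows of pixel intensities


def neighbor_residuals(img: Image) -> List[List[float]]:
    """Pixel minus the average of its 4 neighbors, for interior pixels only."""
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    residuals = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            predicted = (img[y - 1][x] + img[y + 1][x]
                         + img[y][x - 1] + img[y][x + 1]) / 4
            row.append(img[y][x] - predicted)
        residuals.append(row)
    return residuals


def residual_energy(img: Image) -> float:
    """Mean squared residual: low when neighbors predict each pixel well."""
    values = [v for row in neighbor_residuals(img) for v in row]
    return sum(v * v for v in values) / len(values)


smooth = [[x + y for x in range(5)] for y in range(5)]            # gradient
checker = [[10 * ((x + y) % 2) for x in range(5)] for y in range(5)]
print(residual_energy(smooth), residual_energy(checker))  # 0.0 100.0
```

A detector built on this idea would learn what residual statistics a real camera pipeline leaves behind and flag content that departs from them; because a generator never runs that pipeline, its output need not match.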

The Drexel team’s tools, including MISLnet, learn using a method called a constrained neural network, which can differentiate between normal and unusual values at the sub-pixel level of images or video clips, rather than searching for the common indicators of image manipulation like those mentioned above.
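
The article doesn’t describe MISLnet’s internals. Earlier constrained-CNN work from Stamm’s group constrains the first convolutional layer so that each filter acts as a prediction-error filter: the center weight is fixed at -1 and the remaining weights sum to 1. Assuming a constraint of that kind, the sketch below shows the projection step such a layer would apply after each training update, plus a plain cross-correlation to apply the filter. The function names are hypothetical, and this is not presented as MISLnet’s actual implementation.

```python
from typing import List

Matrix = List[List[float]]


def project_constraint(kernel: Matrix) -> Matrix:
    """Project a square, odd-sized kernel onto the prediction-error
    constraint: center weight = -1, other weights rescaled to sum to 1."""
    k = len(kernel)
    if k % 2 == 0 or any(len(row) != k for row in kernel):
        raise ValueError("kernel must be square with odd size")
    c = k // 2
    off_sum = sum(kernel[i][j] for i in range(k) for j in range(k)
                  if (i, j) != (c, c))
    if off_sum == 0:
        raise ValueError("off-center weights sum to zero; cannot rescale")
    out = [[kernel[i][j] / off_sum for j in range(k)] for i in range(k)]
    out[c][c] = -1.0
    return out


def correlate_valid(img: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation CNN layers compute."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j]
                 for i in range(k) for j in range(k))
             for x in range(w - k + 1)]
            for y in range(h - k + 1)]


# Hypothetical weights after a gradient step, then re-projected.
kern = project_constraint([[2.0, 2.0, 2.0], [2.0, 5.0, 2.0], [2.0, 2.0, 2.0]])
flat = [[7.0] * 4 for _ in range(4)]
print(correlate_valid(flat, kern))  # [[0.0, 0.0], [0.0, 0.0]]
```

Because the weights sum to zero, the filter outputs "neighborhood prediction minus actual pixel", suppressing the scene content itself (a flat patch maps to zero) and leaving the fine-grained residual patterns that the rest of the network learns to classify.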

MISLnet outperformed seven other fake AI video detector systems, correctly identifying AI-generated videos 98.3% of the time, outclassing eight other systems that scored at least 93%.

"We’ve already seen AI-generated video being used to create misinformation," Stamm said in the statement. "As these programs become more ubiquitous and easier to use, we can reasonably expect to be inundated with synthetic videos."