Describing the horrors of communism under Stalin and others, Nobel laureate Aleksandr Solzhenitsyn wrote in his magnum opus, "The Gulag Archipelago," that "the line dividing good and evil cuts through the heart of every human being." Indeed, under the communist regime, citizens were removed from society before they could cause harm to it. This removal, which often entailed a trip to the labor camp from which many did not return, took place in a manner that deprived the accused of due process. In many cases, the mere suspicion or even hint that an act against the regime might occur was enough to earn a one-way ticket with little to no recourse. The underlying assumption here was that the officials knew when someone might commit a transgression. In other words, law enforcement knew where that line lies in people's hearts.

The U.K. government has decided to chase this chimera by investing in a program that seeks to preemptively identify who might commit murder. Specifically, the project uses government and police data to profile people to "predict" who has a high likelihood of committing murder. Currently, the program is in its research stage, with similar programs already being used to inform probation decisions.

Using AI to predict crimes could have unexpected, and terrifying consequences.

Such a program, which reduces individuals to data points, carries enormous risks that might outweigh any gains. First, the output of such programs is not error free, meaning it might wrongly implicate people. Second, we will never know if a prediction was incorrect, because there is no way of knowing why something does not happen: whether a murder was prevented, or would never have taken place, remains unanswerable. Third, the program can be misused by opportunistic actors to justify targeting people, especially minorities; the ability to do so is baked into a bureaucracy.

Consider: the basis of a bureaucratic state rests on its ability to reduce human beings to numbers. In doing so, it offers the advantages of efficiency and fairness, since no one is supposed to get preferential treatment. Regardless of a person's status or income, the DMV (DVLA in the U.K.) would treat the application for a driver's license or its renewal the same way. But mistakes happen, and navigating the maze of bureaucratic procedures to rectify them is no easy task.

In the age of algorithms and artificial intelligence (AI), this problem of accountability and recourse in case of errors has become far more urgent.

Akhil Bhardwaj is an Associate Professor of Strategy and Organization at the University of Bath, UK. He studies extreme events, which range from organizational disasters to radical innovation.

The ‘accountability sink’

Mathematician Cathy O'Neil has documented cases of wrongful termination of school teachers because of poor scores as calculated by an AI algorithm. The algorithm, in turn, was fueled by what could be easily measured (e.g., test scores) rather than the effectiveness of teaching (whether a poorly performing student improved significantly, or how much teachers helped students in non-quantifiable ways). The algorithm also glossed over whether grade inflation had occurred in previous years. When the teachers questioned the authorities about the performance reviews that led to their dismissal, the explanation they received was along the lines of "the math told us to do so," even after authorities admitted that the underlying math was not 100% accurate.

As such, the use of algorithms creates what journalist Dan Davies calls an "accountability sink": it strips away accountability by ensuring that no one person or entity can be held responsible, and it prevents the person affected by a decision from being able to fix mistakes.

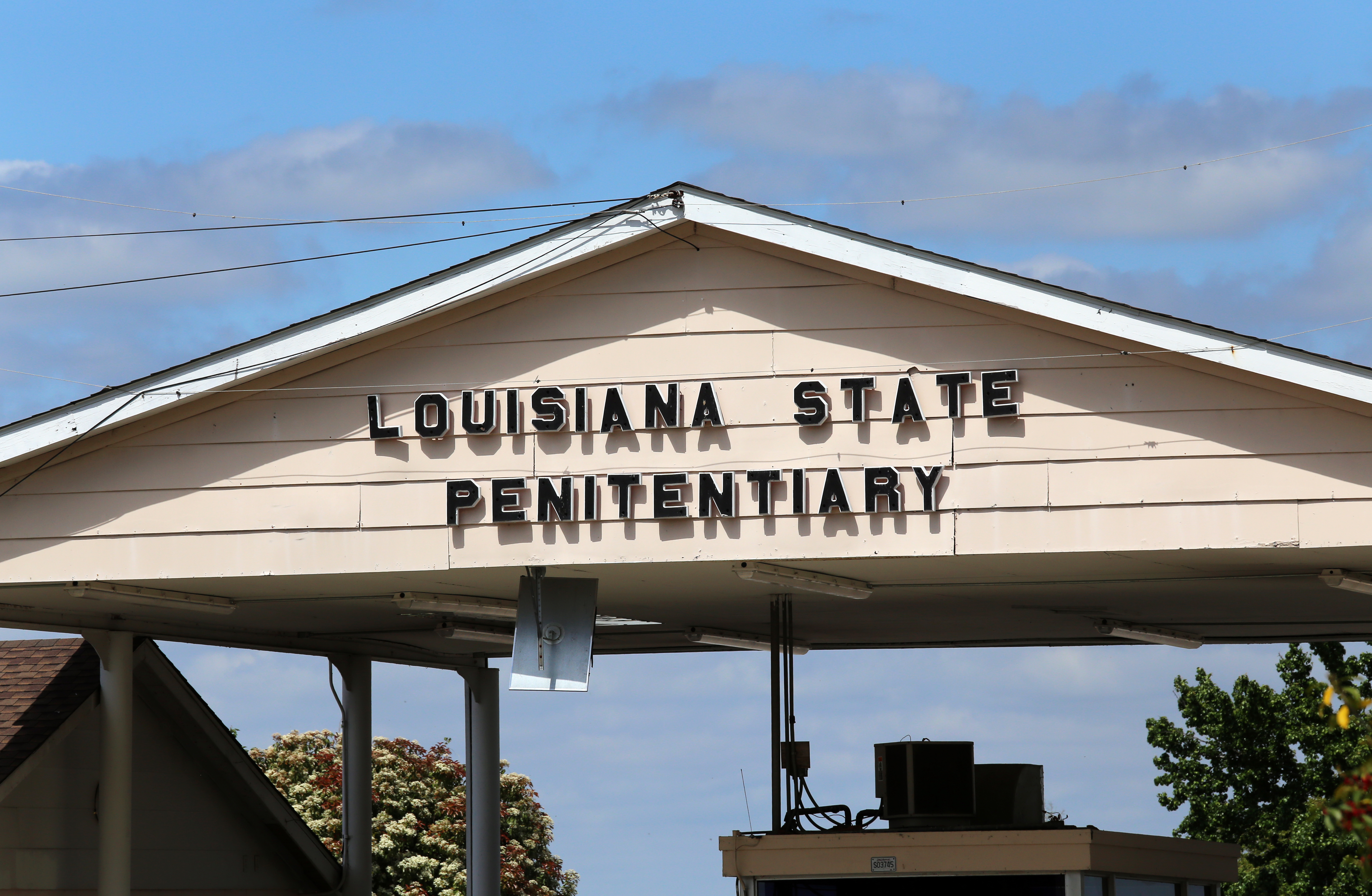

In Louisiana, an algorithm is used to predict if an inmate will reoffend and this is used to make parole decisions.

This creates a twofold problem: An algorithm's estimates can be flawed, and the algorithm does not update itself because no one is held accountable. No algorithm can be expected to be accurate all the time, though in principle it can be recalibrated with new data. But this is an idealistic view that does not even hold true in science; scientists can resist updating a theory or schema, especially when they are heavily invested in it. Similarly, and unsurprisingly, bureaucracies do not readily update their beliefs.

To use an algorithm in an attempt to predict who is at risk of committing murder is perplexing and unethical. Not only could it be inaccurate, but there is no way to know if the system was right. In other words, if a potential future murderer is preemptively arrested, "Minority Report"-style, how can we know whether the person might have decided on their own not to commit murder? The U.K. government is yet to clarify how it intends to use the program, other than stating that the research is being carried out for the purposes of "preventing and detecting unlawful acts."

We're already seeing similar systems being used in the United States. In Louisiana, an algorithm called TIGER (short for "Targeted Interventions to Greater Enhance Re-entry") predicts whether an inmate might commit a crime if released, which then serves as a basis for making parole decisions. Recently, a 70-year-old, nearly blind inmate was denied parole because TIGER predicted he had a high risk of re-offending.

In another case that eventually went to the Wisconsin Supreme Court (State vs. Loomis), an algorithm was used to guide sentencing. Challenges to the sentence, including a request for access to the algorithm to determine how it reached its recommendation, were denied on grounds that the technology was proprietary. In essence, the technological opacity of the system was compounded in a way that potentially undermined due process.

Equally, if not more troublingly, the dataset underlying the program in the U.K. (initially dubbed the Homicide Prediction Project) consists of hundreds of thousands of people who never granted permission for their data to be used to train the system. Worse, the dataset, compiled using data from the Ministry of Justice, Greater Manchester Police, and the Police National Computer, contains personal data including, but not limited to, information on addiction, mental health, disabilities, previous instances of self-harm, and whether they had been victims of a crime. Indicators such as gender and race are also included.

These variables naturally increase the likelihood of bias against ethnic minorities and other marginalized groups. So the algorithm's predictions may simply reflect policing choices of the past: predictive AI algorithms rely on statistical induction, so they project past (troubling) patterns in the data into the future.

In addition, the data overrepresents Black offenders from affluent areas as well as all ethnicities from deprived neighborhoods. Past studies show that AI algorithms that make predictions about behavior work less well for Black offenders than they do for other groups. Such findings do little to allay genuine fears that racial minority groups and other vulnerable groups will be unfairly targeted.

In his book, Solzhenitsyn informed the Western world of the horrors of a bureaucratic state grinding down its citizens in service of an ideal, with little regard for the lived experience of human beings. The state was almost always wrong (especially on moral grounds), but, of course, there was no mea culpa. Those who were wronged were simply collateral damage to be forgotten.

Now, half a century later, it is rather strange that a democracy like the U.K. is revisiting a horrific and failed project from an authoritarian Communist country as a way of "protecting the public." The public does need to be protected, not only from criminals but also from a "technopoly" that vastly overestimates the role of technology in building and maintaining a healthy society.
