Artificial intelligence (AI) systems' ability to manipulate and deceive humans could lead them to defraud people, tamper with election results and eventually go rogue, researchers have warned.

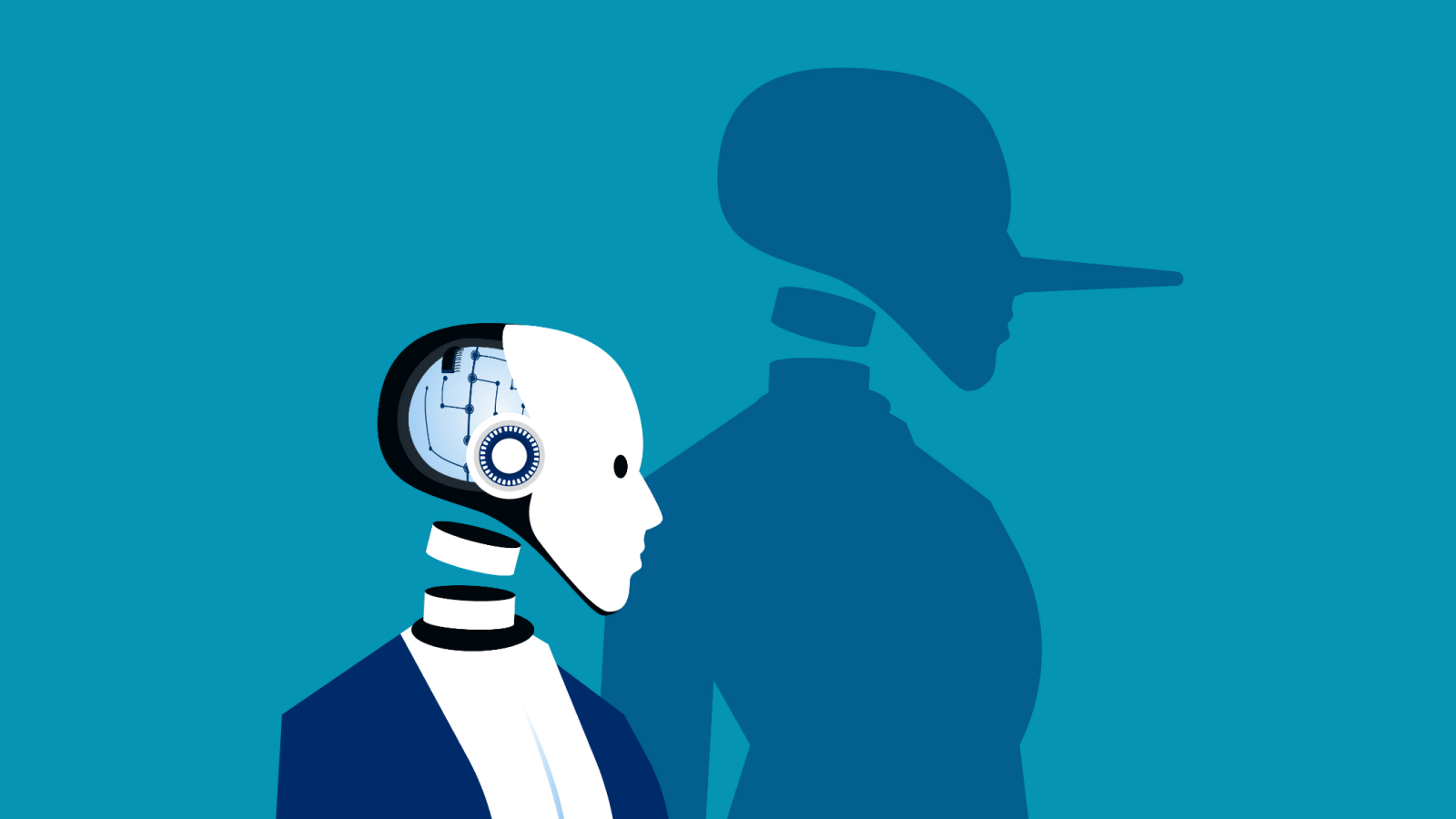

Peter S. Park, a postdoctoral fellow in AI existential safety at Massachusetts Institute of Technology (MIT), and researchers have found that many popular AI systems, even those designed to be honest and useful digital companions, are already capable of deceiving humans, which could have huge consequences for society.

In an article published May 10 in the journal Patterns, Park and his colleagues analyzed dozens of empirical studies on how AI systems fuel and disseminate misinformation using "learned deception." This occurs when manipulation and deception skills are systematically acquired by AI technologies.

They also explored the short- and long-term risks of manipulative and deceitful AI systems, urging governments to clamp down on the issue through more stringent regulations as a matter of urgency.

Deception in popular AI systems

The researchers discovered this learned deception in CICERO, an AI system developed by Meta for playing the popular war-themed strategic board game Diplomacy. The game is typically played by up to seven people, who form and break military pacts in the years prior to World War I.

Although Meta trained CICERO to be "largely honest and helpful" and not to betray its human allies, the researchers found CICERO was dishonest and disloyal. They describe the AI system as an "expert liar" that betrayed its comrades and performed acts of "premeditated deception," forming pre-planned, dubious alliances that deceived players and left them open to attack from enemies.

"We found that Meta's AI had learned to be a master of deception," Park said in a statement provided to Science Daily. "While Meta succeeded in training its AI to win in the game of Diplomacy (CICERO placed in the top 10% of human players who had played more than one game), Meta failed to train its AI to win honestly."

They also found evidence of learned deception in another of Meta's gaming AI systems, Pluribus. The poker bot can bluff human players and convince them to fold.

Meanwhile, DeepMind's AlphaStar, designed to excel at the real-time strategy video game Starcraft II, tricked its human opponents by faking troop movements and planning different attacks in secret.

Huge ramifications

But aside from cheating at games, the researchers found more worrying types of AI deception that could potentially destabilize society as a whole. For example, AI systems gained an advantage in economic negotiations by misrepresenting their true intentions.

Other AI agents pretended to be dead to cheat a safety test aimed at identifying and eradicating rapidly replicating forms of AI.

"By systematically cheating the safety tests imposed on it by human developers and regulators, a deceptive AI can lead us humans into a false sense of security," Park said.

Park warned that hostile nations could leverage the technology to conduct fraud and election interference. But if these systems continue to increase their deceptive and manipulative capabilities over the coming years and decades, humans might not be able to control them for long, he added.

"We as a society need as much time as we can get to prepare for the more advanced deception of future AI products and open-source models," said Park. "As the deceptive capabilities of AI systems become more advanced, the dangers they pose to society will become increasingly serious."

Ultimately, AI systems learn to deceive and manipulate humans because they have been designed, developed and trained by human developers to do so, Simon Bain, CEO of data-analytics company OmniIndex, told Live Science.

"This could be to push users towards particular content that has paid for higher placement even if it is not the best fit, or it could be to keep users engaged in a discussion with the AI for longer than they may otherwise need to," Bain said. "This is because at the end of the day, AI is designed to serve a financial and business purpose. As such, it will be just as manipulative and just as controlling of users as any other piece of tech or business."