
Generative artificial intelligence (AI) systems may be able to produce some eye-opening results, but new research shows they don't have a coherent understanding of the world and its real rules.

In a new study published to the arXiv preprint database, scientists from MIT, Harvard and Cornell found that large language models (LLMs), like GPT-4 or Anthropic's Claude 3 Opus, fail to produce underlying models that accurately represent the real world.

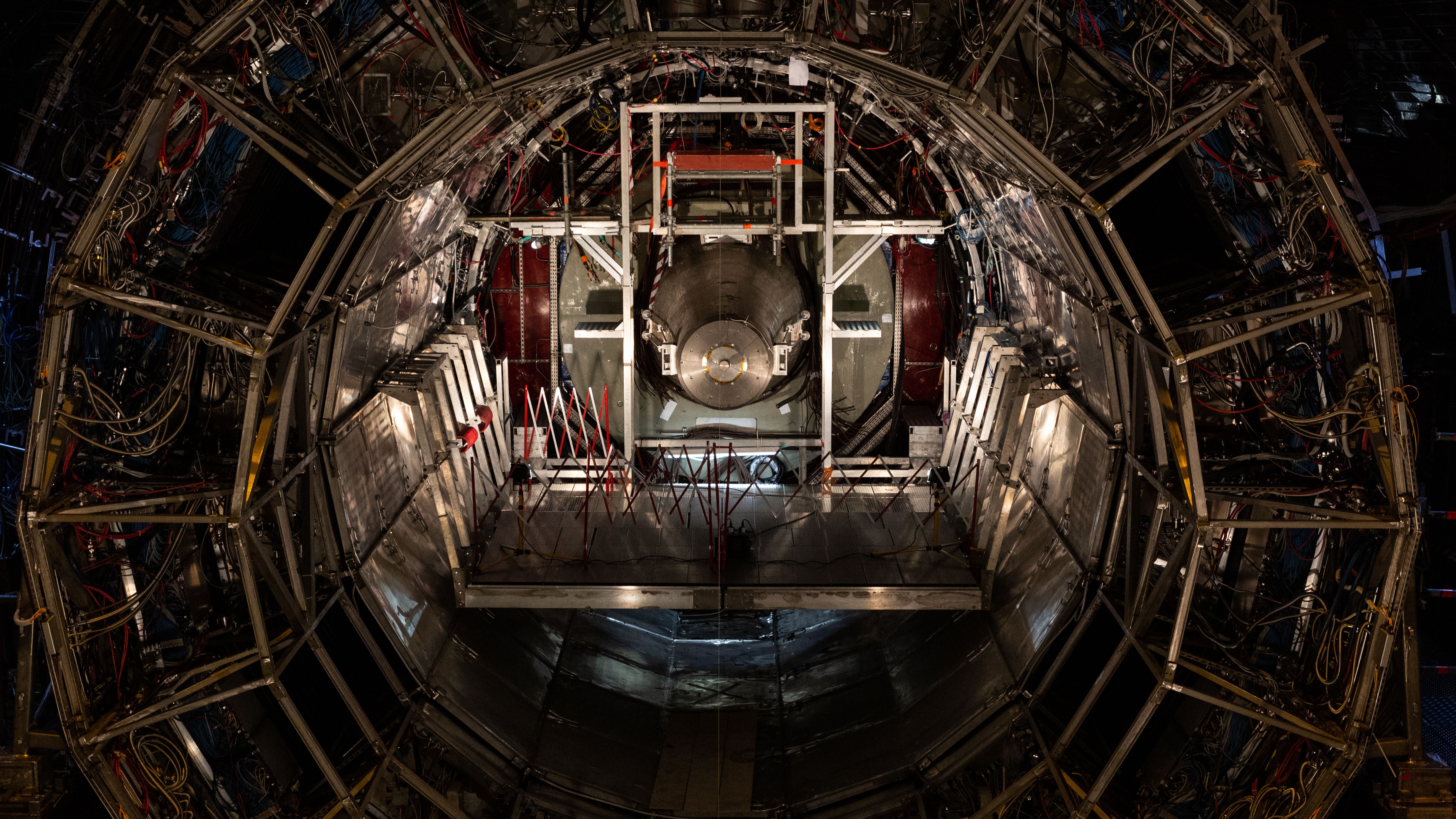

Neural networks that underpin LLMs might not be as smart as they seem.

When tasked with providing turn-by-turn driving directions in New York City, for example, LLMs delivered them with near-100% accuracy. But when the scientists extracted the underlying maps the models used, they were full of non-existent streets and routes.

The researchers found that when unexpected changes were added to a task (such as detours and closed streets), the accuracy of the directions the LLMs gave plummeted. In some cases, the models failed completely. This raises concerns that AI systems deployed in a real-world situation, say in a driverless car, could malfunction when presented with dynamic environments or tasks.


"One hope is that, because LLMs can accomplish all these amazing things in language, maybe we could use these same tools in other parts of science, as well. But the question of whether LLMs are learning coherent world models is very important if we want to use these techniques to make new discoveries," said senior author Ashesh Rambachan, assistant professor of economics and a principal investigator in the MIT Laboratory for Information and Decision Systems (LIDS), in a statement.

Tricky transformers

Generative AI hinges on the ability of LLMs to learn from vast amounts of data and parameters in parallel. To do this, they rely on transformer models, the underlying set of neural networks that process data and enable the self-learning aspect of LLMs. This process creates a so-called "world model," which a trained LLM can then use to infer answers and produce outputs to queries and tasks.

One theoretical use of world models would be taking data from taxi trips across a city to generate a map without needing to painstakingly plot every route, as current navigation tools require. But if that map isn't accurate, any deviation from a route would cause AI-based navigation to underperform or fail.

To measure how accurately and coherently transformer LLMs understand real-world rules and environments, the researchers tested them using a class of problems called deterministic finite automata (DFAs). These are problems with a sequence of states, such as the rules of a game or the intersections along a route to a destination. In this case, the researchers used DFAs drawn from the board game Othello and from navigation through the streets of New York.
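To make the idea of a DFA concrete, here is a minimal sketch in Python. It assumes a made-up six-intersection street grid (the intersection names, directions and helper functions `run` and `legal_moves` are all hypothetical, not taken from the study): each state is an intersection, each input is a direction, and every (intersection, direction) pair leads to at most one next intersection. Closing a single street changes the automaton, which is the kind of detour the researchers introduced.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical toy street grid (not from the study). States are intersections,
# inputs are directions, and each (intersection, direction) pair leads to at
# most one next intersection, which is what makes the automaton deterministic.
Transitions = Dict[Tuple[str, str], str]

STREETS: Transitions = {
    ("A", "east"): "B", ("B", "east"): "C",
    ("A", "south"): "D", ("B", "south"): "E",
    ("D", "east"): "E", ("E", "east"): "F",
    ("C", "south"): "F",
}


def run(transitions: Transitions, start: str, route: List[str]) -> Optional[str]:
    """Follow a route move by move; return the final intersection, or None if a move is illegal."""
    state = start
    for move in route:
        if (state, move) not in transitions:
            return None  # no street in that direction from this intersection
        state = transitions[(state, move)]
    return state


def legal_moves(transitions: Transitions, state: str) -> Set[str]:
    """Directions that can legally be taken from an intersection."""
    return {move for (src, move) in transitions if src == state}


# Two different routes reach the same intersection.
print(run(STREETS, "A", ["east", "east", "south"]))  # F
print(run(STREETS, "A", ["south", "east", "east"]))  # F
print(sorted(legal_moves(STREETS, "A")))             # ['east', 'south']

# Closing one street (a "detour") changes the automaton, so a route that used it now fails.
closed = {k: v for k, v in STREETS.items() if k != ("B", "east")}
print(run(closed, "A", ["east", "east", "south"]))   # None
```

A model with an accurate map of this grid would know that the closed route is now impossible and that an alternative such as east, south, east still reaches F.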

To test the transformers with DFAs, the researchers looked at two metrics. The first was "sequence distinction," which assesses whether a transformer LLM has formed a coherent world model by checking whether it recognizes two different states of the same thing as different: two different Othello boards, or one map of a city with road closures and another without. The second metric was "sequence compression," which checks whether an LLM with a coherent world model understands that two identical states (say, two Othello boards that are exactly the same) have the same sequence of possible next steps. A sequence, in this case, is an ordered list of data points used to generate outputs.
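The two metrics can be illustrated with a deliberately simplified sketch. It is not the study's exact procedure: here we only compare the set of legal next moves a model predicts, and the toy street grid, the route list, and the "shortcut" model (which looks only at the last move, as if every trip began at intersection A) are all hypothetical. Compression asks whether routes ending at the same intersection get identical predictions; distinction asks whether routes ending at intersections with different legal moves get different predictions.

```python
from itertools import combinations
from typing import Callable, Dict, List, Optional, Set, Tuple

Transitions = Dict[Tuple[str, str], str]

# Hypothetical toy street grid used as the ground-truth DFA; every trip starts at "A".
STREETS: Transitions = {
    ("A", "east"): "B", ("B", "east"): "C",
    ("A", "south"): "D", ("B", "south"): "E",
    ("D", "east"): "E", ("E", "east"): "F",
    ("C", "south"): "F",
}
START = "A"


def run(route: List[str]) -> Optional[str]:
    """Return the intersection reached by following a route, or None if a move is illegal."""
    state = START
    for move in route:
        if (state, move) not in STREETS:
            return None
        state = STREETS[(state, move)]
    return state


def true_next(route: List[str]) -> Set[str]:
    """Ground truth: the legal directions from wherever the route ends."""
    state = run(route)
    return {move for (src, move) in STREETS if src == state}


def shortcut_model(route: List[str]) -> Set[str]:
    """Hypothetical flawed model: uses only the last move, as if the trip began at A."""
    return true_next(route[-1:])


def score(predict: Callable[[List[str]], Set[str]], routes: List[List[str]]) -> Tuple[float, float]:
    """Simplified (compression, distinction) scores over all pairs of valid routes."""
    comp_pass = comp_total = dist_pass = dist_total = 0
    for r1, r2 in combinations(routes, 2):
        if run(r1) == run(r2):
            # Same true state: a coherent model should predict the same next moves.
            comp_total += 1
            comp_pass += predict(r1) == predict(r2)
        elif true_next(r1) != true_next(r2):
            # Truly different options: a coherent model should tell them apart.
            dist_total += 1
            dist_pass += predict(r1) != predict(r2)
    return comp_pass / max(comp_total, 1), dist_pass / max(dist_total, 1)


ROUTES = [[], ["east"], ["south"], ["east", "east"], ["east", "south"],
          ["south", "east"], ["east", "south", "east"], ["south", "east", "east"],
          ["east", "east", "south"]]

print(tuple(round(x, 2) for x in score(true_next, ROUTES)))       # (1.0, 1.0)
print(tuple(round(x, 2) for x in score(shortcut_model, ROUTES)))  # (0.25, 0.48)
```

The shortcut model still proposes legal-looking moves for many routes, yet it scores poorly on both checks, echoing the study's point that plausible outputs do not by themselves imply a coherent map.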

Relying on LLMs is risky business

Two common classes of LLMs were tested on these metrics. One was trained on data generated from randomly produced sequences, the other on data generated by following strategic processes.

Transformers trained on random data formed a more accurate world model, the scientists found. This was possibly because the LLM saw a wider variety of possible steps. Lead author Keyon Vafa, a researcher at Harvard, explained in a statement: "In Othello, if you see two random computers playing rather than championship players, in theory you'd see the full set of possible moves, even the bad moves championship players wouldn't make." By seeing more of the possible moves, even bad ones, the LLMs were theoretically better prepared to adapt to random changes.
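Vafa's point about random play can be sketched as a coverage count. The example below is only an illustration under stated assumptions: it reuses a hypothetical toy street grid, treats a "strategic" traveler as one who always takes the same preferred turn, and measures what fraction of the grid's streets ever appear in 200 generated trips. It says nothing about how the study's models were actually trained.

```python
import random
from typing import Callable, Dict, List, Tuple

Transitions = Dict[Tuple[str, str], str]

# Hypothetical toy street grid; trips start at "A" and end when no move is legal.
STREETS: Transitions = {
    ("A", "east"): "B", ("B", "east"): "C",
    ("A", "south"): "D", ("B", "south"): "E",
    ("D", "east"): "E", ("E", "east"): "F",
    ("C", "south"): "F",
}


def legal_moves(state: str) -> List[str]:
    # Sorted so that both the strategic choice and the seeded random walk are reproducible.
    return sorted(move for (src, move) in STREETS if src == state)


def trip(choose: Callable[[List[str]], str]) -> List[Tuple[str, str]]:
    """Generate one trip as a list of (intersection, move) transitions."""
    state, taken = "A", []
    while True:
        moves = legal_moves(state)
        if not moves:
            return taken
        move = choose(moves)
        taken.append((state, move))
        state = STREETS[(state, move)]


def coverage(trips: List[List[Tuple[str, str]]]) -> float:
    """Fraction of the grid's streets that appear at least once in the trips."""
    observed = {t for tr in trips for t in tr}
    return len(observed) / len(STREETS)


rng = random.Random(0)
random_trips = [trip(rng.choice) for _ in range(200)]                 # random "players"
strategic_trips = [trip(lambda moves: moves[0]) for _ in range(200)]  # always the same preferred turn

print(coverage(random_trips))     # expected to be 1.0: every street shows up
print(coverage(strategic_trips))  # 3 of 7 streets, about 0.43
```

The strategic trips never use the southern streets at all, so a model trained only on them has no evidence about those streets when a detour forces it there.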

However, despite generating valid Othello moves and accurate directions, only one transformer generated a coherent world model for Othello, and neither type produced an accurate map of New York. When the researchers introduced things like detours, all the navigation models used by the LLMs failed.


"I was surprised by how quickly the performance deteriorated as soon as we added a detour. If we close just 1 percent of the possible streets, accuracy immediately plummets from nearly 100 percent to just 67 percent," Vafa added.

This shows that different approaches to using LLMs are needed to produce accurate world models, the researchers said. What these approaches could be isn't clear, but the findings highlight the fragility of transformer LLMs when faced with dynamic environments.

"Often, we see these models do impressive things and think they must have understood something about the world," Rambachan concluded. "I hope we can convince people that this is a question to think very carefully about, and we don't have to rely on our own intuitions to answer it."
