
Yoshua Bengio is one of the most-cited researchers in artificial intelligence (AI). A pioneer in creating artificial neural networks and deep learning algorithms, Bengio, along with Meta chief AI scientist Yann LeCun and former Google AI researcher Geoffrey Hinton, received the 2018 Turing Award (known as the "Nobel" of computer science) for their key contributions to the field.

Yet now Bengio, often referred to alongside his fellow Turing Award winners as one of the "godfathers" of AI, is disturbed by the pace of his technology’s development and adoption. He believes that AI could damage the fabric of society and carries unanticipated risks to humans. Now he is the chair of the International Scientific Report on the Safety of Advanced AI, an advisory panel backed by 30 nations, the European Union, and the United Nations.

Yoshua Bengio at the All In event in Montreal, Quebec, Canada, on Wednesday, Sept. 27, 2023.

Live Science spoke with Bengio via video call at the HowTheLightGetsIn Festival in London, where he discussed the possibility of machine consciousness and the risks of the fledgling technology. Here’s what he had to say.

Ben Turner: You played an incredibly significant role in developing artificial neural networks, but now you’ve called for a moratorium on their development and are researching ways to regulate them. What made you ask for a pause on your life’s work?

Yoshua Bengio: It is difficult to go against your own church, but if you think rationally about things, there’s no way to deny the possibility of catastrophic outcomes when we reach a certain level of AI. The reason why I pivoted is because before that moment, I understood that there are scenarios that are bad, but I thought we’d figure it out.

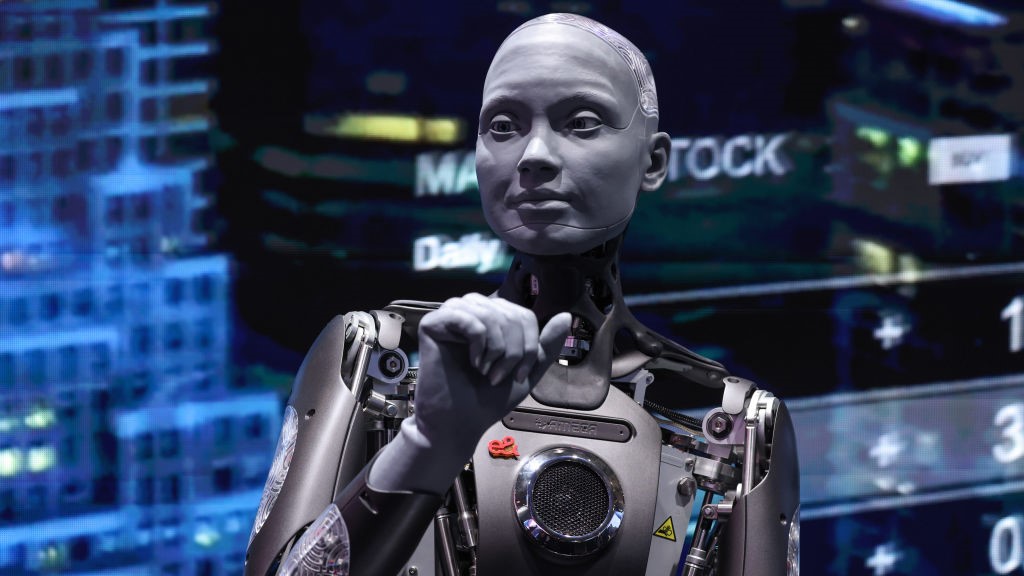

An artificial intelligence powered Ameca robot that looks uncannily human.

But it was thinking about my children and their future that made me decide I had to act differently and do whatever I could to mitigate the risks.

BT: Do you feel some responsibility for mitigating their worst impacts? Is it something that weighs on you?

YB: Yeah, I do. I feel a duty because my voice has some impact due to the recognition I get for my scientific work, and so I feel I need to speak up. The other reason I’m engaged is because there are important technical solutions that are part of the bigger political solution if we’re going to figure out how to not harm people with AI’s construction.

An Amazon Web Services data center in Stone Ridge, Virginia, US, on Sunday, July 28, 2024.

Companies would be happy to include these technical solutions, but right now we don’t know how to do it. They still want to get the quadrillions of profits predicted from AI reaching a human level, so we’re in a bad position, and we need to find scientific answers.

One image that I use a lot is that it’s like all of humanity is driving on a road that we don’t know very well and there’s a fog in front of us. We’re going towards that fog, we could be on a mountain road, and there may be a very dangerous pass that we cannot see clearly enough.

So what do we do? Do we continue racing ahead hoping that it’s all gonna be fine, or do we try to come up with technological solutions? The political solution says to apply the precautionary principle: slow down if you’re not sure. The technical solution says we should come up with ways to peer through the fog and maybe equip the vehicle with safeguards.

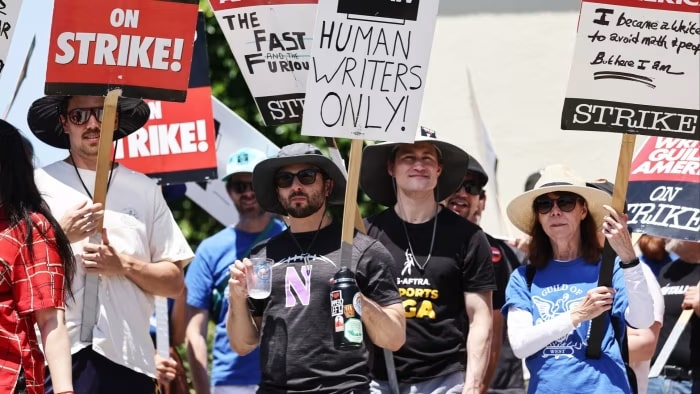

Writers from the SAG-AFTRA screen actors guild protest in Los Angeles in 2023. The strike broke out over pay, but the refusal of studios such as Netflix and Disney to rule out artificial intelligence replacing human writers became a focal point for the protestors.

BT: So what are the greatest risks that machine learning poses, in the short and the long term, to humanity?

YB: People always say these risks are science fiction, but they’re not. In the short term, we already see AI being used in the U.S. election campaign, and it’s just going to get a lot worse. There was a recent study that showed that ChatGPT-4 is a lot better than humans at persuasion, and that’s just ChatGPT-4; the new version is gonna be worse.

There have also been tests of how these systems can help terrorists. The recent ChatGPT o1 has shifted that risk from low risk to medium risk.

If you look further down the road, when we reach the level of superintelligence there are two major risks. The first is the loss of human control: if superintelligent machines have a self-preservation objective, their goal could be to destroy humanity so we couldn’t turn them off.

The other danger, if the first scenario somehow doesn’t happen, is in humans using the power of AI to take control of humanity in a worldwide dictatorship. You can have milder versions of that and it can exist on a spectrum, but the technology is going to give huge power to whoever controls it.

BT: The EU has issued an AI Act, and so did Biden with his Executive Order on AI. How well are governments responding to these risks? Are their responses steps in the right direction or off the mark?

YB: I think they’re steps in the right direction. So for example Biden’s executive order was as much as the White House could do at that stage, but it doesn’t have the impact, it doesn’t force companies to share the results of their tests or even do those tests.

We need to get rid of that voluntary element. Companies actually have to have safety plans, disclose the results of their tests, and if they don’t follow the state-of-the-art in protecting the public they could be sued. I think that’s the best proposal in terms of legislation out there.

BT: At the time of speaking, neural networks have a load of impressive applications. But they still have issues: they struggle with unsupervised learning and they don’t adapt well to situations that show up rarely in their training data, which they need to consume staggering amounts of. Surely, as we’ve seen with self-driving cars, these faults also produce risks of their own?

YB: First off, I want to correct something you’ve said in this question: they’re very good at unsupervised learning, basically that’s how they’re trained. They’re trained in an unsupervised way, just eating up all the data you’re giving them and trying to make sense of it. That’s unsupervised learning. That’s called pre-training: before you even give them a task you make them make sense of all the data they can.

As to how much data they need, yes, they need a lot more data than humans do, but there’s arguments that evolution needed a lot more data than that to come up with the specifics of what’s in our brains. So it’s hard to make comparisons.

I think there’s room for improvement as to how much data they need. The important point from a policy perspective is that we’ve made huge progress. There’s still a huge gap between human intelligence and their abilities, but it’s not clear how far we are from bridging that gap. Policy should be preparing for the case where it can be quick, in the next five years or so.

BT: On this data question, GPT models have been shown to undergo model collapse when they consume enough of their own content. I know we’ve spoken about the danger of AI becoming superintelligent and going rogue, but what about a slightly more farcical dystopian possibility: we become dependent on AI, it strips industries of jobs, it degrades and collapses, and then we’re left picking up the pieces?

YB: Yeah, I’m not worried about the issue of collapse from the data they generate. If you go for [feeding the systems] synthetic data and you do it smartly, in a way that people understand. You’re not gonna just ask these systems to generate data and train on it; that’s meaningless from a machine-learning perspective.

With synthetic data you can make it play against itself, and it generates synthetic data that helps it make better decisions. So I’m not afraid of that.

We can, however, build machines that have side effects that we don’t anticipate. Once we’re dependent on them, it’s going to be hard to pull the plug, and we might even lose control. If these machines are everywhere, and they control a lot of aspects of society, and they have bad intentions …

There are deception abilities [in those systems]. There’s a lot of unknown unknowns that could be very, very bad. So we need to be careful.

BT: The Guardian recently reported that data center emissions by Google, Apple, Meta and Microsoft are likely 662% higher than they claim. Bloomberg has also reported that AI data centers are driving a resurgence in fossil fuel infrastructure in the U.S. Could the real near-term risk of AI be the irreversible damage we’re causing to the climate while we develop it?

YB: Yeah, totally, it’s a big issue. I wouldn’t say it’s on par with major political disruption caused by AI in terms of its risks for human extinction, but it is something very serious.

If you look at the data, the amount of electricity needed to train the biggest models grows exponentially each year. That’s because researchers find that the bigger you make models, the smarter they are and the more money they make.

The important thing here is that the economic value of that intelligence is going to be so great that paying 10 times the price of that electricity is no object for those in that race. What that means is that we are all going to pay more for electricity.

If we follow where the trends are going, unless something changes, a large fraction of the electricity being generated on the planet is going to go into training these models. And, of course, it can’t all come from renewable energy; it’s going to be because we’re pulling out more fossil fuels from the ground. This is bad, and it’s yet another reason why we should be slowing down, but it’s not the only one.

BT: Some AI researchers have voiced concerns about the danger of machines achieving artificial general intelligence (AGI), a bit of a controversial buzzword in this field. Yet others such as Thomas Dietterich have said that the concept is unscientific, and people should be embarrassed to use the term. Where do you fall in this debate?

YB: I think it’s quite scientific to talk about capabilities in certain domains. That’s what we do, we do benchmarks all the time and evaluate specific capabilities.

Where it gets dicey is when we ask what it all means [in terms of general intelligence]. But I think it’s the wrong question. My question is, within machines that are smart enough that they have specific capabilities, what could make them dangerous to us? Could they be used for cyber attacks? Designing biological weapons? Persuading people? Do they have the ability to copy themselves on other machines or the internet contrary to the wishes of their developers?

All of these are bad, and it’s enough that these AIs have a subset of these capabilities to be really dangerous. There’s nothing fuzzy about this, people are already building benchmarks for these abilities because we don’t want machines to have them. The AI Safety Institutes in the U.K. and the U.S. are working on these things and are testing the models.

BT: We touched on this earlier, but how satisfied are you with the work of scientists and politicians in addressing the risks? Are you happy with your and their efforts, or are we still on a very dangerous path?

YB: I don’t think we’ve done what it takes yet in terms of mitigating risk. There’s been a lot of global conversation, a lot of legislative proposals, the UN is starting to think about international treaties, but we need to go much further.

We’ve made a lot of progress in terms of raising awareness and better understanding risks, and with politicians thinking about legislation, but we’re not there yet. We’re not at the stage where we can protect ourselves from catastrophic risks.

In the past six months, there’s also now a counterforce [pushing back against regulatory progress], very strong lobbies coming from a small minority of people who have a lot of power and money and don’t want the public to have any oversight on what they’re doing with AI.

There’s a conflict of interest between those who are building these machines, expecting to make tons of money and competing against each other, and the public. We need to manage that conflict, just like we’ve done for tobacco, like we haven’t managed to do with fossil fuels. We can’t just let the forces of the market be the only force driving forward how we develop AI.

BT: It’s ironic: if it was just handed over to market forces, we’d in a way be tying our futures to an already very destructive algorithm.

YB: Yes, exactly.

BT: You mentioned the lobbying groups pushing to keep machine learning unregulated. What are their main arguments?

YB: One argument is that it’s going to slow down innovation. But is there a race to transform the world as fast as possible? No, we want to make it better. If that means taking the right steps to protect the public, like we’ve done in many other sectors, it’s not a good argument. It’s not that we’re going to stop innovation; you can direct efforts in directions that build tools that will definitely help the economy and the well-being of people. So it’s a false argument.

We have regulation on almost everything, from your sandwich, to your car, to the planes you take. Before we had regulation we had orders of magnitude more accidents. It’s the same with pharmaceuticals. We can have technology that’s helpful and regulated; that is the thing that’s worked for us.

The second argument is that if the West slows down because we want to be cautious, then China is going to leap forward and use the technology against us. That’s a real concern, but the solution isn’t to just accelerate as well without caution, because that presents the problem of an arms race.

The solution is a middle ground, where we talk to the Chinese and we come to an understanding that’s in our mutual interest in avoiding major catastrophes. We sign treaties and we work on verification technologies so we can trust each other that we’re not doing anything dangerous. That’s what we need to do so we can both be cautious and move together for the well-being of the planet.

What’s more? The next festival returns to Hay from 23 - 26 May 2025, following the theme 'Navigating the Unknown'. For more details and information about early bird tickets, head over to their website.