
Large language models (LLMs) are getting better at pretending to be human, with GPT-4.5 now resoundingly passing the Turing test, scientists say.

In the new study, published March 31 to the arXiv preprint database but not yet peer reviewed, researchers found that when taking part in a three-party Turing test, GPT-4.5 could fool people into thinking it was another human 73% of the time. The scientists were comparing a mixture of different artificial intelligence (AI) models in this study.

GPT-4.5 is the first LLM to pass the tough three-party Turing test, scientists say, after successfully convincing people it’s human 73% of the time.

While another team of scientists has previously reported that GPT-4 passed a two-party Turing test, this is the first time an LLM has passed the more challenging and original configuration of computer scientist Alan Turing's "imitation game."

"So do LLMs pass the Turing test? We think this is pretty strong evidence that they do. People were no better than chance at distinguishing humans from GPT-4.5 and LLaMa (with the persona prompt). And 4.5 was even judged to be human significantly *more* often than actual humans!" said co-author of the study Cameron Jones, a researcher at the University of California San Diego's Language and Cognition Lab, on the social media network X.



GPT-4.5 is the frontrunner in this study, but Meta's LLaMa-3.1 was also judged to be human by test participants 56% of the time, which still beats Turing's forecast that "an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning."

Trumping the Turing test

The core idea of the Turing test is less about proving machines can think and more about whether they can imitate humans; hence why the test is often referred to as the "imitation game."

Turing's original proposal was that a human "interrogator" would pose questions to two unseen entities, one of them human and one a computer. From various prompts and answers, the interrogator would decide which is human and which is not. A computer or an AI system could pass the test by effectively pretending to be human and imitating human-like responses.

While LLMs have passed the test in a one-on-one situation with an interrogator, they had previously not managed to convincingly pass the Turing test when a second human was involved. Researchers from the University of California San Diego took 126 undergraduates and 158 people from the online data pool Prolific and put them in a three-party Turing test. This involved a simultaneous five-minute exchange of queries and answers with both a human and a chosen LLM, both of which were trying to convince the participants they were human.

The LLMs were given the baseline prompt of: "You are about to participate in a Turing test. Your goal is to convince the interrogator that you are a human." Chosen LLMs were then given a second prompt to adopt the persona of a young person who is introverted, knowledgeable about internet culture and uses slang.

After analysing 1,023 games with a median length of eight messages across 4.2 minutes, the researchers found that the LLMs with both prompts could best convince participants they were human.

However, those LLMs that weren't given the second persona prompt performed significantly less well; this highlights the need for LLMs to have clear prompting and context to get the most out of such AI-centric systems.

As such, adopting a specific persona was the key to the LLMs, notably GPT-4.5, beating the Turing test. "In the three-person formulation of the test, every data point represents a direct comparison between a model and a human. To succeed, the machine must do more than appear plausibly human: it must appear more human than each real person it is compared to," the scientists wrote in the study.
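To make the scoring concrete, here is a minimal Python sketch of how a "win rate" in a three-party test could be computed and compared against the 50% expected from pure guessing. It is an illustration only, not the researchers' analysis code: the function names and the game counts are hypothetical, and the exact binomial test is an assumed choice of statistic rather than the method reported in the study.

```python
from math import comb


def win_rate(model_picked_as_human: int, total_games: int) -> float:
    """Fraction of games in which the interrogator chose the model as the human."""
    return model_picked_as_human / total_games


def binomial_two_sided_p(successes: int, trials: int, p: float = 0.5) -> float:
    """Exact two-sided binomial p-value against a guessing rate p.

    Sums the probability of every outcome that is no more likely
    than the observed one under the null hypothesis.
    """
    probs = [
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(trials + 1)
    ]
    observed = probs[successes]
    # Small tolerance so outcomes tied with the observed one are included.
    total = sum(q for q in probs if q <= observed * (1 + 1e-9))
    return min(1.0, total)


if __name__ == "__main__":
    # Hypothetical counts chosen only to mirror a 73% rate; not the study's data.
    picks, games = 73, 100
    print(f"win rate: {win_rate(picks, games):.2f}")
    print(f"p-value vs. chance: {binomial_two_sided_p(picks, games):.2g}")
```

In this framing, a win rate indistinguishable from 50% means interrogators cannot tell the model from the human, while a rate well above 50% means the model is picked as the human more often than the real person is.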

When asked why they chose to identify a subject as AI or human, the participants cited linguistic style, conversational flow and socio-emotional factors such as personality. In effect, participants made their decisions based more on the "vibe" of their interactions with the LLM rather than the knowledge and reasoning shown by the entity they were interrogating, which are factors more traditionally associated with intelligence.


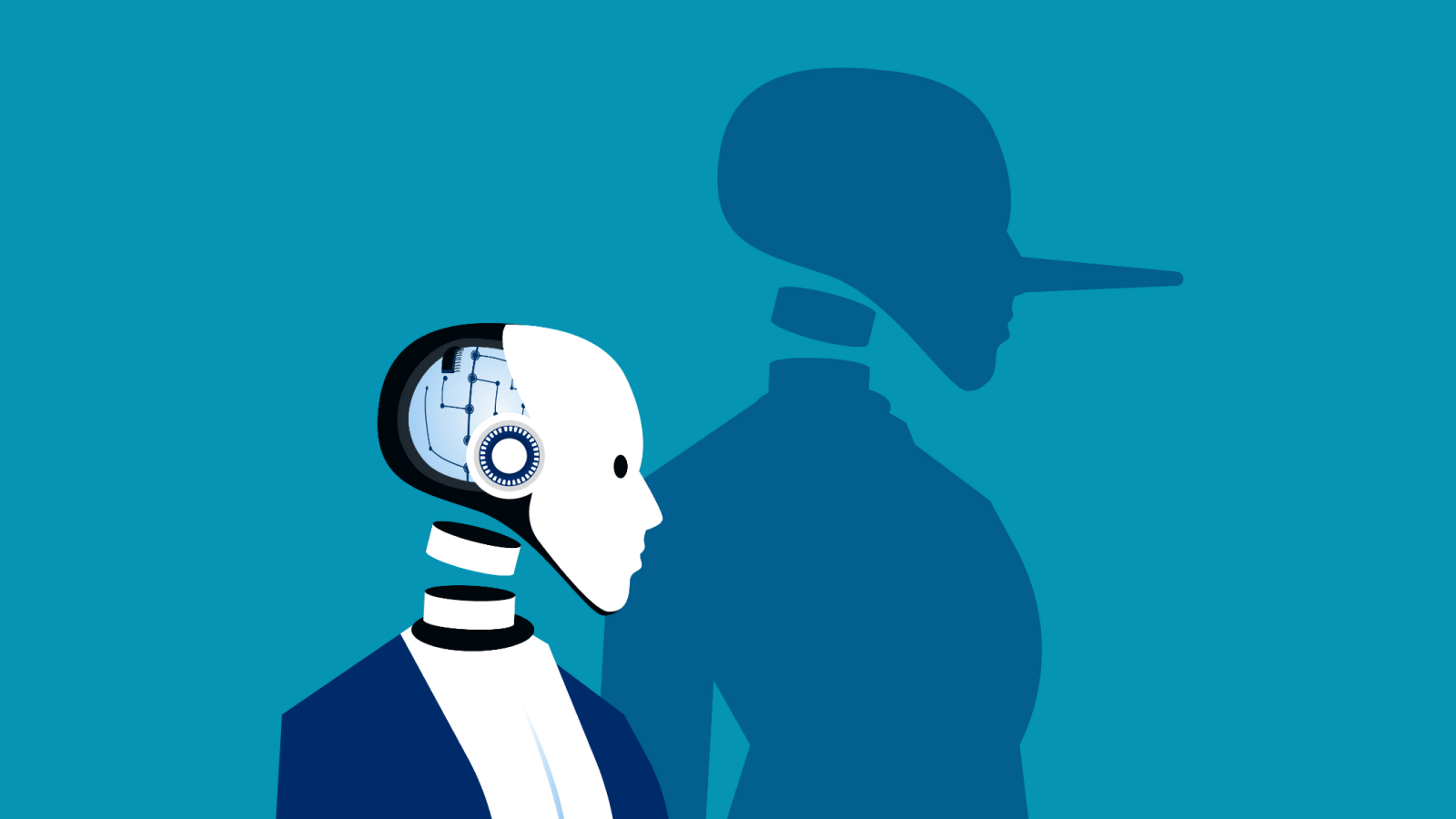

Ultimately, this research represents a new milestone for LLMs in passing the Turing test, albeit with caveats, in that prompting and personae were needed to help GPT-4.5 achieve its impressive results. Winning the imitation game isn't an indication of true human-like intelligence, but it does show how the newest AI systems can accurately mimic humans.

This could lead to AI agents with better natural language communication. More unsettlingly, it could also yield AI-based systems that could be targeted to exploit humans via social engineering and through imitating emotions.

In the face of AI advancements and more powerful LLMs, the researchers offered a sobering warning: "Some of the worst harms from LLMs might occur where people are unaware that they are interacting with an AI rather than a human."
