Google doesn’t have the best track record when it comes to image-generating AI.

In February, the image generator built into Gemini, Google’s AI-powered chatbot, was found to be randomly injecting gender and racial diversity into prompts about people. This produced images of racially diverse Nazis, among other offensive inaccuracies.

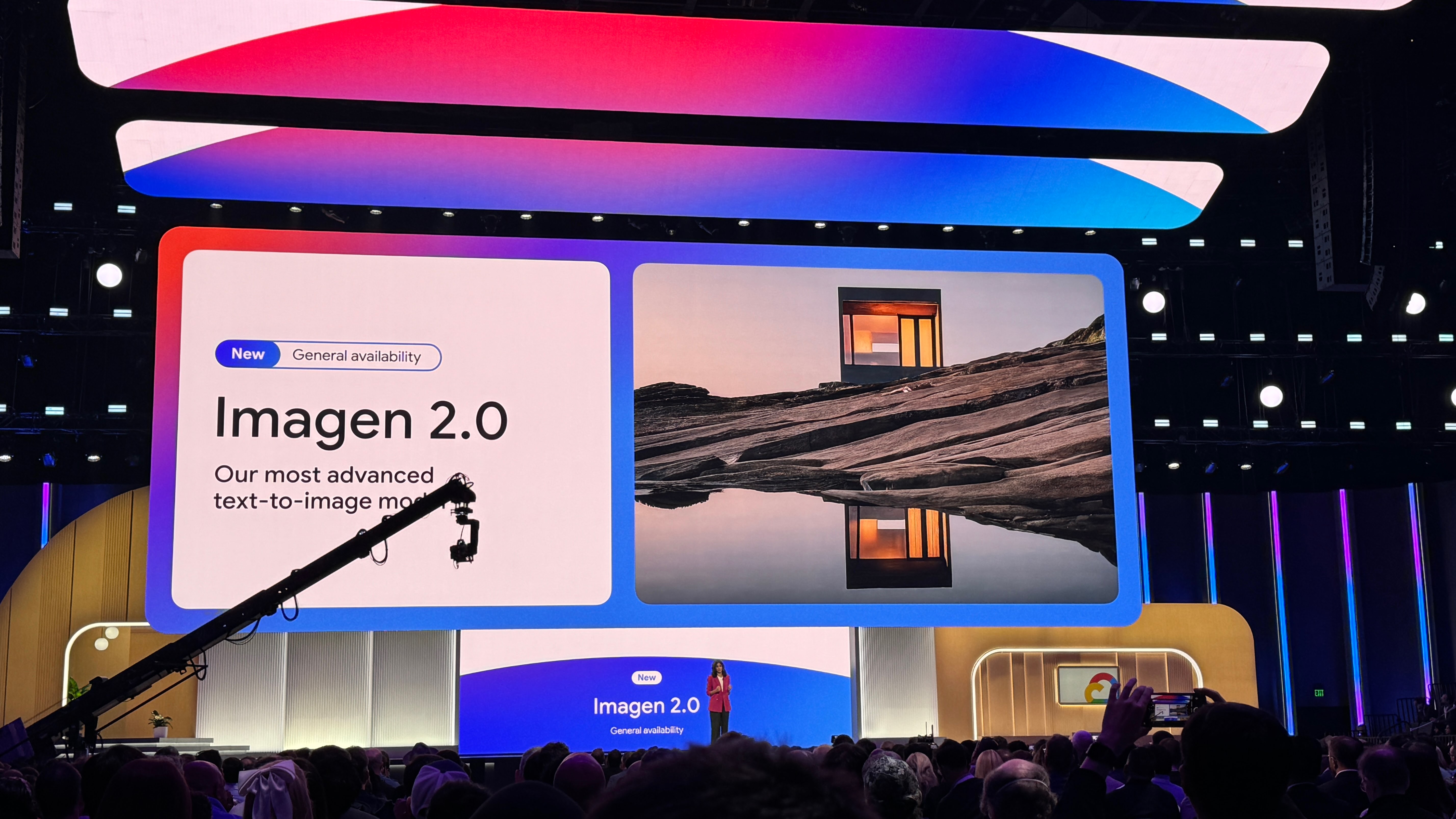

Google pulled the generator and vowed to improve it and eventually re-release it. As we await its return, the company is launching an enhanced image-generating tool, Imagen 2, inside its Vertex AI developer platform. This tool, however, has a decidedly more enterprise bent.

Imagen 2, which is actually a family of models, launched in December after being previewed at Google’s I/O conference in May 2023. Like OpenAI’s DALL-E and Midjourney, it can create and edit images from a text prompt. Of interest to corporate types, Imagen 2 can render text, emblems and logos in multiple languages, and it can optionally overlay those elements on existing images, such as business cards, apparel and products.

Image editing with Imagen 2 launched first in preview and is now generally available in Vertex AI. It comes with two new capabilities: inpainting and outpainting. Other popular image generators such as DALL-E have offered these features for some time. They can be used to remove unwanted parts of an image, add new components and expand the borders of an image to create a wider field of view.

But the real meat of the Imagen 2 upgrade is what Google is calling “text-to-live images.”

Imagen 2 can now create short, four-second videos from text prompts, along the lines of AI-powered clip generation tools like Runway, Pika and Irreverent Labs. True to Imagen 2’s corporate focus, Google is pitching live images as a tool for marketers and creatives. One example is a GIF generator for ads showing nature, food and animals, which is the subject matter Imagen 2 was fine-tuned on.

Google says that live images can capture “a range of camera angles and motions” while “supporting consistency over the entire sequence.” For now, though, they are low resolution: 360 pixels by 640 pixels. Google is pledging that this will improve in the future.

Google is trying to allay (or at least attempting to allay) concerns about the potential to create deepfakes. It says that Imagen 2 will employ SynthID, an approach developed by Google DeepMind, to apply invisible, cryptographic watermarks to live images. Google claims these watermarks are resilient to edits, including compression, filters and color tone adjustments. Of course, detecting them requires a Google-provided tool that isn’t available to third parties.

No doubt eager to avoid another generative media controversy, Google is emphasizing that live image generations will be “filtered for safety.” A spokesperson told TechCrunch via email: “The Imagen 2 model in Vertex AI has not experienced the same issues as the Gemini app. We continue to test extensively and engage with our customers.”

Let’s generously assume for a moment that Google’s watermarking tech, bias mitigations and filters are as effective as it claims. Are live images even competitive with the video generation tools already out there?

Not really .

Runway can generate 18-second clips in much higher resolutions. Stability AI’s video clip tool, Stable Video Diffusion, offers greater customizability in terms of frame rate. And OpenAI’s Sora appears poised to blow away the competition with the photorealism it can achieve, although it isn’t commercially available yet.

So what are the real technical advantages of live images? I’m not really sure. And I don’t think I’m being too harsh.

After all, Google is behind genuinely impressive video generation tech like Imagen Video and Phenaki. Phenaki is one of Google’s more interesting experiments in text-to-video. It turns long, detailed prompts into “movies” of two minutes or more, with the caveat that the clips are low resolution, low frame rate and only somewhat coherent.

Recent reports suggest that the generative AI revolution caught Google CEO Sundar Pichai off guard and that the company is still struggling to keep pace with rivals. In light of those reports, it’s not surprising that a product like live images feels like an also-ran. But it’s disappointing nonetheless. I can’t shake the feeling that there is, or was, a more impressive product lurking in Google’s skunkworks.

Models like Imagen are trained on an enormous number of examples, usually sourced from public sites and datasets around the web. Many generative AI vendors see training data as a competitive advantage, so they keep the data and information about it close to the chest. Training data details are also a potential source of IP-related lawsuits, which is another disincentive to reveal much.

As I always do with announcements about generative AI models, I asked about the data used to train the updated Imagen 2. I also asked whether creators whose work might have been swept up in the model training process will be able to opt out at some future point.

Google told me only that its models are trained “primarily” on public web data, drawn from “blog posts, media transcripts and public conversation forums.” Which blogs, transcripts and forums? It’s anyone’s guess.

A spokesperson pointed to Google’s web publisher controls, which allow webmasters to prevent the company from scraping data, including photos and artwork, from their websites. But Google wouldn’t commit to releasing an opt-out tool, or to compensating creators for their (unknowing) contributions. Many of its competitors, including OpenAI, Stability AI and Adobe, have taken one of those steps.

Another point worth mentioning: text-to-live images isn’t covered by Google’s generative AI indemnification policy. That policy protects Vertex AI customers from copyright claims related to Google’s use of training data and to the outputs of its generative AI models. The reason for the exclusion is that text-to-live images is technically in preview, and the policy only covers generative AI products in general availability (GA).

Regurgitation happens when a generative model spits out a mirror copy of an example it was trained on, such as an image. It is rightly a concern for corporate customers. Both informal and academic studies have shown that the first-gen Imagen wasn’t immune to this. When prompted in particular ways, it spat out identifiable photos of people, artists’ copyrighted works and more.

Barring controversies, technical issues or some other major unforeseen setback, text-to-live images will enter GA somewhere down the line. But with live images as they exist today, Google is essentially saying: use at your own risk.