
Image Credits: Fuzzy Door


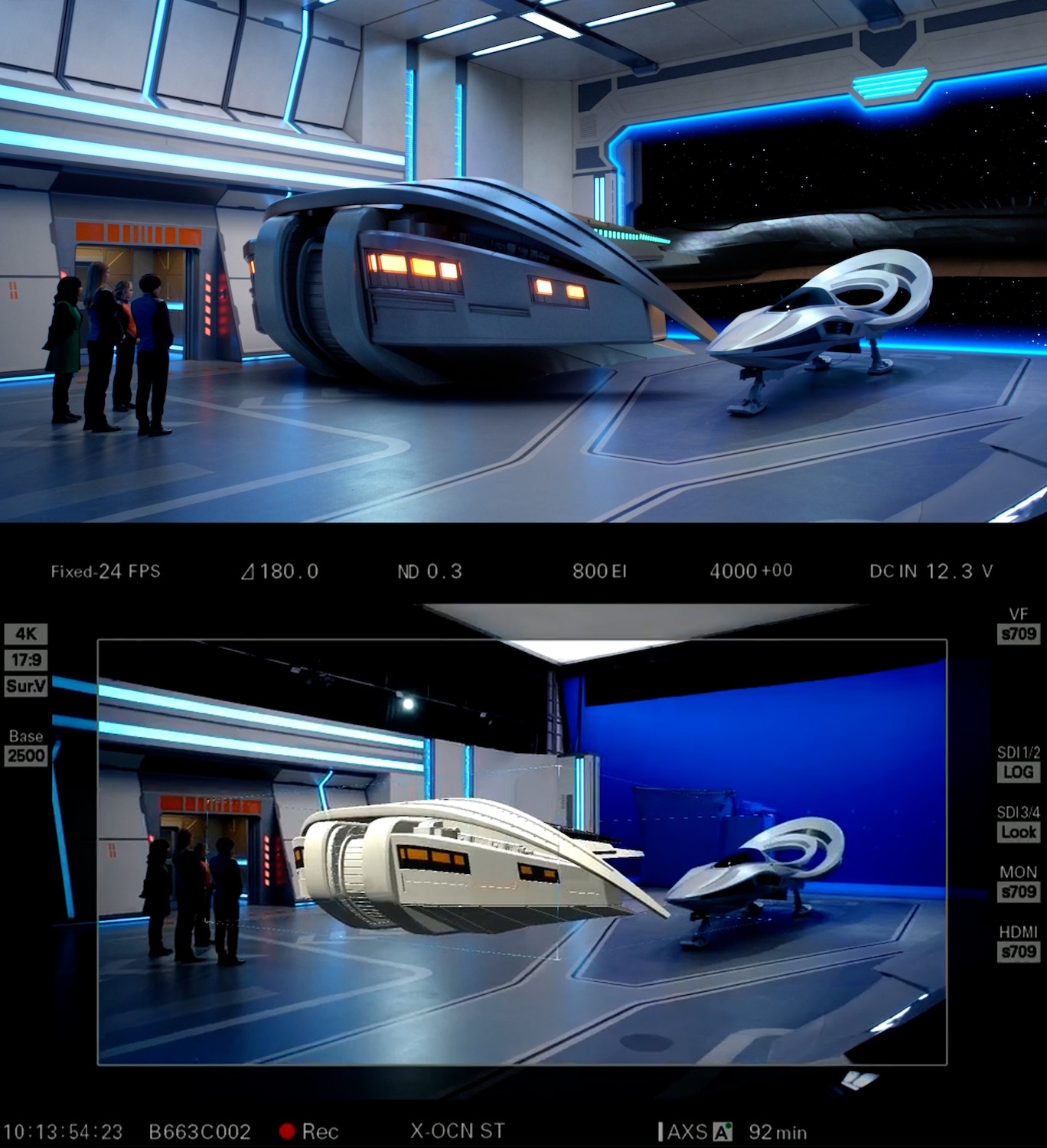


An example of ViewScreen Studio in action, with a live on-set image at bottom and the final shot up top. Image Credits: Fuzzy Door Tech


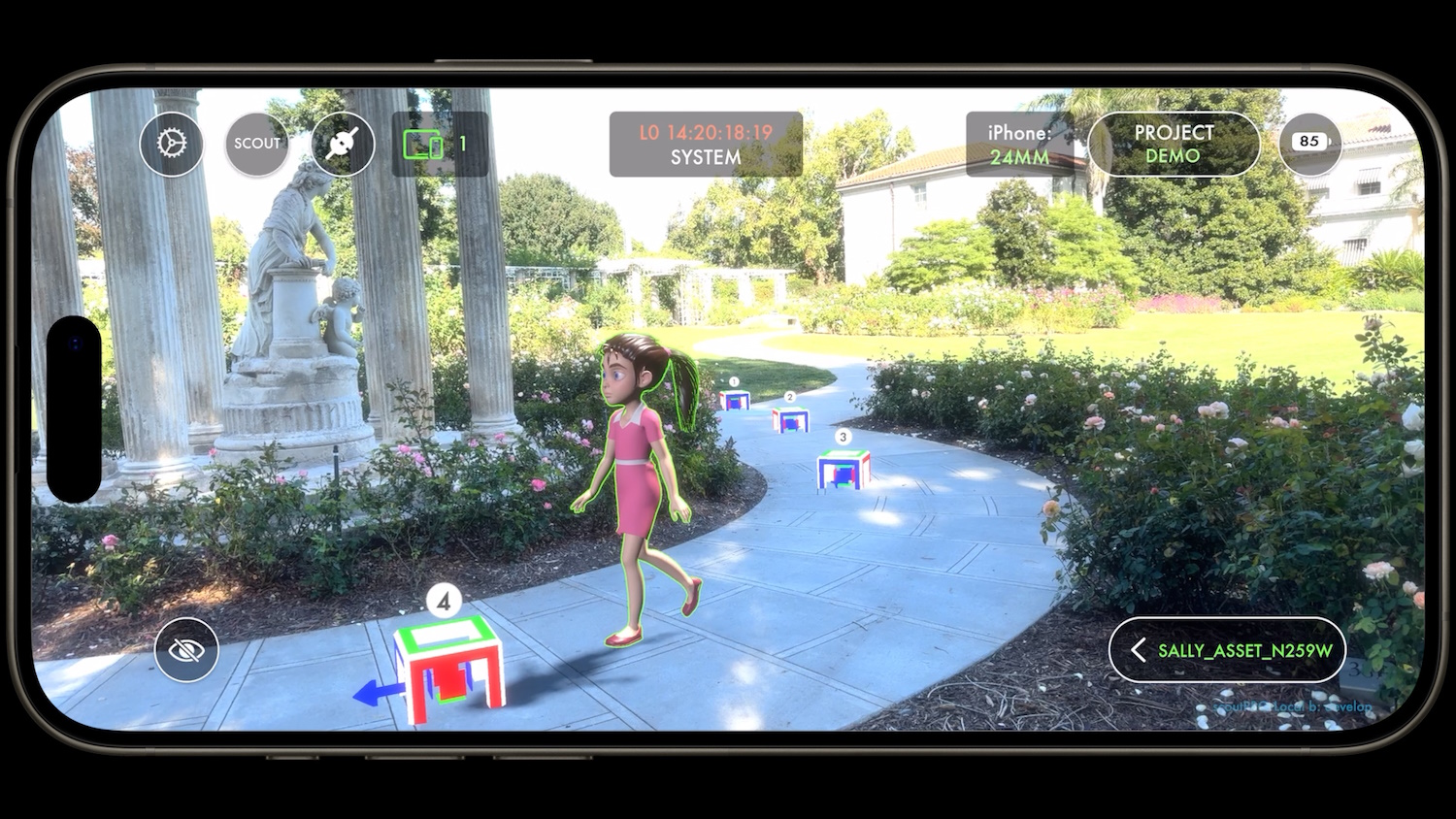

Example assets in a shot with a virtual character: the model of the girl will walk between the waypoints, which correspond to real space. Image Credits: Fuzzy Door Tech


Practically every TV and film production uses CG these days, but a show with a fully digital character takes it to another level. Seth MacFarlane’s “Ted” is one of those, and the tech division of his production company Fuzzy Door has built a suite of on-set augmented reality tools called ViewScreen, turning this potentially awkward process into an opportunity for collaboration and improvisation.

Working with a CG character or environment is tough for both actors and crew. Imagine talking to an empty place marker while someone does dialogue off-camera, or pretending a tennis ball on a stick is a shuttlecraft descending into the landing bay. Until the whole production takes place in a holodeck, these CG assets will remain invisible, but ViewScreen at least lets everyone work with them in-camera, in real time.

“It dramatically improves the creative process and we’re able to get the shots we need much more quickly,” MacFarlane told TechCrunch.

The usual process for shooting with CG assets takes place almost entirely after the cameras are off. You shoot the scene with a stand-in for the character, be it a tennis ball or a mocap-rigged performer, and give actors and camera operators marks and framing for how you expect it to play out. Then you send your footage to the VFX people, who return a rough cut, which then must be adjusted to taste or redone. It’s an iterative, traditionally executed process that leaves little room for spontaneity and often makes these shoots tedious and complex.

“Basically, this came from my need as the VFX supervisor to show the invisible thing that everybody’s supposed to be interacting with,” said Brandon Fayette, co-founder of Fuzzy Door Tech, a division of the production company. “It’s darn hard to film things that have digital elements, because they’re not there. It’s hard for the director, the camera operator has trouble framing, the gaffers, the lighting people can’t get the lighting to work properly on the digital element. Imagine if you could actually see the imaginary things on the set, on the day.”

You might say, “I can do that with my iPhone right now. Ever heard of ARKit?” But although the technology involved is similar (and in fact ViewScreen does use iPhones), the difference is that one is a toy, the other a tool. Sure, you could drop a virtual character onto a set in your AR app. But the real cameras don’t see it; the on-set monitors don’t show it; the voice actor doesn’t sync with it; the VFX crew can’t base final shots on it; and so on. It’s not a question of putting a digital character in a scene, but of doing so while integrating with modern production standards.

ViewScreen Studio wirelessly syncs between multiple cameras (real ones, like Sony’s Venice line) and can integrate multiple streams of data simultaneously via a central 3D compositing and positioning box. They call it ProVis, or production visualization, a middle ground between pre and post.


For a shot in “Ted,” for instance, two cameras might have the wide and close shots of the bear, which is being controlled by someone on set with a gamepad or iPhone. His voice and gestures are being done by MacFarlane live, while a behavioral AI keeps the character’s positions and gaze on target. Fayette demonstrated it for me live on a small scale, placing an animated version of Ted next to himself that included live face capture and free movement.

Meanwhile, the cameras and computer are laying down clean footage, clean VFX and a live composite both in-viewfinder and on monitors everyone can see, all timecoded and ready for the rest of the production process.

Assets can be given new instructions or attributes live, like waypoints or lighting. A virtual camera can be walked through the scene, letting alternative shots and scenarios occur naturally. A path can be shown only in the viewfinder of a moving camera so the operator can plan their shot.

What if the director decides the titular stuffed bear Ted should hop off the couch and walk around? Or what if they want to try out a more dynamic camera movement to highlight an alien landscape in “The Orville”? That’s just not something that you could do in the pre-baked process normally used for this stuff.

Of course, virtual productions on LED volumes address some of these issues, but you run into the same things. You get creative freedom with dynamic backgrounds and lighting, but much of a scene actually has to be locked in tightly due to the constraints of how these giant sets work.

“Just to do one setup for ‘The Orville’ of a shuttle landing would be about seven takes and would take 15-20 minutes. Now we’re getting them in two takes, and it’s three minutes,” said Fayette. “We found ourselves not only having shorter days, but trying new things. We can play a little. It helps take the technical out of it and allows the creative to take over … the technical will always be there, but when you have the creatives create, the quality of the shots gets so much more advanced and fun. And it makes people feel more like the characters are real. We’re not staring at a vacuum.”

It’s not just theoretical, either: he said they shot “Ted” this way, “the entire production, for about 3,000 shots.” Traditional VFX artists take over eventually for final-quality effects, but they’re not being tapped every few hours to render some new variant that might go straight in the trash.

If you’re in the business, you might want to know about the four specific modules of the Studio product, straight from Fuzzy Door Tech:

ViewScreen also has a mobile app, Scout, for doing a similar thing on location. This is closer to your average AR app, but again includes the kind of metadata and tools that you’d want if you were planning a location shoot.

“When we were scouting for The Orville, we used ViewScreen Scout to visualize how a starship or character would look on location. Our VFX Supervisor would text shots to me and I’d give feedback right away. In the past, this could have taken weeks,” said MacFarlane.

Bringing in official assets and animating them while on a scout cuts time and costs like crazy, Fayette said. “The director, photographer, [assistant director], can all see the same thing, we can import and change things live. For The Orville we had to put this creature moving around in the background, and we could bring in the animation right into Scout and be like, ‘OK that’s a little too fast, maybe we need a crane.’ It allows us to find answers to scouting problems very quickly.”

Fuzzy Door Tech is officially making its tools available today, but it has already worked with a few studios and productions. “The way we sell them, it’s all custom,” explained Faith Sedlin, the company’s president. “Every show has different needs, so we partner with studios, read their scripts. Sometimes they care more about the set than they do about the characters, but if it’s digital, we can do it.”