A new approach to computing could double the processing speed of devices like phones or laptops without the need to replace any of the existing components.

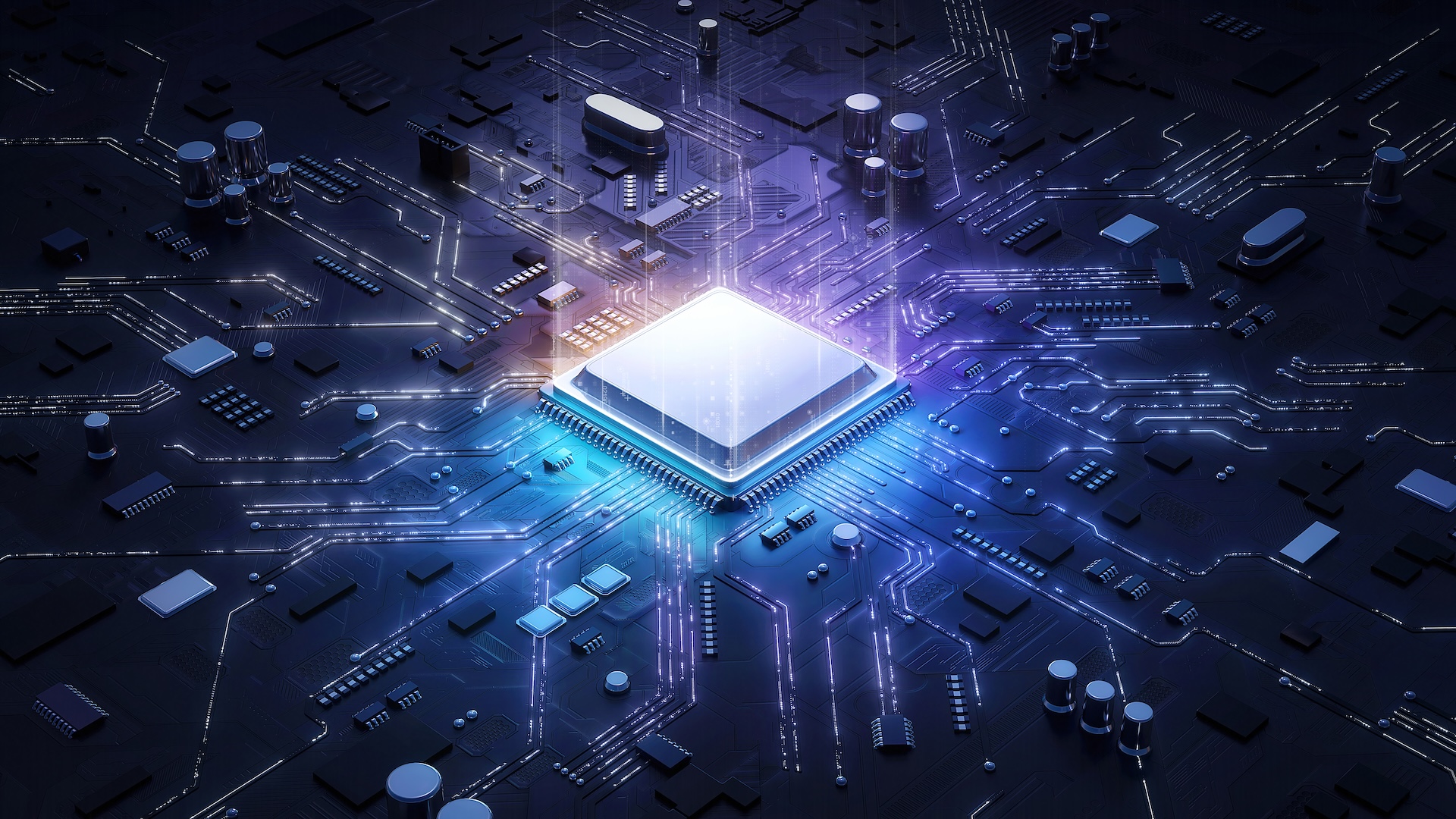

Modern devices are fitted with different chips that handle various types of processing. Alongside the central processing unit (CPU), devices have graphics processing units (GPUs), hardware accelerators for artificial intelligence (AI) workloads and digital signal processing units to process audio signals.

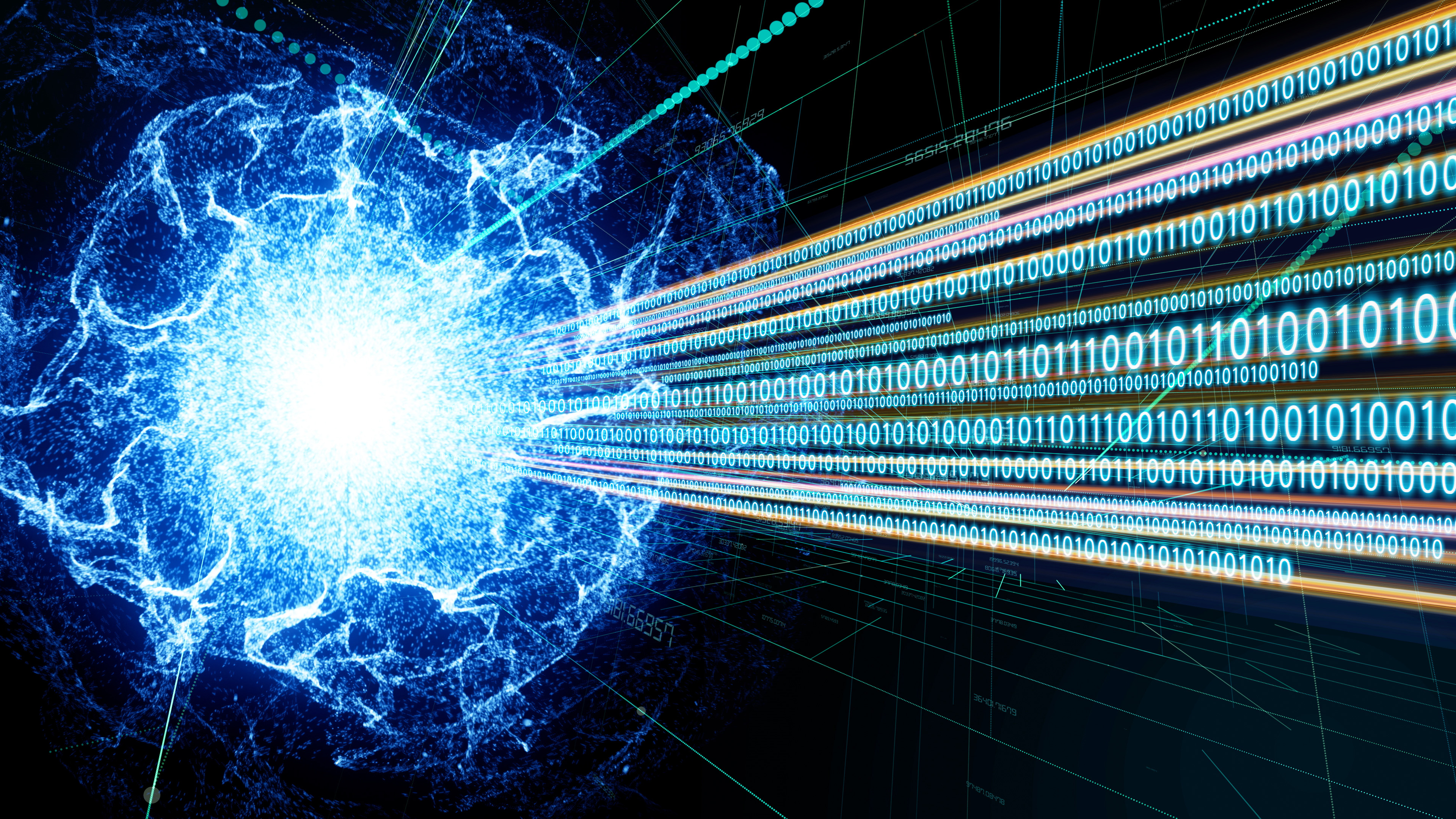

Due to conventional program execution models, however, these components process data from one program separately and in sequence, which slows down processing times.

Information moves from one unit to the next depending on which is most efficient at handling a particular region of code in a program. This creates a bottleneck, as one processor needs to finish its job before handing over a new task to the next processor in line.

To solve this, scientists have devised a new framework for program execution in which the processing units work in parallel. The team outlined the new approach, dubbed "simultaneous and heterogeneous multithreading (SHMT)," in a paper published in December 2023 to the preprint server arXiv.

SHMT uses several processing units simultaneously for the same code region, rather than waiting for processors to work on different regions of the code in a sequence based on which component is best suited to a particular workload.

Another method commonly used to resolve this bottleneck is known as "software pipelining." It speeds things up by letting different components work on different tasks at the same time, rather than waiting for one processor to finish before the other begins operating.

However, in software pipelining, a single task can never be distributed between different components. This is not true of SHMT, which lets different processing units work on the same code region at the same time, while also letting them take on new workloads once they have done their bit.
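The difference from software pipelining can be illustrated with a toy sketch. This is not the researchers' implementation: ordinary Python threads stand in for the CPU, GPU and TPU, and the names `run_shared`, `unit_names` and `chunk_size` are hypothetical. The sketch only shows the scheduling idea, in which one task is cut into chunks and every unit pulls the next chunk as soon as it is free.

```python
import queue
import threading


def run_shared(data, unit_names, chunk_size=4):
    """Square every element of `data`, letting all units share the one task.

    Illustrative only: plain threads stand in for heterogeneous hardware.
    """
    # Cut the single task into chunks and place them on a shared queue.
    chunks = queue.Queue()
    for start in range(0, len(data), chunk_size):
        chunks.put((start, data[start:start + chunk_size]))

    result = [None] * len(data)
    handled = {name: 0 for name in unit_names}  # chunks processed per unit

    def worker(name):
        # Each unit keeps taking new chunks until none are left.
        while True:
            try:
                start, chunk = chunks.get_nowait()
            except queue.Empty:
                return
            for offset, value in enumerate(chunk):
                result[start + offset] = value * value
            handled[name] += 1  # each thread only touches its own key

    threads = [threading.Thread(target=worker, args=(n,)) for n in unit_names]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result, handled


result, handled = run_shared(list(range(10)), ["cpu", "gpu", "tpu"])
print(result)
print(handled)
```

How the chunks are divided among the three stand-in units varies from run to run, which is the point: no unit sits idle waiting for another to finish the whole task. A real system would also have to account for each unit's different speed and numerical precision, which this sketch ignores.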

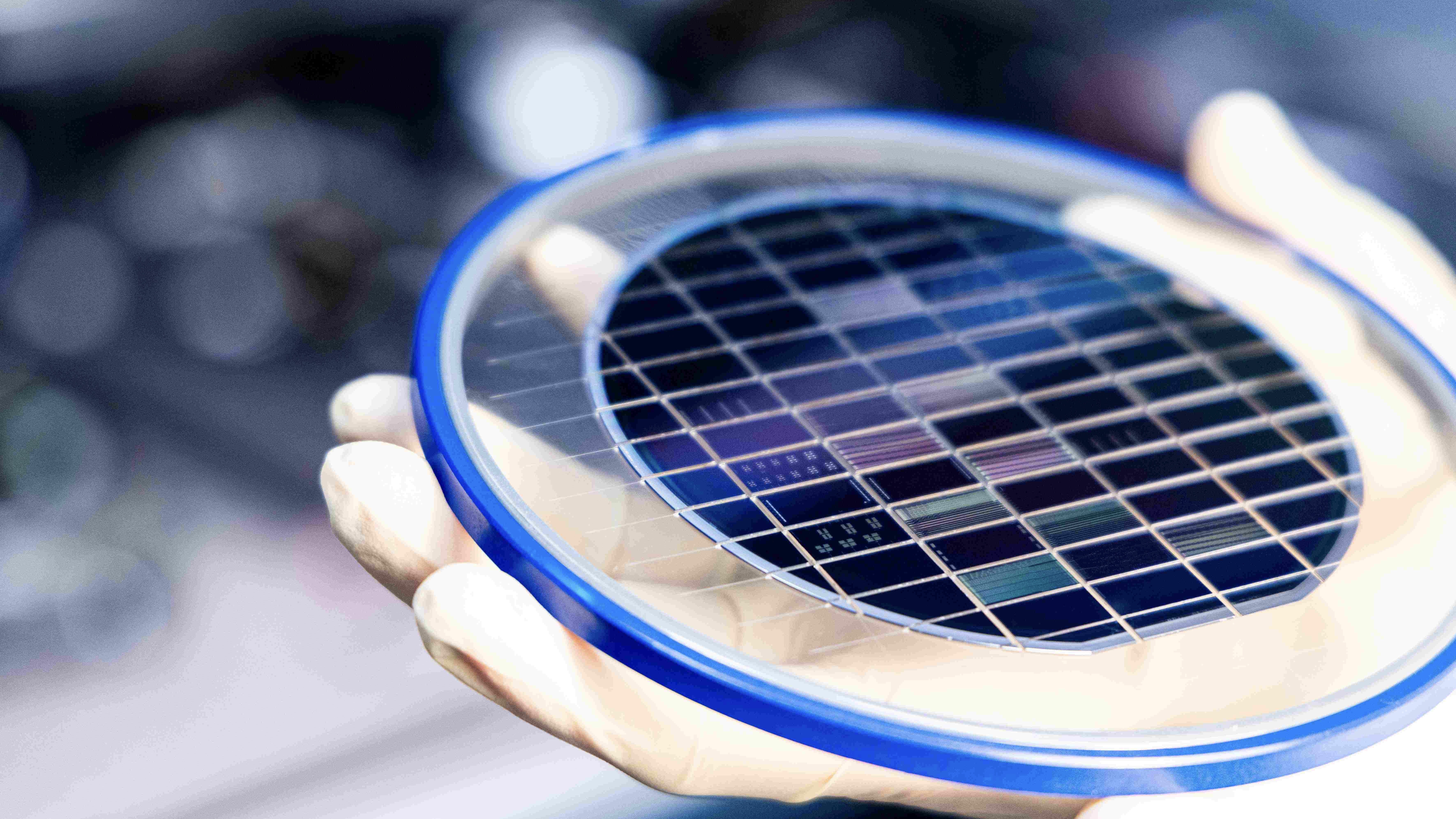

"You don't have to add new processors because you already have them," lead author Hung-Wei Tseng, associate professor of electrical and computer engineering at University of California, Riverside, said in a statement.

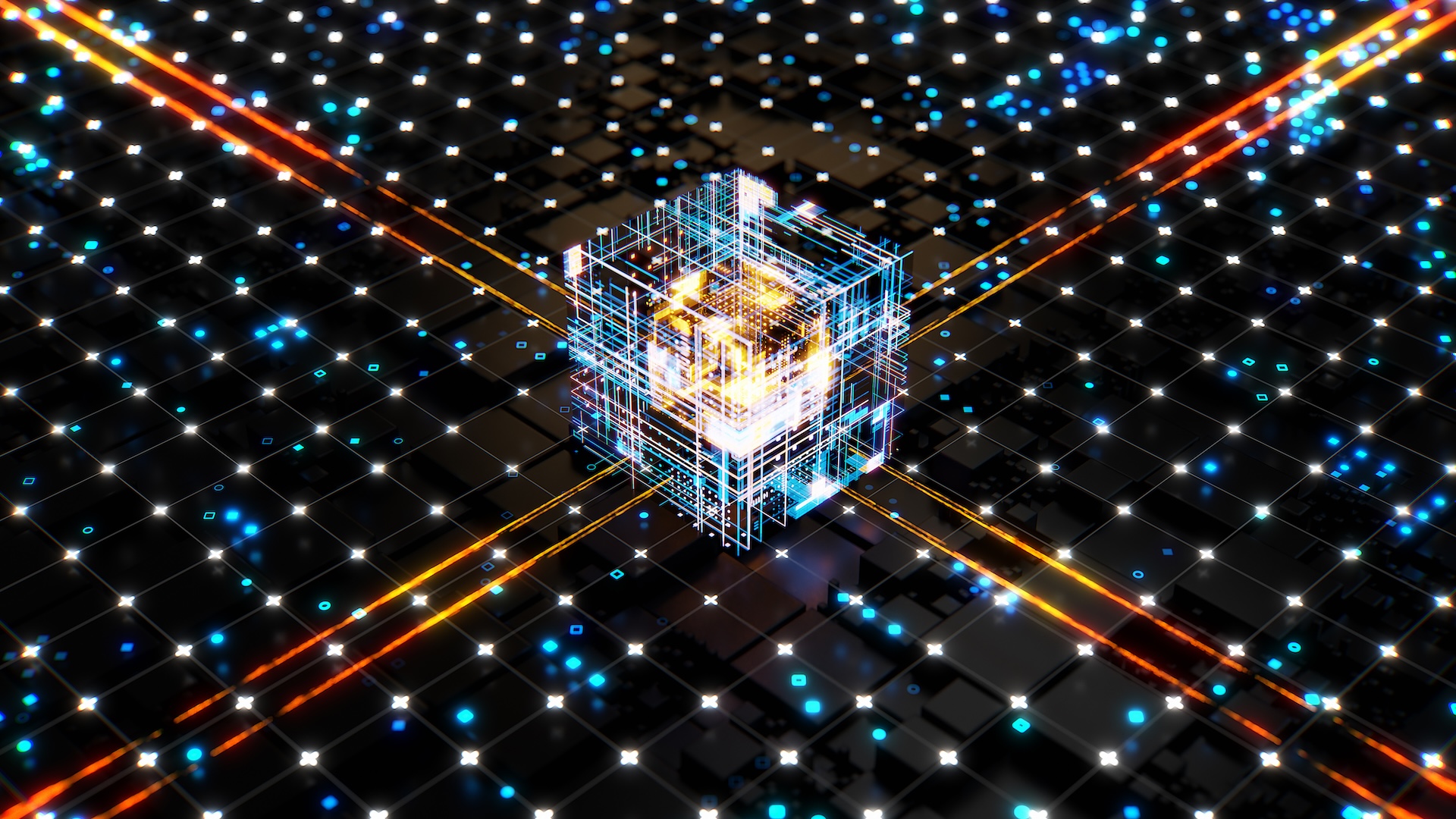

The scientists applied SHMT to a prototype system they built with a multi-core ARM CPU, an Nvidia GPU and a tensor processing unit (TPU) hardware accelerator. In tests, it performed tasks 1.95 times faster and consumed 51% less energy than a system that worked in the conventional way.
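To put those two figures side by side, the short calculation below converts them into ratios against the conventional system. It assumes that "1.95 times faster" means the conventional run time divided by the SHMT run time, and that both figures describe the same workloads. The function name `relative_metrics` is made up for this illustration.

```python
def relative_metrics(speedup, energy_saving):
    """Return (time, energy, average power) as fractions of the baseline."""
    time_ratio = 1.0 / speedup              # run time relative to baseline
    energy_ratio = 1.0 - energy_saving      # energy relative to baseline
    power_ratio = energy_ratio / time_ratio  # average power = energy / time
    return time_ratio, energy_ratio, power_ratio


time_ratio, energy_ratio, power_ratio = relative_metrics(1.95, 0.51)
print(f"run time: {time_ratio:.0%} of baseline")
print(f"energy:   {energy_ratio:.0%} of baseline")
print(f"power:    {power_ratio:.0%} of baseline")
```

Under those assumptions the prototype finishes in roughly half the time while drawing about the same average power, so most of the energy saving comes from the work finishing sooner.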

SHMT is also more energy efficient because much of the work that is normally handled exclusively by more energy-intensive components, like the GPU, can be offloaded to low-power hardware accelerators.

If this software framework is applied to existing systems, it could reduce hardware costs while also reducing carbon emissions, the scientists claimed, because it takes less time to handle workloads using more energy-efficient components. It might also reduce the demand for fresh water to cool massive data centers, if the technology is used in larger systems.

However, the study was just a demonstration of a prototype system. The researchers cautioned that further work is needed to determine how such a model can be implemented in practical settings, and which use cases or applications it will benefit the most.