Rapid progress in artificial intelligence (AI) is prompting people to question what the fundamental limits of the technology are. Increasingly, a topic once consigned to science fiction (the notion of a superintelligent AI) is now being considered seriously by scientists and experts alike.

The idea that machines might one day match or even surpass human intelligence has a long history. But the pace of progress in AI over recent decades has given renewed urgency to the topic, especially since the release of powerful large language models (LLMs) by companies like OpenAI, Google and Anthropic, among others.

IBM Deep Blue became the first AI system to defeat a reigning world chess champion (Garry Kasparov, pictured) in 1997.

Experts have wildly differing views on how feasible this idea of "artificial superintelligence" (ASI) is and when it might appear, but some suggest that such hyper-capable machines are just around the corner. What's certain is that if, and when, ASI does emerge, it will have enormous implications for humanity's future.

"I think we would enter a new era of automated scientific discoveries, vastly accelerated economic growth, longevity, and novel entertainment experiences," Tim Rocktäschel, professor of AI at University College London and a principal scientist at Google DeepMind, told Live Science, providing a personal opinion rather than Google DeepMind's official position. However, he also cautioned: "As with any significant technology in history, there is potential risk."

What is artificial superintelligence (ASI)?

Traditionally, AI research has focused on replicating specific capabilities that intelligent beings exhibit. These include things like the ability to visually analyze a scene, parse language or navigate an environment. In some of these narrow domains AI has already achieved superhuman performance, Rocktäschel said, most notably in games like Go and chess.

The stretch goal for the field, however, has always been to replicate the more general form of intelligence seen in animals and humans that combines many such capabilities. This concept has gone by several names over the years, including "strong AI" or "universal AI", but today it is most commonly called artificial general intelligence (AGI).

"For a long time, AGI has been a far-off north star for AI research," Rocktäschel said. "However, with the advent of foundation models [another term for LLMs] we now have AI that can pass a broad range of university entrance exams and participate in international math and coding competitions."

This is leading people to take the possibility of AGI more seriously, said Rocktäschel. And crucially, once we create AI that matches humans on a wide range of tasks, it may not be long before it achieves superhuman capabilities across the board. That's the idea, anyway. "Once AI reaches human-level capabilities, we will be able to use it to improve itself in a self-referential way," Rocktäschel said. "I personally believe that if we can reach AGI, we will reach ASI shortly, maybe a few years after that."

Once that milestone has been reached, we could see what British mathematician Irving John Good dubbed an "intelligence explosion" in 1965. He argued that once machines become smart enough to improve themselves, they would rapidly achieve levels of intelligence far beyond any human. He described the first ultraintelligent machine as "the last invention that man need ever make."

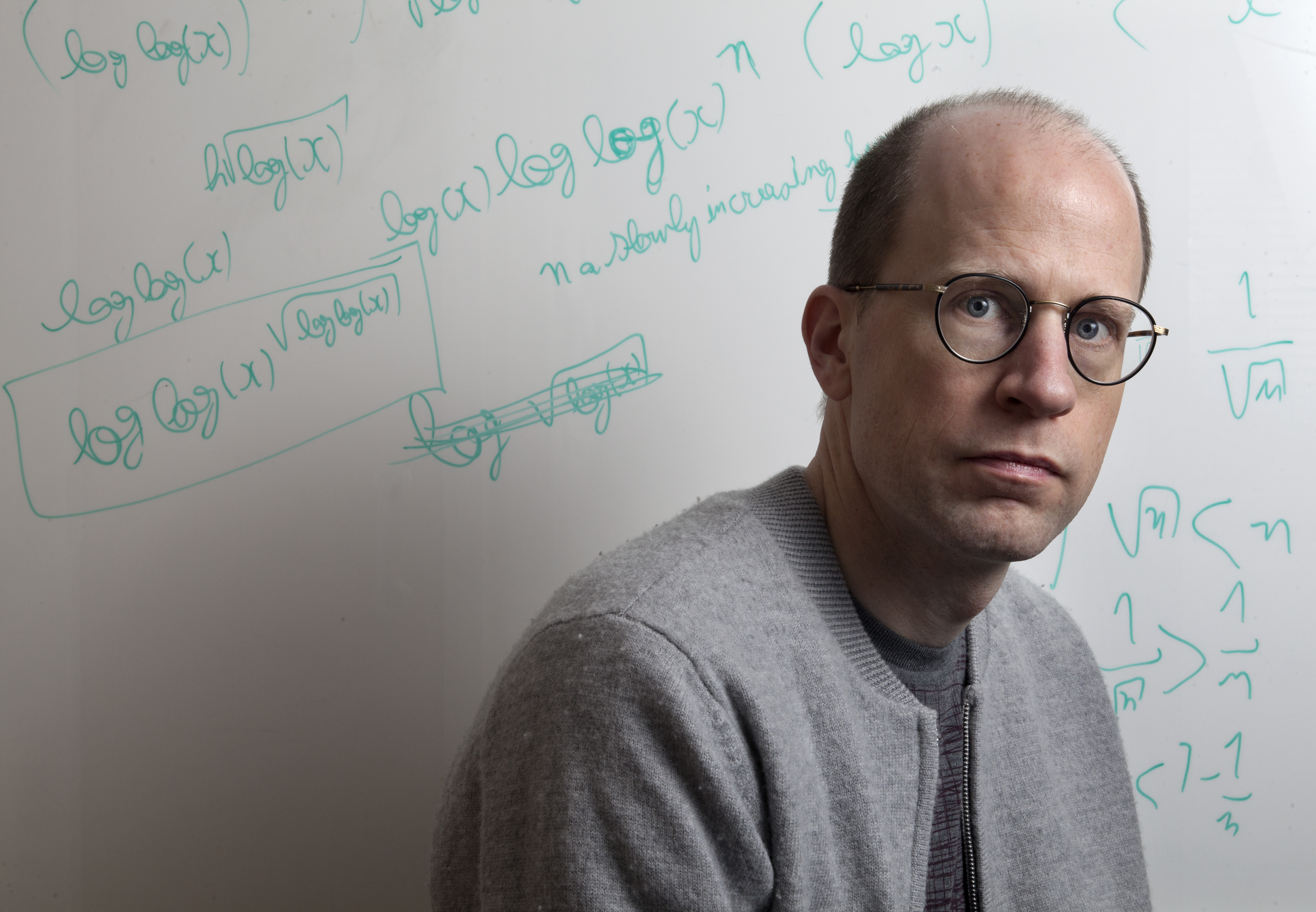

Nick Bostrom (pictured) philosophized on the implications of ASI in a landmark 2012 paper.

Renowned futurist Ray Kurzweil has argued this would lead to a "technological singularity" that would suddenly and irreversibly transform human civilization. The term draws parallels with the singularity at the heart of a black hole, where our understanding of physics breaks down. In the same way, the advent of ASI would lead to rapid and unpredictable technological growth that would be beyond our comprehension.

Exactly when such a transition might happen is debatable. In 2005, Kurzweil predicted AGI would appear by 2029, with the singularity following in 2045, a prediction he's stuck to ever since. Other AI experts offer wildly varying predictions, from within this decade to never. But a recent survey of 2,778 AI researchers found that, on aggregate, they believe there is a 50% chance ASI could appear by 2047. A broader analysis concurred that most scientists agree AGI might arrive by 2040.

What would ASI mean for humanity?

The implications of a technology like ASI would be enormous, prompting scientists and philosophers to dedicate considerable time to mapping out the promise and potential pitfalls for humanity.

On the positive side, a machine with almost unlimited capacity for intelligence could solve some of the world's most pressing challenges, said Daniel Hulme, CEO of the AI companies Satalia and Conscium. In particular, superintelligent machines could "remove the friction from the creation and dissemination of food, education, healthcare, energy, transport, so much that we can bring the cost of those goods down to zero," he told Live Science.

The hope is that this would free people from having to work to survive, so they could instead spend time doing things they're passionate about, Hulme explained. But unless systems are put in place to support those whose jobs are made redundant by AI, the outcome could be bleaker. "If that happens very quickly, our economies might not be able to rebalance, and it could lead to social unrest," he said.

The possibility of a superintelligence we have no control over has prompted fears that AI could present an existential risk to our species. This has become a popular trope in science fiction, with movies like "Terminator" or "The Matrix" portraying malevolent machines hell-bent on humanity's destruction.

But philosopher Nick Bostrom highlighted that an ASI wouldn't even have to be actively hostile to humans for various doomsday scenarios to play out. In a 2012 paper, he suggested that the intelligence of an entity is independent of its goals, so an ASI could have motivations that are completely alien to us and not aligned with human well-being.

Bostrom fleshed out this idea with a thought experiment in which a super-capable AI is set the seemingly innocuous task of producing as many paperclips as possible. If unaligned with human values, it may decide to eliminate all humans to prevent them from switching it off, or so it can turn all the atoms in their bodies into more paperclips.

Rocktäschel is more optimistic. "We build current AI systems to be helpful, but also harmless and honest assistants by design," he said. "They are tuned to follow human instructions, and are trained on feedback to provide helpful, harmless, and honest answers."

While Rocktäschel admitted these safeguards can be circumvented, he's confident we will develop better approaches in the future. He also thinks that it will be possible to use AI to oversee other AI, even if they are stronger.

Hulme said most current approaches to "model alignment" (efforts to ensure that AI is aligned with human values and desires) are too crude. Typically, they either provide rules for how the model should behave or train it on examples of human behavior. But he thinks these guardrails, which are bolted on at the end of the training process, could be easily bypassed by ASI.

Instead, Hulme thinks we must build AI with a "moral instinct." His company Conscium is attempting to do that by developing AI in virtual environments that have been engineered to reward behaviors like cooperation and altruism. Currently, they are working with very simple, "insect-level" AI, but if the approach can be scaled up, it could make alignment more robust. "Embedding morals in the instinct of an AI puts us in a much safer position than just having these sort of Whack-a-Mole guard rails," said Hulme.

Not everyone is convinced we need to start worrying quite yet, though. One common criticism of the concept of ASI, said Rocktäschel, is that we have no examples of humans who are highly capable across a wide range of tasks, so it may not be possible to achieve this in a single model either. Another objection is that the sheer computational resources required to achieve ASI may be prohibitive.

More practically, how we measure progress in AI may be misleading us about how close we are to superintelligence, said Alexander Ilic, head of the ETH AI Center at ETH Zurich, Switzerland. Most of the impressive results in AI in recent years have come from testing systems on several highly contrived tests of individual skills such as coding, reasoning or language comprehension, which the systems are explicitly trained to pass, said Ilic.

He compares this to cramming for exams at school. "You loaded up your brain to do it, then you wrote the test, and then you forgot all about it," he said. "You were smarter for attending the class, but the actual test itself is not a good proxy of the actual knowledge."

AI that is capable of passing many of these tests at superhuman levels may only be a few years away, said Ilic. But he believes today's dominant approach will not lead to models that can carry out useful tasks in the physical world or collaborate effectively with humans, which will be crucial for them to have a broad impact in the real world.