Image Credits: mathisworks / Getty Images

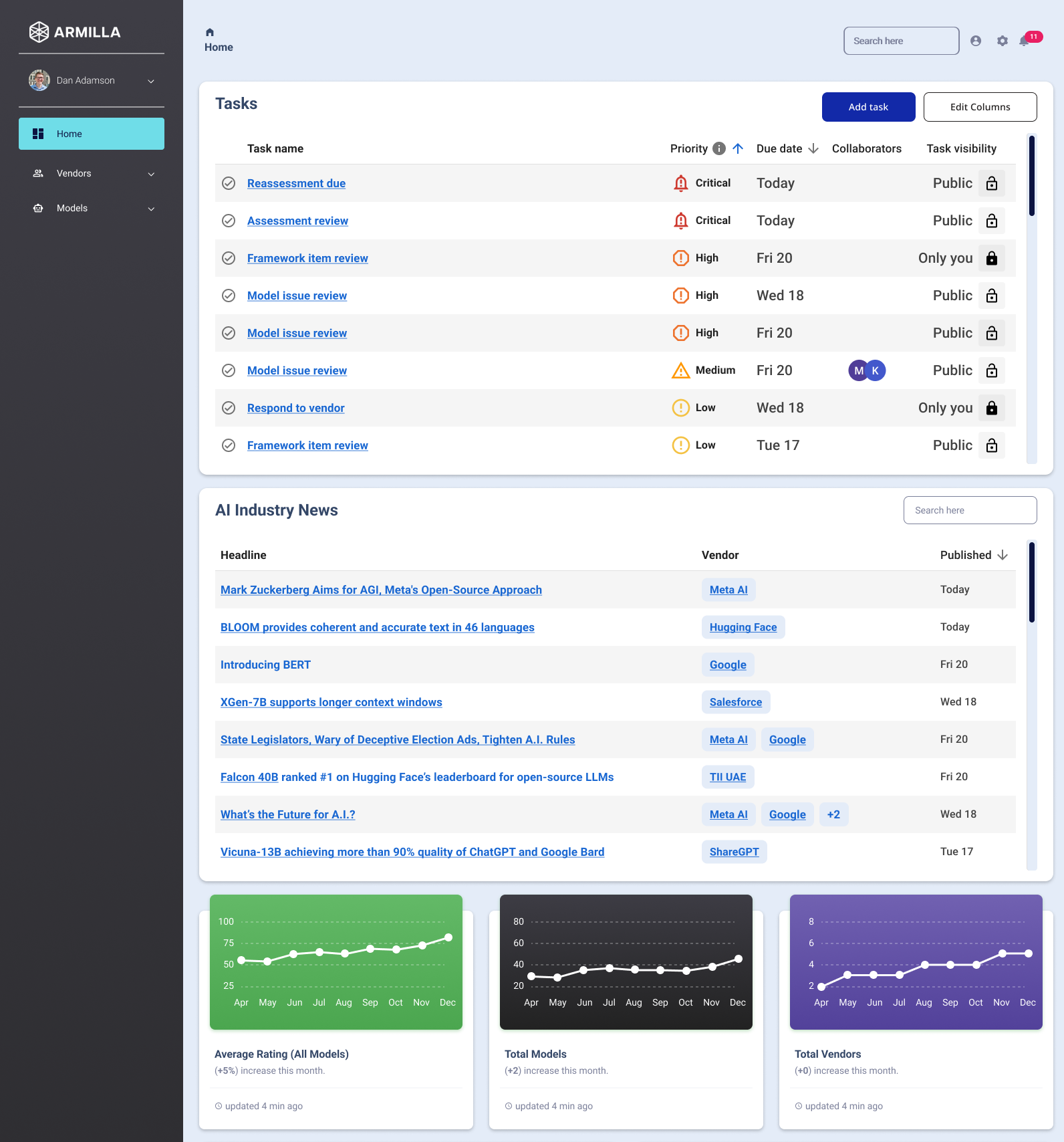

Image Credits: Armilla

There’s a lot that can go wrong with GenAI, especially third-party GenAI. It makes stuff up. It’s biased and toxic. And it can run afoul of copyright rules. According to a recent survey from MIT Sloan Management Review and Boston Consulting Group, third-party AI tools are responsible for over 55% of AI-related failures in organizations.

So it’s not surprising, exactly, that some companies are wary of adopting the tech just yet.

But what if GenAI came with a warranty?

That’s the business idea Karthik Ramakrishnan, an entrepreneur and electrical engineer, came up with several years ago while working at Deloitte as a senior manager. He’d co-founded two “AI-first” companies, Gallop Labs and Blu Trumpet, and eventually came to realize that trust, along with the ability to quantify risk, was holding back the adoption of AI.

“Right now, nearly every enterprise is looking for ways to implement AI to increase efficiency and keep up with the market,” Ramakrishnan told TechCrunch in an email interview. “To do this, many are turning to third-party vendors and implementing their AI models without a complete understanding of the quality of the products … AI is advancing at such a rapid pace that the risks and harms are always evolving.”

So Ramakrishnan teamed up with Dan Adamson, an expert in search algorithms and two-time startup founder, to start Armilla AI, which provides warranties on AI models to corporate customers.

How, you might be wondering, can Armilla do this given that most models are black boxes or otherwise gated behind licenses, subscriptions and APIs? I had the same question. Ramakrishnan’s answer: through benchmarking, and a careful approach to customer acquisition.

Armilla takes a model, whether open source or proprietary, and conducts assessments to “verify its quality,” informed by the global AI regulatory landscape. The company tests for things like hallucinations, racial and gender bias and fairness, general robustness and security across an array of theoretical applications and use cases, leveraging its in-house assessment technology.

If the model passes muster, Armilla backs it with its warranty, which reimburses the model’s buyer for any fees they paid to use the model.

“What we really offer enterprises is confidence in the technology they’re procuring from third-party AI vendors,” Ramakrishnan said. “Enterprises can come to us and have us perform assessments on the vendors they’re looking to use. Just like the penetration testing they would do for new technology, we perform penetration testing for AI.”

I asked Ramakrishnan, by the way, whether there were any models Armilla wouldn’t test for ethical reasons, say a facial recognition algorithm from a vendor known to do business with questionable actors. He said:

“It would not only be against our ethics, but against our business model, which is predicated on trust, to produce assessments and reports that provide false confidence in AI models that are problematic for a client and society. From a legal standpoint, we aren’t going to take on clients for models that are prohibited by the EU, that have been banned, which is the case for some facial recognition and biometric categorization systems, for example. But applications that fall into the ‘high risk’ category as defined by the EU AI Act, yes.”

Now, the concept of warranties and insurance coverage for AI isn’t new, a fact that surprised this writer, frankly. In 2018, Munich Re debuted an insurance product, aiSure, designed to protect against losses from potentially unreliable AI models by running the models through benchmarks similar to Armilla’s. Outside of warranties, a growing number of vendors, including OpenAI, Microsoft and AWS, offer protections pertaining to copyright violations that might arise from deployment of their AI tools.

But Ramakrishnan claims that Armilla’s approach is unique.

“Our assessment touches on a wide range of areas, including KPIs, processes, performance, data quality, and qualitative and quantitative criteria, and we do it at a fraction of the cost and time,” he added. “We assess AI models based on requirements set out in legislation such as the EU AI Act or the AI hiring bias law in NYC (NYC Local Law 144) and other state regulations, such as Colorado’s proposed AI quantitative testing regulation or New York’s insurance circular on the use of AI in underwriting or pricing. We’re also ready to conduct assessments required by other emerging regulations as they come into play, such as Canada’s AI and Data Act.”

Armilla, which launched coverage in late 2023, backed by carriers Swiss Re, Greenlight Re and Chaucer, claims to have 10 or so customers, including a healthcare company applying GenAI to process medical records. Ramakrishnan tells me that Armilla’s client base has been growing 2x month over month since Q4 2023.

“We’re serving two primary audiences: enterprises and third-party AI vendors,” Ramakrishnan said. “Enterprises use our warranty to establish protection for the third-party AI vendors they’re procuring. Third-party vendors use our warranty as a stamp of approval that their product is trustworthy, which helps to shorten their sales cycles.”

Warranties for AI make intuitive sense. But part of me wonders whether Armilla will be able to keep up with fast-shifting AI policy (e.g. New York City’s hiring algorithm bias law, the EU AI Act, etc.), which could put it on the hook for sizeable payouts if its assessments, and contracts, aren’t watertight.

Ramakrishnan brushed aside this concern.

“Regulation is rapidly developing in many jurisdictions independently,” he said, “and it’ll be critical to understand the nuances of legislation around the world. There’s no ‘one-size-fits-all’ that we can apply as a global standard, so we need to stitch it all together. This is challenging, but has the benefit of creating a ‘moat’ for us.”

Armilla, based in Toronto with 13 employees, recently raised $4.5 million in a seed round led by Mistral (not to be confused with the AI startup of the same name) with participation from Greycroft, Differential Venture Capital, Mozilla Ventures, Betaworks Ventures, MS&AD Ventures, 630 Ventures, Morgan Creek Digital, Y Combinator, Greenlight Re and Chaucer. The round brings its total raised to $7 million; Ramakrishnan said that the proceeds will be put toward expanding Armilla’s existing warranty offering as well as introducing new products.

“Insurance will play the biggest role in addressing AI risk, and Armilla is at the forefront of developing insurance products that will allow companies to deploy AI solutions safely,” Ramakrishnan said.