In late 2021, Apple announced several new features related to child sexual abuse material (CSAM). Some of them have come under a great deal of scrutiny and generated some outrage, including from Apple employees. Here’s what you need to know about the new technology before it rolls out later this year.

Apple and CSAM Scanning: The latest news

Dec. 8, 2022: Apple has decided to kill the controversial photo-scanning feature.

Apr. 21, 2022: The Conversation Safety feature for Messages is coming to the U.K., though a timeline has not been announced.

Dec. 15, 2021: Apple removed references to the CSAM system from its website. Apple says the CSAM feature is still “delayed” and not canceled.

Dec. 14, 2021: Apple released the Conversation Safety feature for Messages in the official iOS 15.2 update. The CSAM feature was not released.

Nov. 10, 2021: The iOS 15.2 beta 2 has the less-controversial Conversation Safety feature for Messages. It relies on on-device scanning of images, but it doesn’t match images to a known database and isn’t enabled unless a parent account enables it. This is different from the CSAM detection system.

Sept. 3, 2021: Apple announced that it will delay the release of its CSAM features in iOS 15, iPadOS 15, watchOS 8, and macOS 12 Monterey until later this year. The features will be part of an OS update.

Aug. 24, 2021: Apple has confirmed to 9to5Mac that it is already scanning iCloud emails for CSAM using image-matching technology.

Aug. 19, 2021: Nearly 100 policy and rights groups published an open letter urging Apple to drop plans to implement the system in iOS 15.

Aug. 18, 2021: After a report that the NeuralHash system that Apple’s CSAM tech is based on was spoofed, Apple said the system “behaves as described.”

Aug. 13, 2021: In an interview with Joanna Stern from The Wall Street Journal, senior vice president of Software Engineering Craig Federighi said Apple’s new technology is “widely misunderstood.” He further explained how the system works, as outlined below. Apple also released a document with more details about the safety features in Messages and the CSAM detection feature.

The basics

What are the technologies Apple is rolling out?

Apple will be rolling out new anti-CSAM features in three areas: Messages, iCloud Photos, and Siri and Search. Here’s how each of them will be implemented, according to Apple.

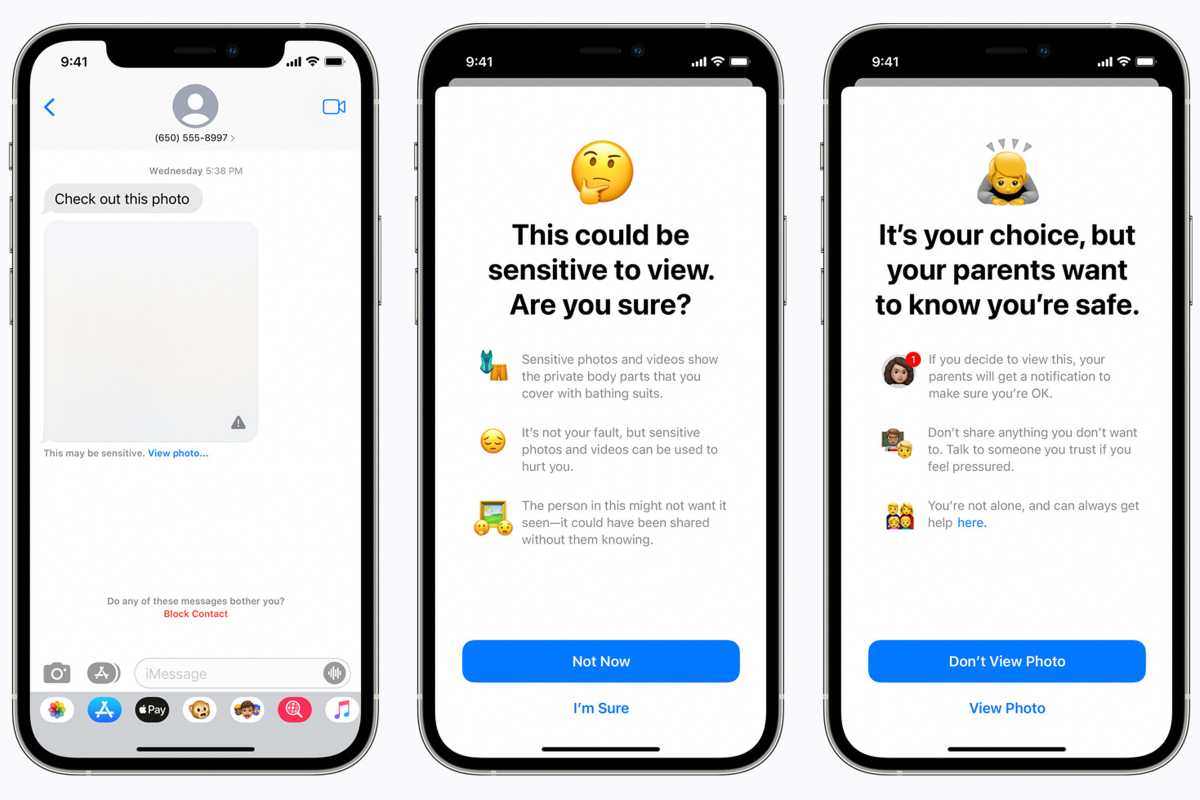

Messages: The Messages app will use on-device machine learning to warn children and parents about sensitive content.

iCloud Photos: Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. Apple has decided this feature won’t arrive on devices.

Siri and Search: Siri and Search will provide additional resources to help children and parents stay safe online and get help with unsafe situations.

When will the system arrive?

Apple announced in early September that the system will not be available at the fall release of iOS 15, iPadOS 15, watchOS 8, and macOS 12 Monterey. The features will be available in OS updates later this year.

The new CSAM detection tools will arrive with the new OSes later this year.

Why is the system releasing now?

In an interview with Joanna Stern from The Wall Street Journal, Craig Federighi said the reason why it’s releasing in iOS 15 is that “we figured it out.”

CSAM scanning

Does the scanning tech mean Apple will be able to see my photos?

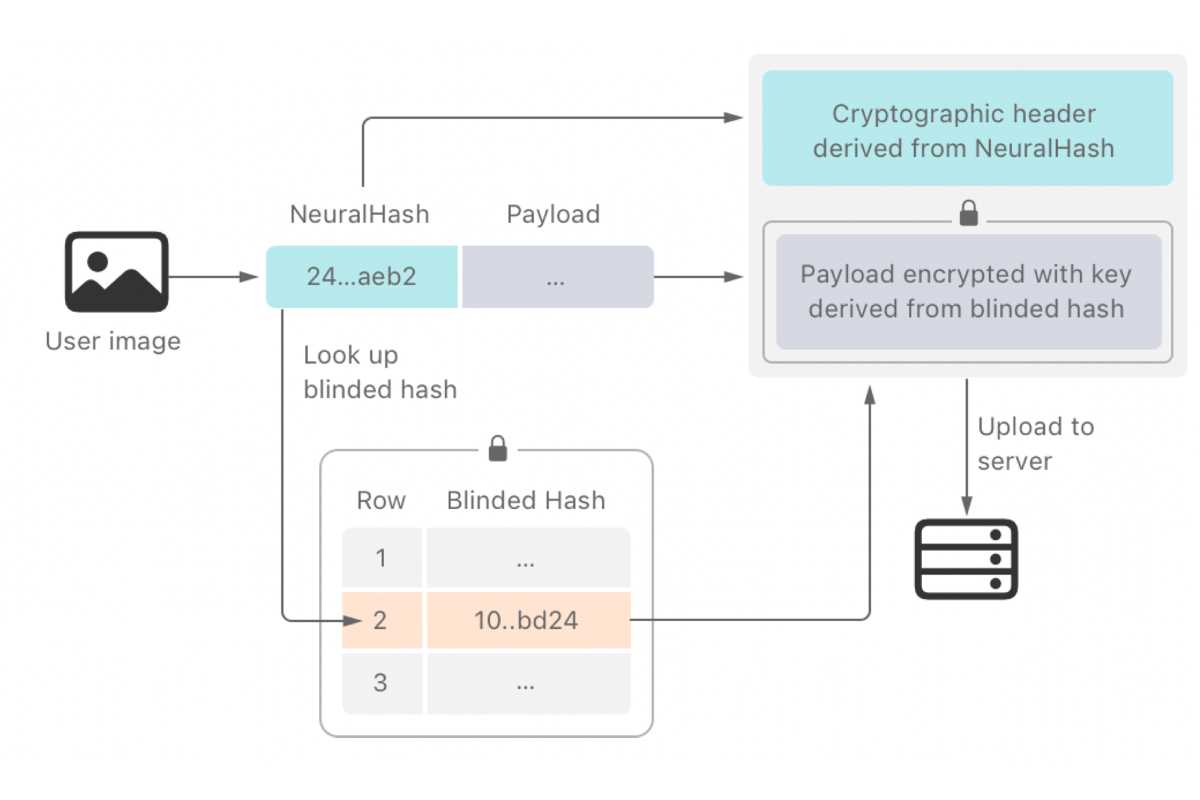

Not exactly. Here’s how Apple explains the technology: Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices. As Apple explains, the system is strictly looking for “specific, known” images. An Apple employee will only see photos that are tagged as having the hash and even then only when a threshold is met.
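To make the idea of matching against a database of known hashes concrete, here is a minimal sketch. It is not Apple’s implementation: SHA-256 stands in for the perceptual NeuralHash (so it only matches byte-identical files), the database is a plain Python set rather than the unreadable, transformed set Apple describes, and the names `stand_in_hash`, `known_hashes`, and `matches_known_hash` are hypothetical.

```python
import hashlib


def stand_in_hash(image_bytes: bytes) -> str:
    """Stand-in for a perceptual hash.

    Assumption: SHA-256 is used purely for illustration. It is NOT
    NeuralHash and matches only byte-identical inputs.
    """
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical database of known hashes. In Apple's description the real
# list comes from NCMEC and is stored on the device in an unreadable form.
known_hashes = {
    stand_in_hash(b"known-image-1"),
    stand_in_hash(b"known-image-2"),
}


def matches_known_hash(image_bytes: bytes, database: set) -> bool:
    """Return True only if the image's hash is already in the database."""
    return stand_in_hash(image_bytes) in database


print(matches_known_hash(b"known-image-1", known_hashes))
print(matches_known_hash(b"new-family-photo", known_hashes))
```

The point of the sketch is that a lookup like this can only recognize images whose hashes are already in the list; it does not evaluate what a new photo depicts.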

But Apple is scanning photos on my device, right?

Yes and no. The system is multi-faceted. For one, Apple says the system does not work for users who have iCloud Photos disabled, though it’s not totally clear if that scanning is only performed on images uploaded to iCloud Photos, or all images are scanned and compared but the results of the scan (a hash match or not) are only sent along with the photo when it’s uploaded to iCloud Photos. Federighi said “the cloud does the other half of the algorithm,” so while photos are scanned on the device it requires iCloud to fully work. Federighi emphatically stated that the system is “literally part of the pipeline for storing images in iCloud.”

What happens if the system detects CSAM images?

Since the system only works with CSAM image hashes provided by NCMEC, it will only report photos that are known CSAM in iCloud Photos. If it does detect CSAM over a certain threshold (Federighi said that it’s “something on the order of 30”), Apple will then conduct a human review before deciding whether to make a report to NCMEC. Apple says there is no automated reporting to law enforcement, though it will report any instances to the appropriate authorities.
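The threshold step can be sketched in a few lines. This is an illustration under stated assumptions, not Apple’s code: the value 30 comes from Federighi’s “something on the order of 30,” the choice to trigger when the count reaches the threshold (rather than strictly exceeds it) is a guess, and `REVIEW_THRESHOLD` and `needs_human_review` are hypothetical names.

```python
# Assumption: Federighi described the threshold only as
# "something on the order of 30"; the exact value is not public here.
REVIEW_THRESHOLD = 30


def needs_human_review(match_flags, threshold=REVIEW_THRESHOLD):
    """Return True once the number of matching uploads reaches the threshold.

    match_flags is an iterable of booleans, one per uploaded photo, where
    True means the photo's hash matched a known hash.
    """
    match_count = sum(1 for matched in match_flags if matched)
    return match_count >= threshold


# A single match, or even 29, does not trigger a review in this sketch.
print(needs_human_review([True] * 29 + [False] * 500))
print(needs_human_review([True] * 30 + [False] * 500))
```

In this sketch nothing is escalated for an account below the threshold, which mirrors the description that human review happens only after the threshold is met.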

Could the system mistake an actual photo of my child as CSAM?

It’s extremely unlikely. Since the system is only scanning for known images, Apple says the likelihood that the system would incorrectly flag any given account is less than one in one trillion per year. And if it does happen, a human review would catch it before it escalated to the authorities. Additionally, there is an appeals process in place for anyone who feels their account was flagged and disabled in error.

A report in August, however, seemingly proved that the system is fallible. GitHub user AsuharietYgvar reportedly outlined details of the NeuralHash system Apple uses, while user dxoigmn seemingly claimed to have tricked the system with two different images that created the same hash. In response, Apple defended the system, telling Motherboard that the one used “is a generic version and not the final version that will be used for iCloud Photos CSAM detection.” In a document analyzing the security threat, Apple said, “The NeuralHash algorithm [… is] included as part of the code of the signed operating system [and] security researchers can verify that it behaves as described.”

Can I opt out of the iCloud Photos CSAM scanning?

No, but you can disable iCloud Photos to prevent the feature from working. It is unclear if doing so would fully turn off Apple’s on-device scanning of photos, but the results of those scans (matching a hash or not) are only received by Apple when the image is uploaded to iCloud Photos.

Messages

Is Apple scanning all of my photos in Messages too?

Not exactly. Apple’s safety measures in Messages are designed to protect children and are only available for child accounts set up as families in iCloud.

So how does it work?

Communication safety in Messages is a completely different technology than CSAM scanning for iCloud Photos. Rather than using image hashes to compare against known images of child sexual abuse, it analyzes images sent or received by Messages using a machine learning algorithm for any sexually explicit content. Images are not shared with Apple or any other agency, including NCMEC. It is a system that parents can enable on child accounts to give them (and the child) a warning if they’re about to receive or send sexually explicit material.

Can parents opt out?

Parents need to specifically enable the new Messages image scanning feature on the accounts they have set up for their children, and it can be turned off at any time.

Will iMessages still be end-to-end encrypted?

Yes. Apple says communication safety in Messages doesn’t change the privacy features baked into Messages, and Apple never gains access to communications. Furthermore, none of the communications, image evaluation, interventions, or notifications are available to Apple.

What happens if a sexually explicit image is discovered?

When the parent has this feature enabled for their child’s account and the child sends or receives sexually explicit images, the photo will be blurred and the child will be warned, presented with resources, and reassured it is okay if they do not want to view or send the photo. For accounts of children age 12 and under, parents can set up parental notifications that will be sent if the child confirms and sends or views an image that has been determined to be sexually explicit.
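The flow described above can be summarized as a small decision function. This is only a sketch of the rules as stated in this article, not Apple’s implementation: the `ChildAccount` type, the `handle_image` function, and the action names are all hypothetical, and the classifier result is passed in as a simple boolean.

```python
from dataclasses import dataclass


@dataclass
class ChildAccount:
    """Hypothetical model of a child account in a family group."""
    age: int
    feature_enabled: bool         # parent turned on communication safety
    parental_notifications: bool  # parent opted in to notifications


def handle_image(account, is_explicit, child_proceeds):
    """Return the list of actions taken for one sent or received image.

    is_explicit stands in for the on-device classifier's verdict;
    child_proceeds is True if the child confirms and views or sends anyway.
    """
    actions = []
    if not account.feature_enabled or not is_explicit:
        return actions  # nothing happens unless a parent enabled the feature
    actions += ["blur_photo", "warn_child", "show_resources"]
    # Parental notifications apply only to accounts of children 12 and under.
    if child_proceeds and account.age <= 12 and account.parental_notifications:
        actions.append("notify_parents")
    return actions


print(handle_image(ChildAccount(10, True, True), True, True))
print(handle_image(ChildAccount(15, True, True), True, True))
```

In this sketch a 15-year-old who proceeds still sees the blur and warning, but no notification goes to the parents, and nothing in the flow involves reporting to Apple.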

Siri and Search

What’s new in Siri and Search?

Apple is enhancing Siri and Search to help people find resources for reporting CSAM and expanding guidance in Siri and Search by providing additional resources to help children and parents stay safe online and get help with unsafe situations. Apple is also updating Siri and Search to intervene when users perform searches for queries related to CSAM. Apple says the intervention will include explaining to users that interest in this topic is harmful and problematic and providing resources from partners to get help with this issue.

The controversy

So why are people upset?

While most people agree that Apple’s system is appropriately limited in scope, experts, watchdogs, and privacy advocates are concerned about the potential for abuse. For example, Edward Snowden, who exposed global surveillance programs by the NSA and is living in exile, tweeted, “No matter how well-intentioned, Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.” Additionally, the Electronic Frontier Foundation criticized the system, and Matthew Green, a cryptography professor at Johns Hopkins, explained the potential for misuse with the system Apple is using.

People are also concerned that Apple is sacrificing the privacy built into the iPhone by using the device to scan for CSAM images. While many other services scan for CSAM images, Apple’s system is unique in that it uses on-device matching rather than scanning images uploaded to the cloud.

Can the CSAM system be used to scan for other image types?

Not at the moment. Apple says the system is only designed to scan for CSAM images. However, Apple could theoretically augment the hash list to look for known images related to other things, such as LGBTQ+ content, but has repeatedly said the system is only designed for CSAM.

Is Apple scanning any other apps or services?

Apple recently confirmed that it has been scanning iCloud emails using image-matching technology on its servers. However, it insists that iCloud backups and photos are not part of this system. Of note, iCloud emails are not encrypted on Apple’s servers, so scanning images is an easier process.

What if a government forces Apple to scan for other images?

Apple says it will refuse such demands.

Do other companies scan for CSAM images?

Yes, most cloud services, including Dropbox, Google, and Microsoft, as well as Facebook, also have systems in place to detect CSAM images. These all operate by decrypting your images in the cloud to scan them.

Can the Messages technology make a mistake?

Federighi says the system “is very hard” to fool. However, while he says Apple “had a tough time” coming up with images to fool the Messages system, he admitted that it’s not foolproof.

Could Apple be blocked from implementing its CSAM detection system?

It’s hard to say, but it’s likely that there will be legal battles both before and after the new technologies are implemented. On August 19, more than 90 policy and rights groups published an open letter urging Apple to abandon the system: “Though these capabilities are intended to protect children and to reduce the spread of child sexual abuse material, we are concerned that they will be used to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children,” the letter said.