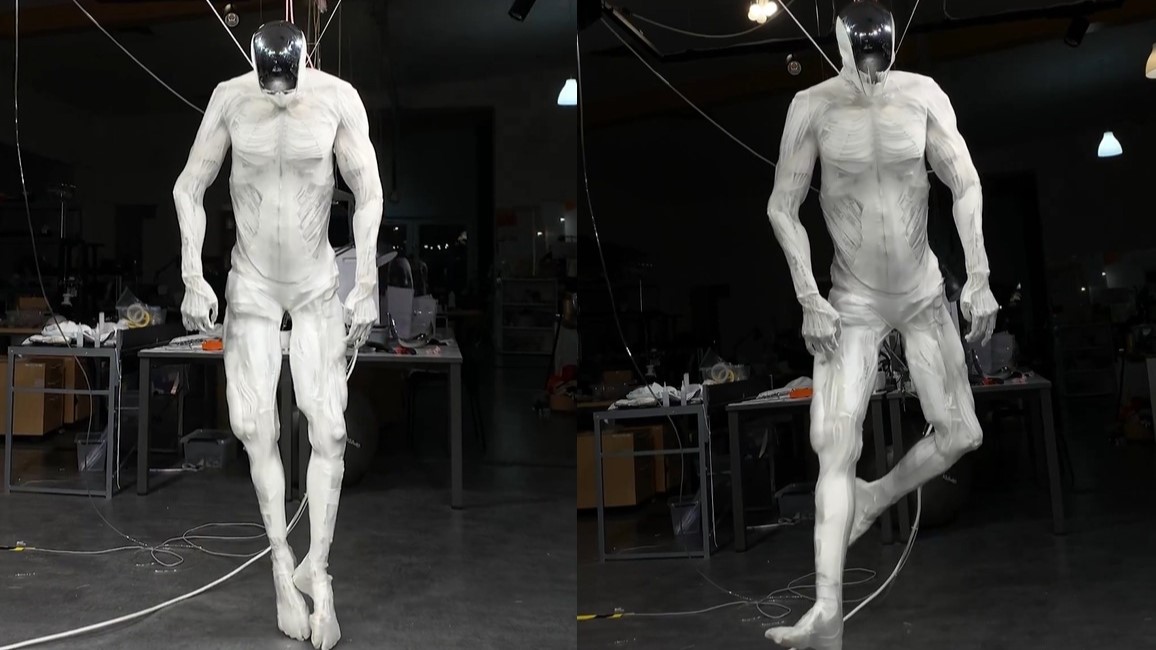

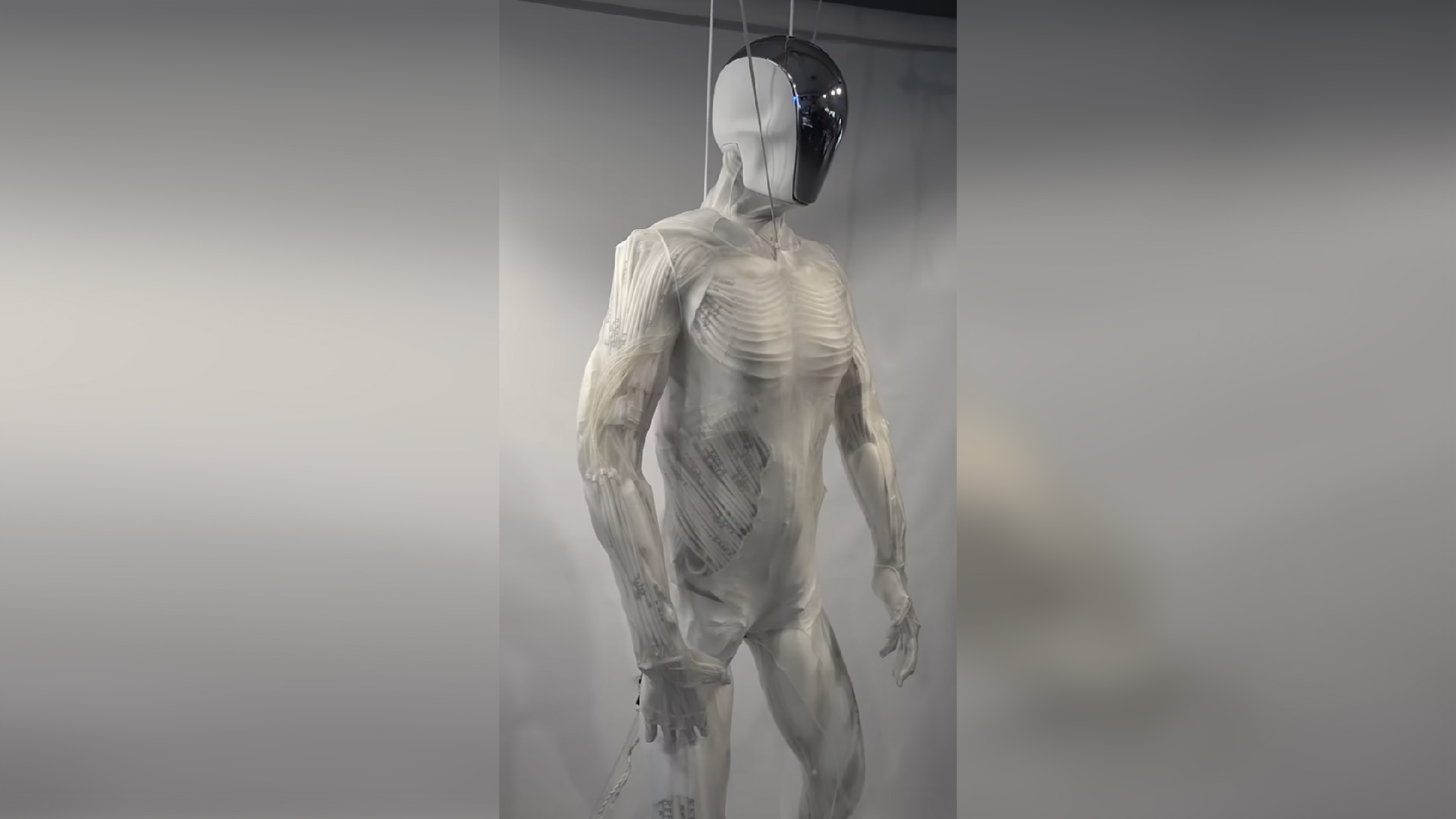

A self-correcting humanoid robot that learned to make a cup of coffee just by watching footage of a human doing it can now answer questions, thanks to an integration with OpenAI's technology.

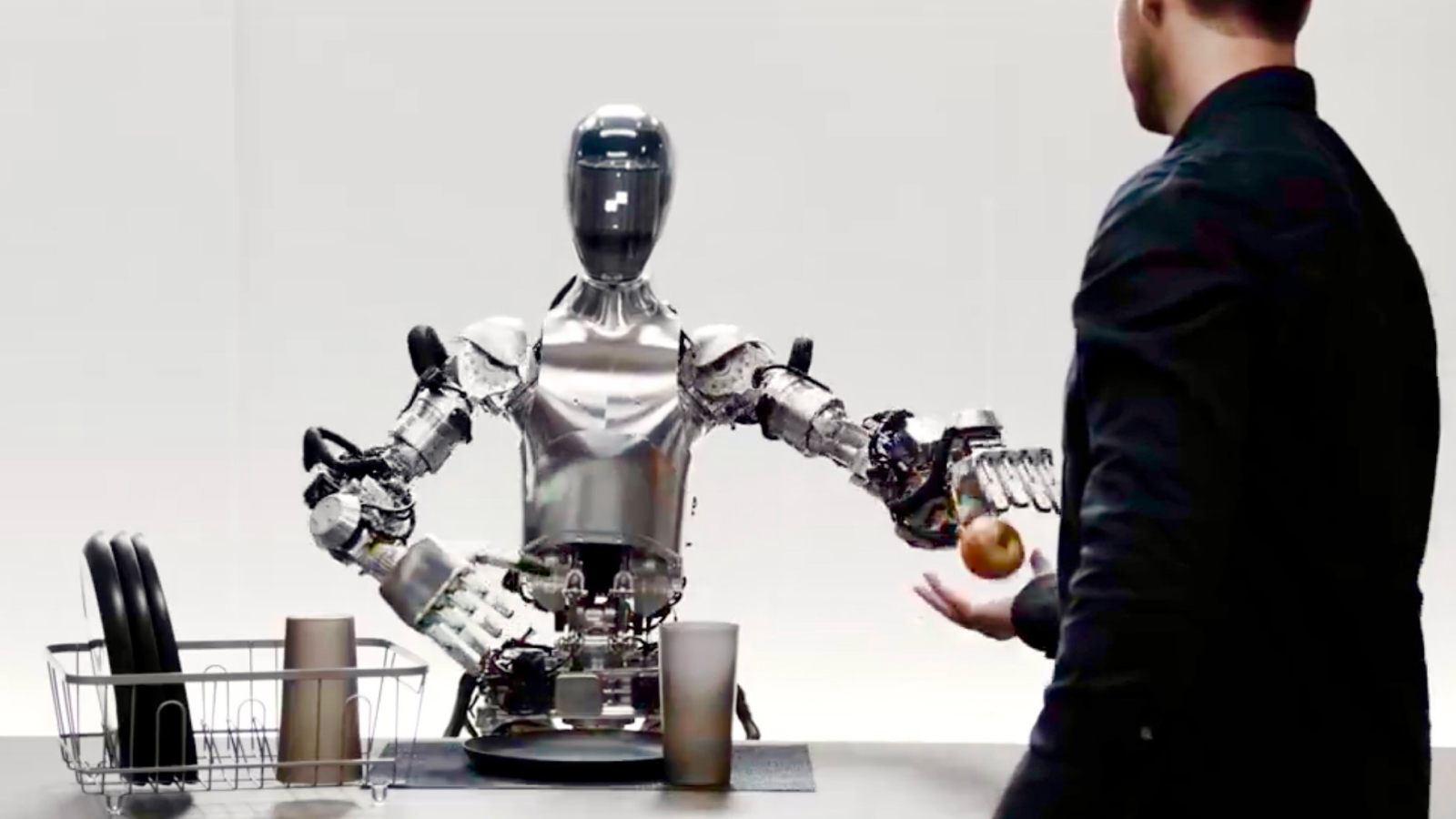

In the new promotional video, a technician asks Figure 01 to perform a range of simple tasks in a minimalist test environment resembling a kitchen. He first asks the robot for something to eat and is handed an apple. Next, he asks Figure 01 to explain why it handed him an apple while it was picking up some trash. The robot answers all the questions in a robotic but friendly voice.

The company says in its video that the conversation is powered by an integration with technology made by OpenAI, the company behind ChatGPT. It's unlikely that Figure 01 is using ChatGPT itself, however, because that AI tool does not normally use filler words like "um," which this robot does.

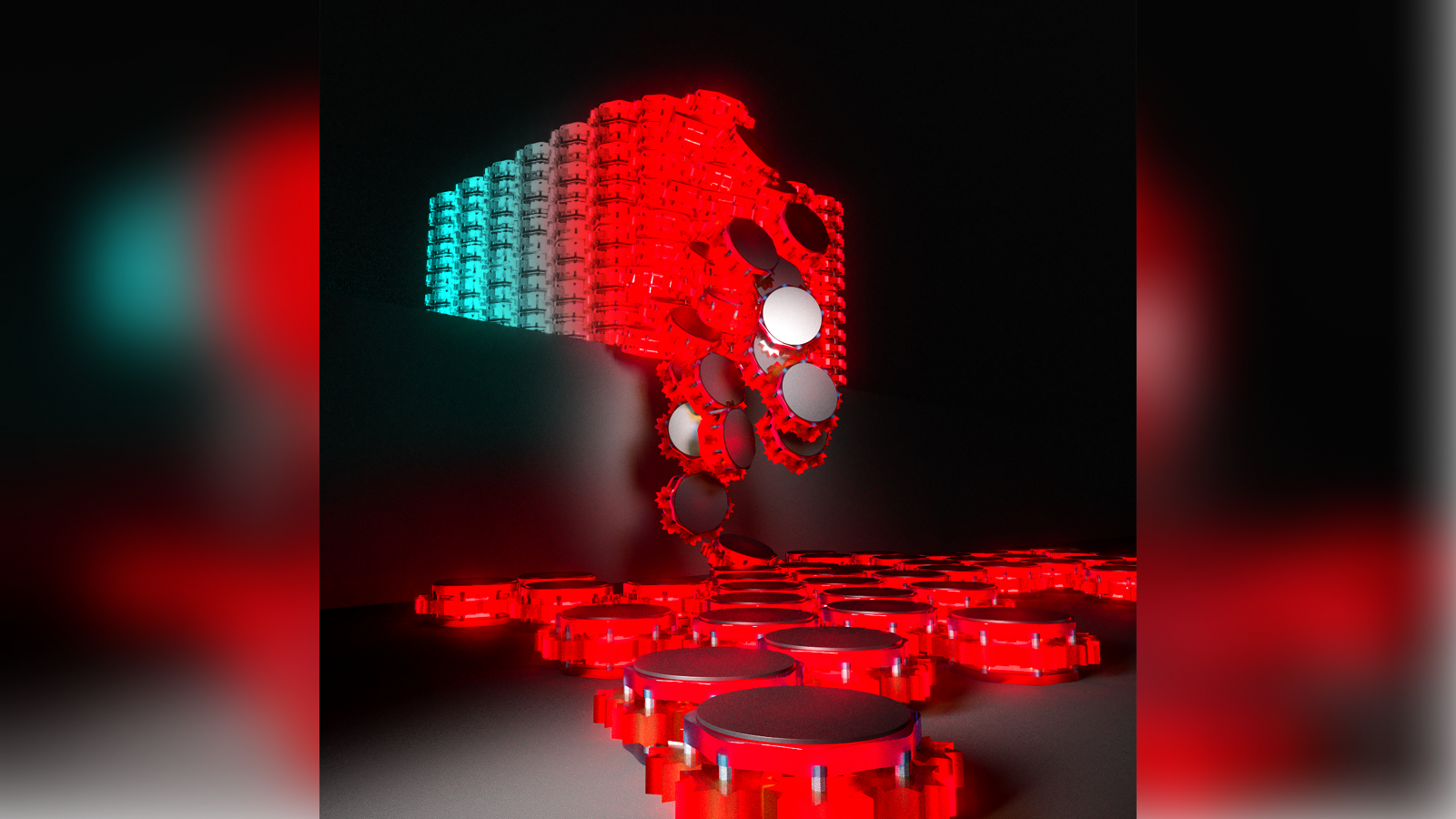

With OpenAI, Figure 01 can now have full conversations with people
- OpenAI models provide high-level visual and language intelligence
- Figure neural networks deliver fast, low-level, dexterous robot actions

Everything in this video is a neural network: pic.twitter.com/OJzMjCv443 March 13, 2024

Should everything in the video work as claimed, it represents an advance in two key areas of robotics. As experts previously told Live Science, the first advance is the mechanical engineering behind dexterous, self-correcting movements like those people can perform. This requires very precise motors, actuators and grippers inspired by joints or muscles, as well as the motor control to manipulate them so the robot can carry out a task and hold objects delicately.

Even picking up a cup, something people barely think about consciously, requires intensive on-board processing to orient muscles in a precise sequence.

The second advance is real-time natural language processing (NLP), thanks to the addition of OpenAI's engine, which needs to be as fast and responsive as ChatGPT is when you type a query into it. The robot also needs software to translate this data into audio, or speech. NLP is a field of computer science that aims to give machines the ability to understand and communicate in human language.

Although the footage seems impressive, Livescience.com is so far sceptical. Listen at 0.52s and again at 1.49s, when Figure 01 starts a sentence with a quick "uh" and repeats the word "I," just like a human taking a split second to gather their thoughts before speaking. Why (and how) would an AI speech engine include such random, anthropomorphic verbal tics? Overall, the inflection is also suspiciously imperfect, too much like the natural, unconscious cadence humans use in speech.

We suspect the video might actually be pre-recorded to showcase what Figure AI is working on, rather than being a live field test. But if, as the video caption claims, everything really is the result of a neural network and the footage genuinely shows Figure 01 responding in real time, we've just taken another giant leap towards the future.