Artificial intelligence (AI) chatbots are terrible at remembering things, both between separate conversations and even during the same conversation. But two recent breakthroughs might completely change this.

If you talk to a large language model (LLM) like OpenAI's ChatGPT for long enough, it will begin to forget crucial pieces of information, especially if the conversation stretches on for more than 4 million words of input. Its performance then begins to deteriorate rapidly.

Chatbots like ChatGPT begin to fail if a conversation runs long enough, and they haven't yet been able to remember details between separate conversations.

Meanwhile, ChatGPT and other LLMs can't retain information between conversations. For example, if you finish one conversation and restart ChatGPT a week later, the chatbot won't remember anything from the previous exchange.

But two separate teams have potentially found solutions to these memory issues. A team of scientists led by the Massachusetts Institute of Technology (MIT) has pinpointed the reason AI forgets things mid-conversation and come up with a method to fix it, while developers at OpenAI have begun testing long-term memory, in which you can tell ChatGPT to remember parts of a conversation, ask it what it remembers and later tell it to forget something, or wipe its memory entirely.

Improving mid-conversation performance

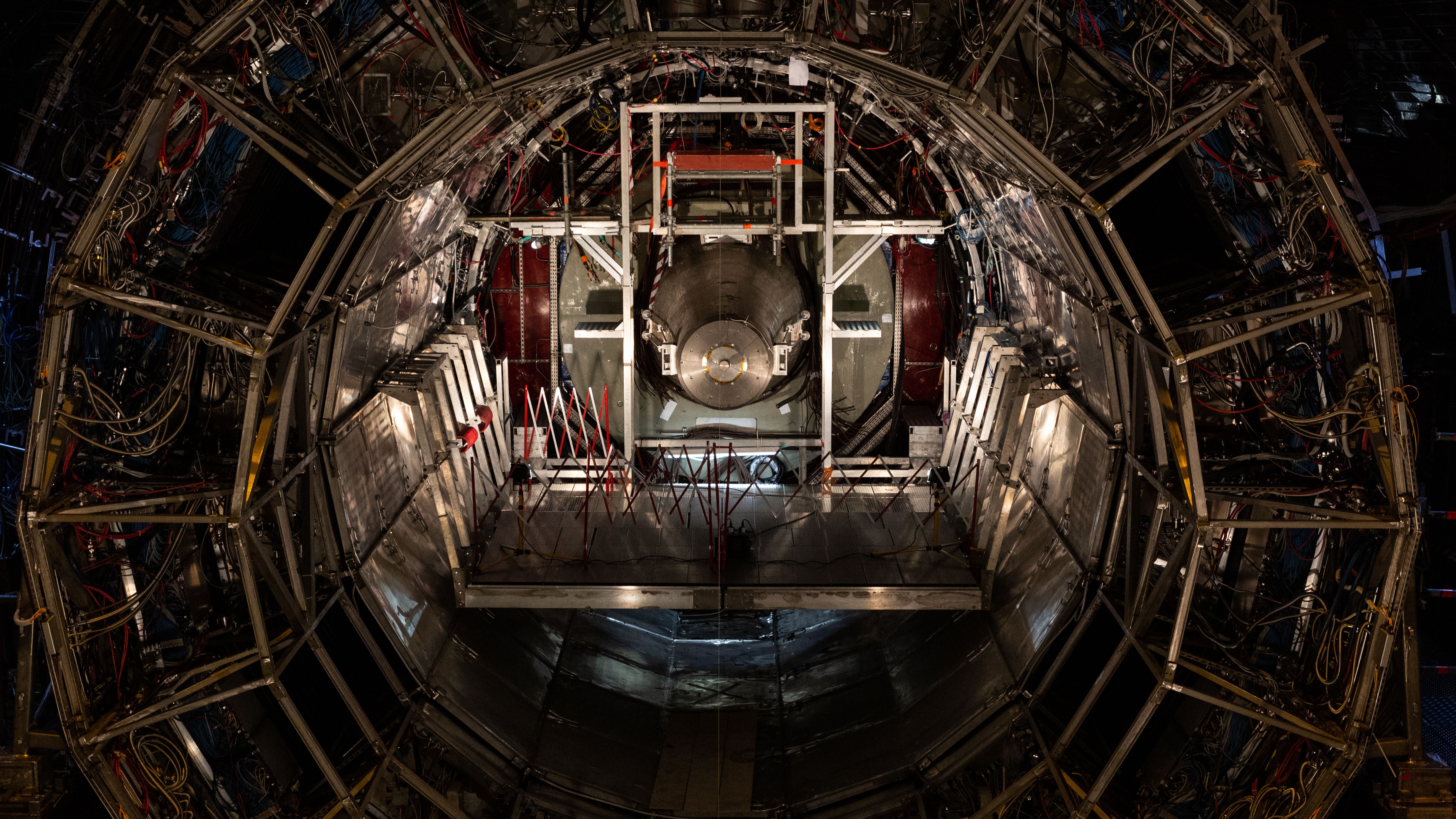

The scientists found that they could improve chatbots' short-term memory by changing how the key-value cache (the chatbot's short-term storage) stores and replaces tokens, where one token is a chunk of input text. The scientists dubbed their new approach "StreamingLLM" and presented their findings in a paper published Dec. 12, 2023, on the preprint server arXiv.

A chatbot's memory is limited, so it evicts the oldest tokens and replaces them with newer tokens as the conversation continues. But applying StreamingLLM to an LLM means it can retain the first four tokens and evict tokens from the fifth onward instead. This means it will still forget things, because of the nature of its limited memory, but it will remember the very first interactions.
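To make the eviction policy concrete, here is a minimal Python sketch that treats the cache as a list of token strings. It assumes a fixed cache size (the hypothetical `capacity` parameter) and compares a plain sliding window, which always evicts the oldest token, with the StreamingLLM-style policy described above, which pins the first four tokens and evicts from the fifth onward. It models only which tokens stay in the cache, not the keys and values a real model stores for them.

```python
from collections import deque


def sliding_window(tokens: list[str], capacity: int) -> list[str]:
    """Plain sliding window: once full, always evict the oldest token."""
    cache: deque[str] = deque(maxlen=capacity)  # deque drops from the left when full
    for tok in tokens:
        cache.append(tok)
    return list(cache)


def streaming_cache(tokens: list[str], capacity: int, num_sinks: int = 4) -> list[str]:
    """StreamingLLM-style policy as the article describes it (a sketch):
    the first `num_sinks` tokens are never evicted, and the remaining
    slots form a rolling window over the most recent tokens."""
    if capacity <= num_sinks:
        raise ValueError("capacity must exceed the number of pinned tokens")
    sinks: list[str] = []
    recent: deque[str] = deque(maxlen=capacity - num_sinks)
    for tok in tokens:
        if len(sinks) < num_sinks:
            sinks.append(tok)  # the very first tokens are pinned
        else:
            recent.append(tok)  # later tokens roll through the window
    return sinks + list(recent)


if __name__ == "__main__":
    stream = [f"t{i}" for i in range(1, 13)]  # t1 ... t12
    print(sliding_window(stream, capacity=6))   # ['t7', 't8', 't9', 't10', 't11', 't12']
    print(streaming_cache(stream, capacity=6))  # ['t1', 't2', 't3', 't4', 't11', 't12']
```

With the same six slots, the plain window has lost every opening token, while the pinned version still holds them alongside the two newest ones.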

Tokens feed into an “attention map” for each conversation, with the AI chatbot forging links between tokens and determining their relevance to one another.

The order of the tokens (and whether they are labeled first, second, third and so on) also matters because they feed into an "attention map" for the active conversation. This maps out how strongly each token relates to the other tokens.
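As a rough illustration of what such a map contains, the sketch below computes causal scaled dot-product attention weights for a few tokens, using made-up two-dimensional query and key vectors (the numbers are arbitrary, not taken from any real model). Row i says how strongly token i attends to itself and to each earlier token; entries for later tokens are zero, which is one way token order shapes the map.

```python
import math


def attention_map(queries: list[list[float]], keys: list[list[float]]) -> list[list[float]]:
    """Causal scaled dot-product attention weights.

    Row i holds softmax(q_i . k_j / sqrt(d)) over j <= i; entries for
    later tokens (j > i) are 0 because a token cannot attend to the future.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        # Scores against this token and every earlier token only.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in range(i + 1)]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (len(keys) - i - 1)
        weights.append(row)
    return weights


if __name__ == "__main__":
    # Hypothetical query/key vectors for four tokens.
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
    k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
    for row in attention_map(q, k):
        print([round(w, 2) for w in row])
```

Each row is a probability distribution (it sums to 1), so the map reads as "where each token looks" in the conversation so far.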

For example, if the fifth token is evicted, you might expect the sixth token to become the new fifth token. But for StreamingLLM to work, tokens must stay encoded as they were originally. In this example, the sixth token must not be encoded as the new "fifth" token just because it is now fifth in line; it must remain encoded as the sixth token.
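A toy version of this bookkeeping, following the article's description: each cached token is stored as a hypothetical `(position, token)` pair whose position label is assigned once, when the token arrives, and is never rewritten on eviction. The second function shows the renumbering the article says must be avoided, for contrast. Real models attach positions through a positional-encoding scheme inside the network; this sketch only tracks the labels.

```python
Entry = tuple[int, str]  # (original position, token)


def evict(cache: list[Entry], slot: int) -> list[Entry]:
    """Drop the entry at list index `slot`; every surviving token keeps the
    position label it was given when it first entered the cache."""
    return cache[:slot] + cache[slot + 1:]


def evict_and_renumber(cache: list[Entry], slot: int) -> list[Entry]:
    """The alternative the article warns against: after eviction, relabel
    tokens by their new place in line (1, 2, 3, ...)."""
    remaining = cache[:slot] + cache[slot + 1:]
    return [(new_pos, tok) for new_pos, (_, tok) in enumerate(remaining, start=1)]


if __name__ == "__main__":
    cache = [(pos, f"t{pos}") for pos in range(1, 8)]  # positions 1..7
    # List index 4 (zero-based) is the fifth token.
    print(evict(cache, 4))               # t6 still labeled 6
    print(evict_and_renumber(cache, 4))  # t6 relabeled 5
```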

These two changes mean a chatbot performs just as effectively beyond 4 million words as it did before, the scientists said in their paper. It's also 22 times faster than another short-term memory method that avoids performance crashing by constantly recomputing part of the earlier conversation.
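Why constant recomputation is slow can be seen with a back-of-the-envelope count. The sketch below tallies query-key dot products under a deliberately simplified cost model (an assumption that ignores layers, heads and every cost except attention scores): a sliding window with recomputation re-encodes all `window` tokens from scratch at every step, while a cached approach scores only the one new token against the entries already stored. In this model the ratio works out to (window + 1) / 2; it is not meant to reproduce the measured 22-fold speedup, which reflects real models and hardware.

```python
def recompute_scores(window: int, steps: int) -> int:
    """Sliding window with recomputation: every step re-encodes the whole
    window, and under causal attention token i scores against i tokens,
    giving 1 + 2 + ... + window = window * (window + 1) / 2 per step."""
    return steps * window * (window + 1) // 2


def cached_scores(window: int, steps: int) -> int:
    """Cached keys/values: each step scores only the new token against the
    `window` entries held in the cache."""
    return steps * window


if __name__ == "__main__":
    for w in (256, 1024, 4096):
        ratio = recompute_scores(w, 1) / cached_scores(w, 1)
        print(f"window={w}: recompute/cached = {ratio:.1f}x")
```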

"Now, with this method, we can persistently deploy these large language models. By making a chatbot that we can always chat with, and that can always respond to us based on our recent conversation, we could use these chatbots in some new applications," said study lead author Guangxuan Xiao, an electrical engineering and computer science graduate student at MIT, in a statement.

StreamingLLM has already been incorporated into Nvidia's open-source LLM optimization library, TensorRT-LLM, which developers use as a foundation for their own AI models. The researchers also plan to improve StreamingLLM by designing it to find and reincorporate tokens that have been evicted if they're needed again.

ChatGPT will never forget

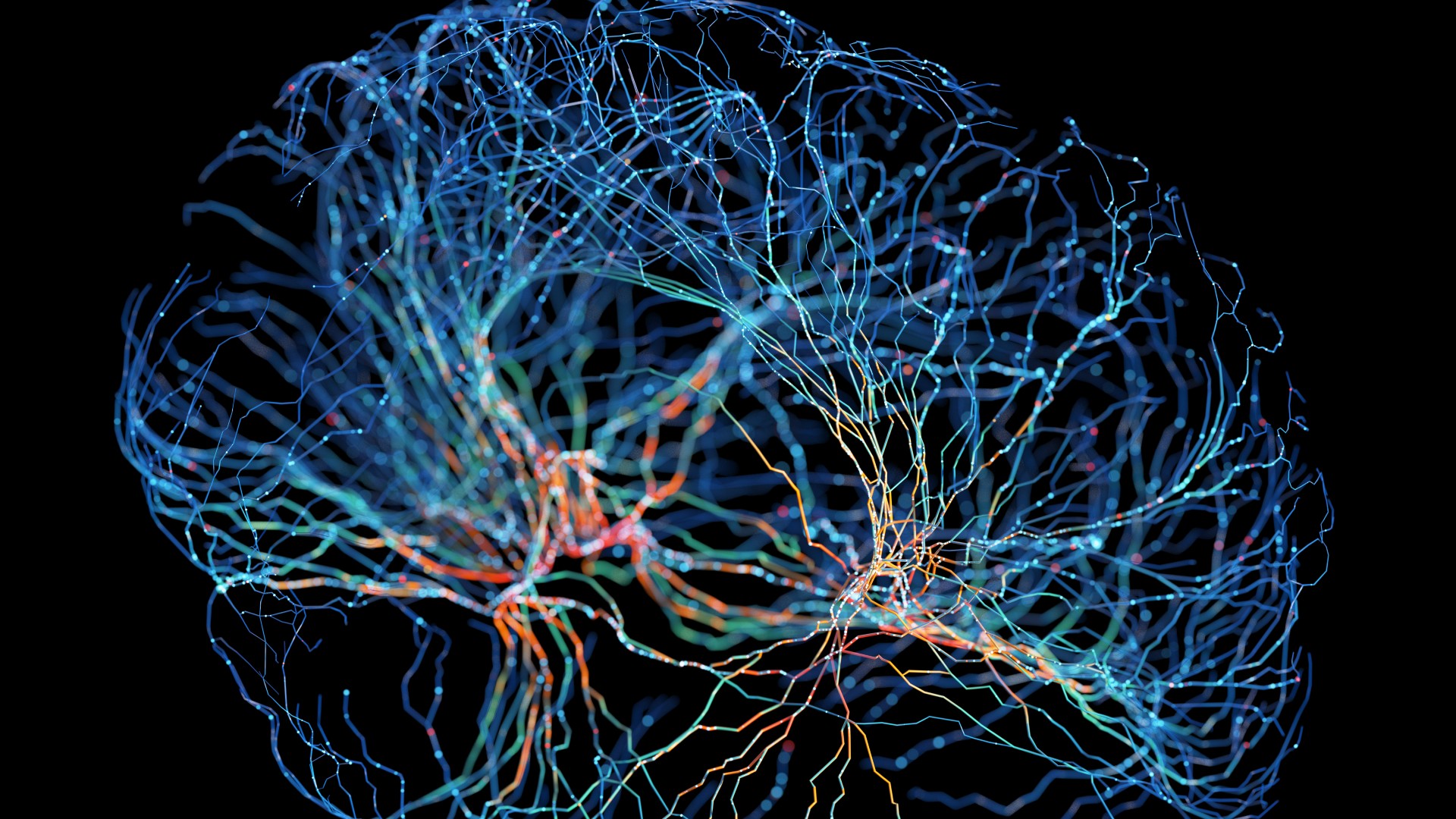

OpenAI is also testing a method to improve ChatGPT's long-term memory, so that users can save conversations and effectively build a working relationship with the AI chatbot.

When chatting with the LLM, users can ask ChatGPT to remember something specific or grant it the autonomy to remember parts of the conversation that it deems appropriate to store for later. These memories are not linked to specific conversations, so deleting a chat does not erase memories; the memory itself must be deleted in a separate interface. Unless these are manually deleted, starting a new chat will pre-load ChatGPT with previously saved memories.
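The structure described here can be modeled in a few lines. This is a hypothetical sketch, not OpenAI's implementation: the `MemoryStore` and `ChatApp` names are invented for illustration, and how ChatGPT actually feeds memories to the model isn't detailed in the statement. What it shows is the separation: memories live in their own store, so deleting a chat leaves them intact, and each new chat starts with whatever is currently saved.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Saved memories, kept apart from any individual chat (hypothetical)."""
    items: list[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        if fact not in self.items:
            self.items.append(fact)

    def forget(self, fact: str) -> None:
        # Deleting a memory happens here, not by deleting a chat.
        if fact in self.items:
            self.items.remove(fact)

    def clear(self) -> None:
        # Wipe every saved memory at once.
        self.items.clear()


@dataclass
class ChatApp:
    memory: MemoryStore = field(default_factory=MemoryStore)
    chats: dict[int, list[str]] = field(default_factory=dict)
    next_id: int = 1

    def new_chat(self) -> int:
        """Start a chat pre-loaded with the currently saved memories."""
        chat_id = self.next_id
        self.next_id += 1
        self.chats[chat_id] = [f"[memory] {m}" for m in self.memory.items]
        return chat_id

    def delete_chat(self, chat_id: int) -> None:
        # Removes the transcript only; the memory store is untouched.
        del self.chats[chat_id]


if __name__ == "__main__":
    app = ChatApp()
    first = app.new_chat()
    app.memory.remember("Prefers 50-minute lessons")
    app.delete_chat(first)
    second = app.new_chat()
    print(app.chats[second])  # ['[memory] Prefers 50-minute lessons']
```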

OpenAI provided several examples of how this would be useful. In one example, the chatbot remembers that a kindergarten teacher with 25 students prefers 50-minute lessons with follow-up activities, and recalls this information when helping them create a lesson plan. In another, somebody tells ChatGPT their toddler loves jellyfish, and the AI tool remembers this when designing a birthday card for them.

The company has rolled out the new memory features to a small portion of ChatGPT users, representatives said in a statement on Feb. 13, ahead of a planned broader rollout to all users.

OpenAI will use information from memories to improve its models, company representatives said in the statement. They added, however, that scientists are taking steps to evaluate and mitigate biases and to prevent ChatGPT from remembering sensitive information like health details unless a user explicitly asks it to. Users with memory access can also use a "temporary chat" in which memory is deactivated entirely.
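A hypothetical sketch of the two safeguards mentioned here, a temporary chat that neither loads nor saves memories and a check that skips sensitive details unless the user explicitly asks. The `Session` class and the keyword list are invented placeholders; OpenAI hasn't described how ChatGPT decides something is sensitive, and a real system would not rely on a short list of words.

```python
from dataclasses import dataclass

# Toy stand-in for sensitive-topic detection (assumption; the real mechanism is not public).
SENSITIVE_TERMS = ("diagnosis", "medication", "blood pressure")


def looks_sensitive(text: str) -> bool:
    lowered = text.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)


@dataclass
class Session:
    saved: list[str]         # memories shared across chats
    temporary: bool = False  # "temporary chat": memory switched off entirely

    def context(self) -> list[str]:
        """Memories loaded into this chat (none in a temporary chat)."""
        return [] if self.temporary else list(self.saved)

    def maybe_remember(self, text: str, explicitly_asked: bool = False) -> bool:
        """Store `text` unless memory is off, or it looks sensitive and the
        user didn't ask for it to be remembered. Returns True if stored."""
        if self.temporary:
            return False
        if looks_sensitive(text) and not explicitly_asked:
            return False
        self.saved.append(text)
        return True


if __name__ == "__main__":
    memories: list[str] = []
    chat = Session(memories)
    chat.maybe_remember("Toddler loves jellyfish")                        # stored
    chat.maybe_remember("Started a new medication")                       # skipped
    chat.maybe_remember("Started a new medication", explicitly_asked=True)  # stored
    print(Session(memories, temporary=True).context())  # []
```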