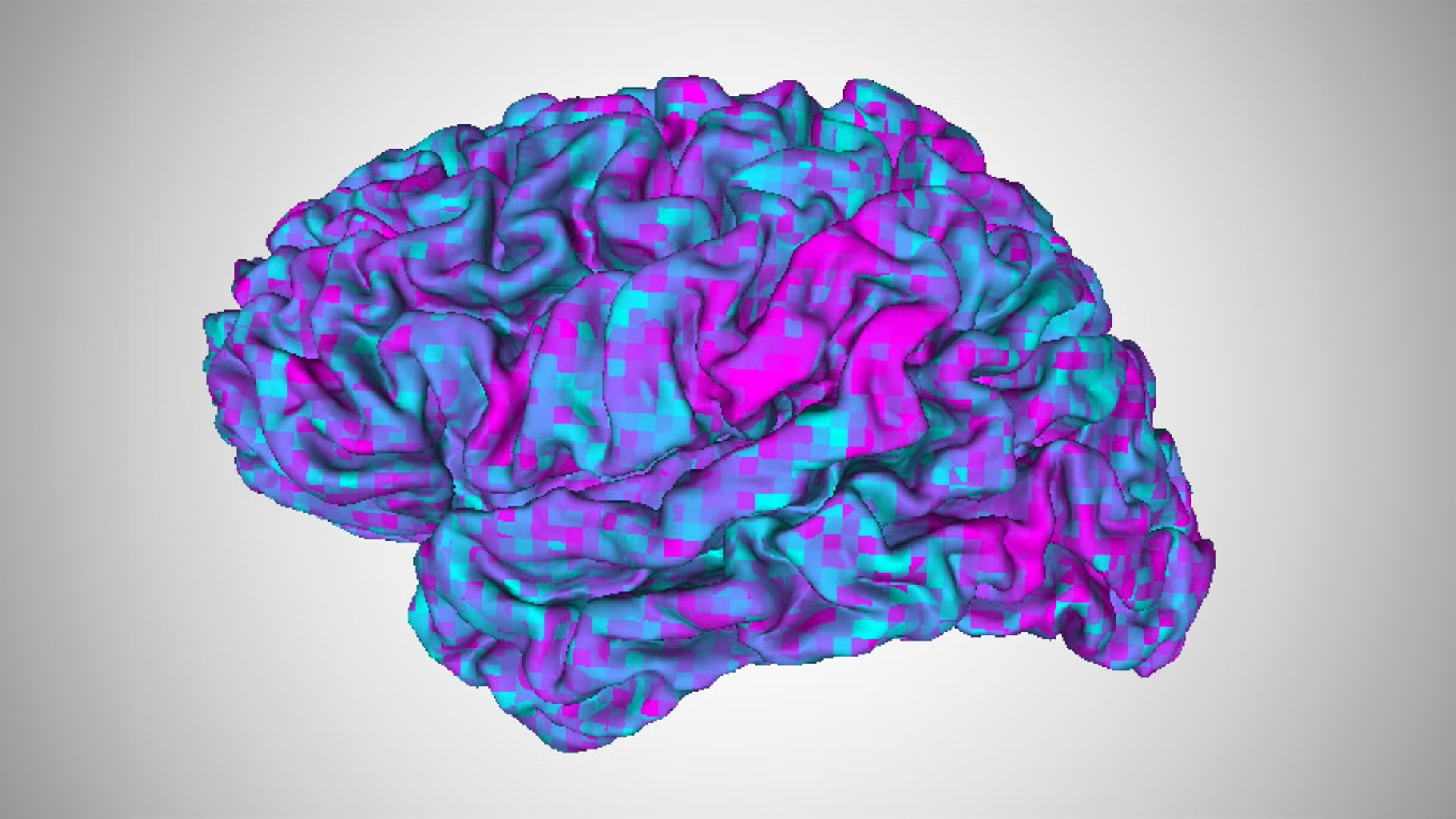

Scientists have made new improvements to a "brain decoder" that uses artificial intelligence (AI) to convert thoughts into text.

Their new converter algorithm can quickly train an existing decoder on another person’s brain, the team reported in a new study. The findings could one day support people with aphasia, a brain disorder that affects a person’s ability to communicate, the scientists said.

A team of researchers has developed an algorithm that lets an AI-powered ‘brain decoder’ trained on one person translate another’s thoughts with minimal training.

A brain decoder uses machine learning to translate a person’s thoughts into text, based on their brain’s responses to stories they’ve listened to. However, past iterations of the decoder required participants to listen to stories inside an MRI machine for many hours, and these decoders worked only for the individuals they were trained on.

"People with aphasia oftentimes have some trouble understanding language as well as producing language," said study co-author Alexander Huth, a computational neuroscientist at the University of Texas at Austin (UT Austin). "So if that’s the case, then we might not be able to build models for their brain at all by watching how their brain responds to stories they listen to."

In the new research, published Feb. 6 in the journal Current Biology, Huth and co-author Jerry Tang, a graduate student at UT Austin, investigated how they might overcome this limitation. "In this study, we were asking, can we do things differently?" he said. "Can we essentially transfer a decoder that we built for one person’s brain to another person’s brain?"

The researchers first trained the brain decoder on a few reference participants the long way, by collecting functional MRI data while the participants listened to 10 hours of radio stories.

Then, they trained two converter algorithms on the reference participants and on a different set of "goal" participants: one using data collected while the participants spent 70 minutes listening to radio stories, and the other while they spent 70 minutes watching silent Pixar short films unrelated to the radio stories.

Using a technique called functional alignment, the team mapped out how the reference and goal participants’ brains responded to the same audio or film stories. They used that information to train the decoder to work with the goal participants’ brains, without needing to collect multiple hours of training data.
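To give a rough sense of what functional alignment involves, here is a minimal Python sketch. It is not the study’s actual method, which the article does not describe in mathematical detail. It assumes the alignment can be approximated by a ridge regression that maps a goal participant’s responses onto a reference participant’s responses to the same stimulus, and it uses synthetic data in place of real brain scans. All names (`fit_converter`, `goal_responses`, and so on) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for brain data (an assumption, not real fMRI):
# both "brains" respond to the same shared stimulus features.
n_timepoints, n_features = 200, 5
n_ref_voxels, n_goal_voxels = 30, 40

stimulus = rng.standard_normal((n_timepoints, n_features))
ref_mixing = rng.standard_normal((n_features, n_ref_voxels))
goal_mixing = rng.standard_normal((n_features, n_goal_voxels))

ref_responses = stimulus @ ref_mixing + 0.1 * rng.standard_normal(
    (n_timepoints, n_ref_voxels)
)
goal_responses = stimulus @ goal_mixing + 0.1 * rng.standard_normal(
    (n_timepoints, n_goal_voxels)
)


def fit_converter(goal, ref, alpha=1.0):
    """Ridge regression: find W so that goal @ W approximates ref."""
    gram = goal.T @ goal + alpha * np.eye(goal.shape[1])
    return np.linalg.solve(gram, goal.T @ ref)


def mean_voxel_correlation(predicted, actual):
    """Average Pearson correlation across voxels (columns)."""
    p = predicted - predicted.mean(axis=0)
    a = actual - actual.mean(axis=0)
    numerator = (p * a).sum(axis=0)
    denominator = np.sqrt((p ** 2).sum(axis=0) * (a ** 2).sum(axis=0))
    return float(np.mean(numerator / denominator))


# Fit the converter on the first 150 time points, evaluate on the rest.
train, test = slice(0, 150), slice(150, 200)
converter = fit_converter(goal_responses[train], ref_responses[train])

# Goal-participant activity expressed in the reference participant's space.
# A decoder trained on the reference participant could then be applied here.
predicted_ref = goal_responses[test] @ converter
score = mean_voxel_correlation(predicted_ref, ref_responses[test])
print(f"Mean held-out correlation: {score:.2f}")
```

In this toy setup, the converter needs only paired responses to a shared stimulus, which mirrors why the goal participants could get by with about 70 minutes of data rather than many hours.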

Next, the team tested the decoders using a short story that none of the participants had heard before. Although the decoder’s predictions were slightly more accurate for the original reference participants than for the ones who used the converters, the words it predicted from each participant’s brain scans were still semantically related to those used in the test story.

For example, a section of the test story included someone discussing a job they didn’t enjoy, saying "I’m a waitress at an ice cream parlor. So, um, that’s not … I don’t know where I want to be but I know it’s not that." The decoder using the converter algorithm trained on film data predicted: "I was at a job I thought was boring. I had to take orders and I did not like them so I worked on them every day." It is not an exact match, since the decoder doesn’t read out the exact sounds people heard, Huth said, but the ideas are related.

"The really surprising and cool thing was that we can do this even not using language data," Huth told Live Science. "So we can have information that we pick up just while somebody’s watching silent videos, and then we can use that to build up this language decoder for their brain."

Using the video-based converters to transfer existing decoders to people with aphasia may help them express their thoughts, the researchers said. It also reveals some overlap between the ways humans represent ideas from language and from visual narratives in the brain.

"This study suggests that there’s some semantic representation which does not care from which modality it comes," Yukiyasu Kamitani, a computational neuroscientist at Kyoto University who was not involved in the study, told Live Science. In other words, it helps reveal how the brain represents certain concepts in the same way, even when they’re presented in different formats.

The team’s next steps are to test the converter on participants with aphasia and "build an interface that would help them generate language that they want to generate," Huth said.
