
Using artificial intelligence (AI), scientists have unraveled the intricate brain activity that unfolds during everyday conversations.

The tool could offer new insights into the neuroscience of language, and someday, it could help improve technologies designed to recognize speech or help people communicate, the researchers say.

Scientists have used a type of artificial intelligence, called a large language model, to uncover new insights into how the human brain understands and produces language.

Based on how an AI model transcribes audio into text, the researchers behind the study could map the brain activity that takes place during conversation more accurately than traditional models that encode specific features of language structure, such as phonemes (the simple sounds that make up words) and parts of speech (such as nouns, verbs and adjectives).

The model used in the study, called Whisper, instead takes audio files and their text transcripts, which are used as training data to map the audio to the text. It then uses the statistics of that mapping to "learn" to predict text from new audio files that it hasn't previously heard.


As such, Whisper works purely through these statistics, without any features of language structure encoded in its original settings. Nonetheless, in the study, the scientists showed that those structures still emerged in the model once it was trained.

The study sheds light on how these types of AI models, called large language models (LLMs), actually work. But the research team is more interested in the insight it provides into human language and cognition. Identifying similarities between how the model develops language-processing abilities and how people develop these skills may be useful for engineering devices that help people communicate.

"It's really about how we think about cognition," said lead study author Ariel Goldstein, an assistant professor at the Hebrew University of Jerusalem. The study's results suggest that "we should think about cognition through the lens of this [statistical] type of model," Goldstein told Live Science.

Unpacking cognition

The study, published March 7 in the journal Nature Human Behaviour, featured four participants with epilepsy who were already undergoing surgery to have brain-monitoring electrodes implanted for clinical reasons.

With consent, the researchers recorded all of the patients' conversations throughout their hospital stays, which ranged from several days to a week. In total, they captured over 100 hours of audio.

Each of the participants had 104 to 255 electrodes implanted to monitor their brain activity.

Most studies that use transcriptions of conversations take place in a lab under very controlled circumstances over about an hour, Goldstein said. Although this controlled environment can be useful for teasing out the roles of different variables, Goldstein and his collaborators wanted "to explore the brain activity and human behavior in real life."

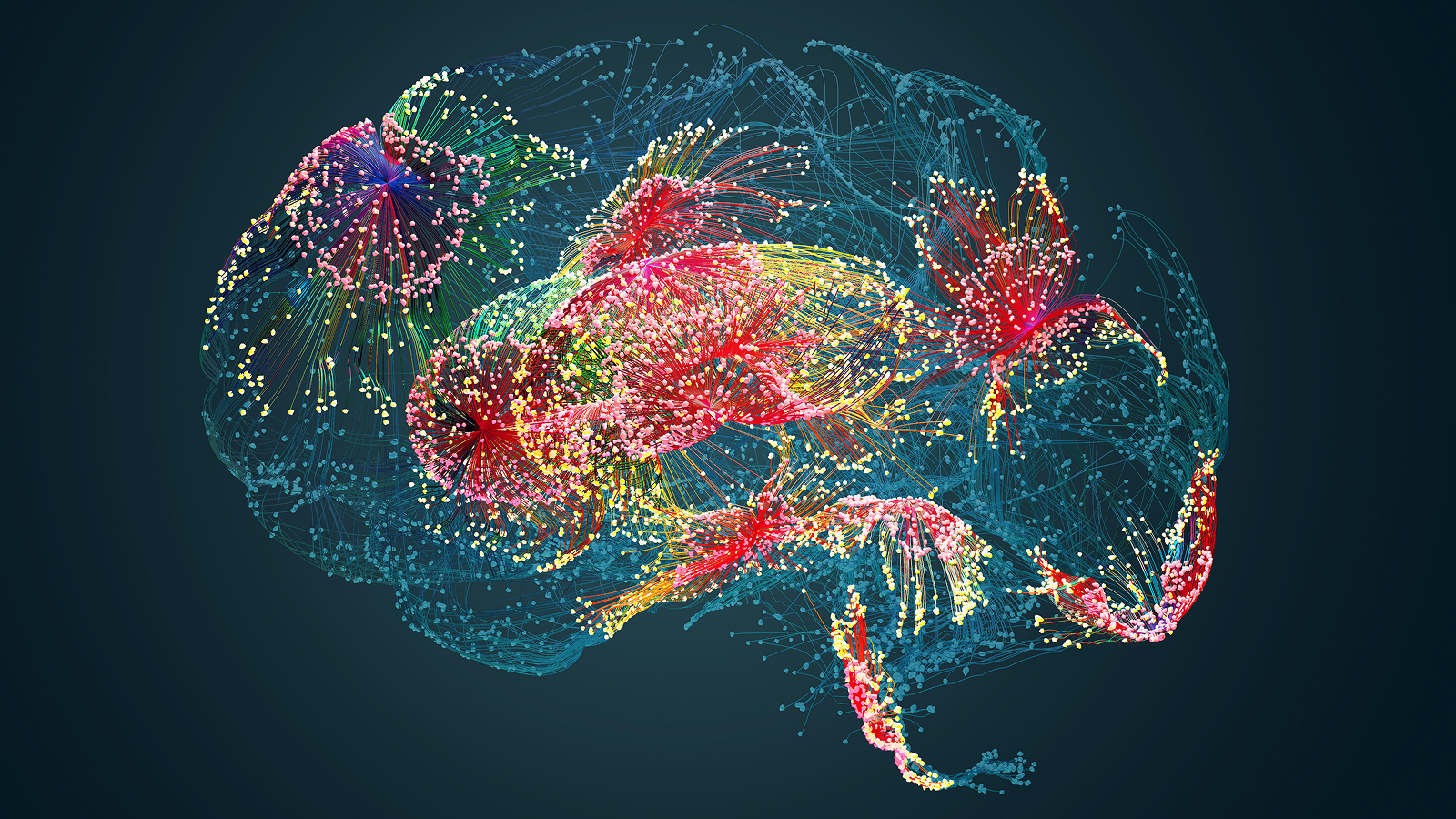

Their study revealed how different parts of the brain engage during the tasks required to produce and comprehend speech.

Goldstein explained that there is ongoing debate as to whether distinct parts of the brain kick into gear during these tasks or whether the whole organ responds more collectively. The former idea might suggest that one part of the brain processes the actual sounds that make up words, while another interprets those words' meanings, and still another handles the movements needed to speak.

In the alternative hypothesis, these different regions of the brain work in concert, taking a "distributed" approach, Goldstein said.

The researchers found that certain brain regions did tend to correlate with some tasks.

For example, areas known to be involved in processing sound, such as the superior temporal gyrus, showed more activity when handling auditory information, and areas involved in higher-level thinking, such as the inferior frontal gyrus, were more active when understanding the meaning of language.

They could also see that the areas became active sequentially.

For example, the region most responsible for hearing the words was activated before the region most responsible for interpreting them. However, the researchers also clearly saw areas activate during activities they were not known to be specialized for.

"I think it's the most comprehensive and thorough, real-life evidence for this distributed approach," Goldstein said.


Linking AI models to the inner workings of the brain

The researchers used 80% of the recorded audio and accompanying transcriptions to train Whisper so that it could then predict the transcriptions for the remaining 20% of the audio.

The team then looked at how the audio and transcriptions were represented by Whisper and mapped those representations to the brain activity recorded with the electrodes.

After this analysis, they could use the model to predict what brain activity would accompany conversations that had not been included in the training data. The model's accuracy surpassed that of a model based on features of language structure.

Although the researchers didn't program what a phoneme or word is into their model from the outset, they found those language structures were still reflected in how the model worked out its transcripts. So it had extracted those features without being told to do so.

The research is a "groundbreaking study because it demonstrates a link between the workings of a computational acoustic-to-speech-to-language model and brain function," Leonhard Schilbach, a research group leader at the Munich Centre for Neurosciences in Germany who was not involved in the work, told Live Science in an email.
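To make the held-out comparison described above more concrete, here is a minimal Python sketch of a generic linear "encoding model" scored on an 80/20 split. The article does not describe the exact regression the team used, so the ridge regression, the function names `fit_ridge` and `encoding_score`, and every number below are illustrative assumptions. Random vectors stand in for Whisper's internal representations, and the "brain" signal is synthetic and generated from those vectors, so the embedding-based model winning here is true by construction; the sketch only shows the shape of the procedure, not the study's results.

```python
import numpy as np

# Hypothetical sketch: a linear "encoding model" that predicts per-electrode
# activity from per-word feature vectors. Not the study's actual pipeline.

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def encoding_score(X, Y, train_frac=0.8, alpha=1.0):
    """Fit on the first train_frac of samples, then return the mean Pearson
    correlation between predicted and actual activity on the held-out rest."""
    n_train = int(train_frac * len(X))
    X_tr, X_te, Y_tr, Y_te = X[:n_train], X[n_train:], Y[:n_train], Y[n_train:]
    # Standardize features using training-set statistics only (no test leakage).
    mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-8
    X_tr, X_te = (X_tr - mu) / sd, (X_te - mu) / sd
    # Center targets so the ridge fit does not need an intercept column.
    y_mu = Y_tr.mean(axis=0)
    W = fit_ridge(X_tr, Y_tr - y_mu, alpha)
    Y_hat = X_te @ W + y_mu
    # One correlation per electrode, then averaged across electrodes.
    rs = [np.corrcoef(Y_hat[:, e], Y_te[:, e])[0, 1] for e in range(Y.shape[1])]
    return float(np.mean(rs))

# --- Synthetic demo (all data invented for illustration) ---
rng = np.random.default_rng(0)
n_words, n_dims, n_electrodes = 2000, 64, 10
emb = rng.normal(size=(n_words, n_dims))  # stand-in for model embeddings
true_W = rng.normal(size=(n_dims, n_electrodes)) * 0.2
brain = emb @ true_W + rng.normal(size=(n_words, n_electrodes))  # fake electrode signal

# Symbolic baseline: a 5-way category label per word (think "part of speech"),
# here derived crudely from the embedding and one-hot encoded.
labels = emb[:, :5].argmax(axis=1)
onehot = np.eye(5)[labels]

emb_r = encoding_score(emb, brain)
feat_r = encoding_score(onehot, brain)
print(f"embedding-based r = {emb_r:.2f}, feature-based r = {feat_r:.2f}")
```

The key design point is that the regression weights are fit only on the training portion, and accuracy is judged only on words the fit never saw, which mirrors the article's description of predicting brain activity for conversations excluded from the training data.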


However, he added, "Much more research is needed to investigate whether this relationship really implies similarities in the mechanisms by which language models and the brain process language."

"Comparing the brain with artificial neural networks is an important line of work," said Gašper Beguš, an associate professor in the Department of Linguistics at the University of California, Berkeley, who was not involved in the study.

"If we understand the inner workings of artificial and biological neurons and their similarities, we might be able to conduct experiments and simulations that would be impossible to conduct in our biological brain," he told Live Science by email.
