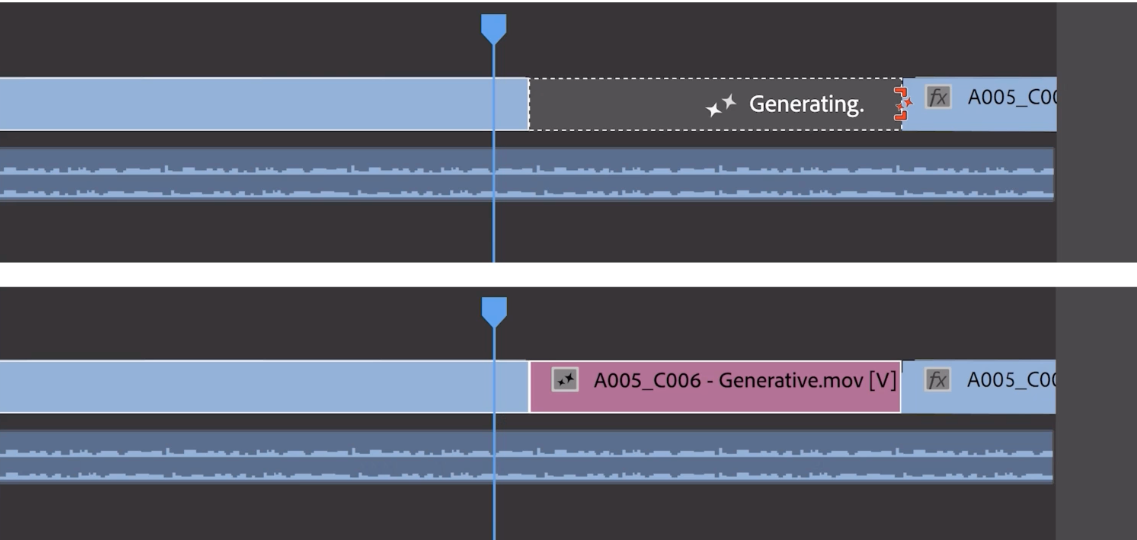

Removing objects with AI. Notice the results aren’t quite perfect. Image Credits: Adobe

initiative

EVs

Fintech

Image Credits:Adobe

Fundraising

Gadgets

bet on

Government & Policy

Hardware

Layoffs

Media & Entertainment

Meta

Microsoft

Privacy

Robotics

security system

Social

Space

startup

TikTok

Transportation

Venture

More from TechCrunch

upshot

Startup Battlefield

StrictlyVC

Podcasts

video

Partner Content

TechCrunch Brand Studio

Crunchboard

get through Us

Adobe says it’s building an AI model to generate video. But it’s not revealing when this model will launch, exactly, or much about it besides the fact that it exists.

Offered as an answer of sorts to OpenAI’s Sora, Google’s Imagen 2 and models from the growing number of startups in the nascent generative AI video space, Adobe’s model (a part of the company’s expanding Firefly family of generative AI products) will make its way into Premiere Pro, Adobe’s flagship video editing suite, sometime later this year, Adobe says.

Like many generative AI video tools today, Adobe’s model creates footage from scratch (from either a prompt or reference images), and it powers three new features in Premiere Pro: object addition, object removal and generative extend.

They’re pretty self-explanatory.

Object addition lets users select a segment of a video clip (the upper third, say, or the lower-left corner) and enter a prompt to insert objects within that segment. In a briefing with TechCrunch, an Adobe spokesperson showed a still of a real-world briefcase filled with diamonds generated by Adobe’s model.

Object removal removes objects from clips, like boom mics or coffee cups in the background of a shot.

As for generative extend, it adds a few frames to the beginning or end of a clip (unfortunately, Adobe wouldn’t say how many frames). Generative extend isn’t meant to create whole scenes, but rather to add buffer frames to sync up with a soundtrack or hold on to a shot for an extra beat, for instance to add emotional heft.

To address the fears of deepfakes that inevitably crop up around generative AI tools such as these, Adobe says it’s bringing Content Credentials (metadata to identify AI-generated media) to Premiere. Content Credentials, a media provenance standard that Adobe backs through its Content Authenticity Initiative, were already in Photoshop and a component of Adobe’s image-generating Firefly models. In Premiere, they’ll indicate not only which content was AI-generated but also which AI model was used to generate it.

Last week, Bloomberg, citing sources familiar with the matter, reported that Adobe is paying photographers and artists on its stock media platform, Adobe Stock, up to $120 for submitting short video clips to train its video generation model. The pay is said to range from around $2.62 per minute of video to around $7.25 per minute depending on the submission, with higher-quality footage commanding correspondingly higher rates.

That would be a departure from Adobe’s current arrangement with the Adobe Stock artists and photographers whose work it’s using to train its image generation models. The company pays those contributors an annual bonus, not a one-time fee, depending on the volume of content they have in Stock and how it’s being used, albeit a bonus that’s subject to an opaque formula and not guaranteed from year to year.

Bloomberg’s reporting, if accurate, depicts an approach in stark contrast to that of generative AI video rivals like OpenAI, which is said to have scraped publicly available web data, including videos from YouTube, to train its models. YouTube’s CEO, Neal Mohan, recently said that using YouTube videos to train OpenAI’s text-to-video generator would be an infraction of the platform’s terms of service, highlighting the legal tenuousness of OpenAI’s and others’ fair use argument.

Companies, including OpenAI, are being sued over allegations that they’re violating IP law by training their AI on copyrighted content without providing the owners credit or pay. Adobe seems intent on avoiding this fate, as are its sometime generative AI competitors Shutterstock and Getty Images (which also have arrangements to license model training data), and, with its IP indemnity policy, on positioning itself as a verifiably “safe” alternative for enterprise customers.

On the subject of payment, Adobe isn’t saying how much it’ll cost customers to use the forthcoming video generation features in Premiere; presumably, pricing is still being hashed out. But the company did reveal that the payment scheme will follow the generative credits system established with its early Firefly models.

For customers with a paid subscription to Adobe Creative Cloud, generative credits renew at the beginning of each month, with allotments ranging from 25 to 1,000 per month depending on the plan. More complex workloads (e.g. higher-resolution generated images or multiple-image generations) require more credits, as a general rule.

The big question in my mind is: will Adobe’s AI-powered video features be worth whatever they end up costing?

The Firefly image generation models so far have been widely derided as underwhelming and flawed compared to Midjourney, OpenAI’s DALL-E 3 and other competing tools. The lack of a release time frame for the video model doesn’t instill a lot of confidence that it’ll avoid the same fate. Neither does the fact that Adobe declined to show me live demos of object addition, object removal and generative extend, insisting instead on a prerecorded sizzle reel.

Perhaps to hedge its bets, Adobe says that it’s in talks with third-party vendors about integrating their video generation models into Premiere as well, to power tools like generative extend and more.

One of those vendors is OpenAI .

Adobe says it’s collaborating with OpenAI on ways to bring Sora into the Premiere workflow. (An OpenAI tie-up makes sense given the AI startup’s recent overtures to Hollywood; tellingly, OpenAI CTO Mira Murati will be attending the Cannes Film Festival this year.) Other early partners include Pika, a startup building AI tools to generate and edit videos, and Runway, which was one of the first vendors to market with a generative video model.

An Adobe spokesperson said the company would be open to working with others in the future.

Now, to be crystal clear, these integrations are more of a thought experiment than a working product at present. Adobe stressed to me repeatedly that they’re in “early preview” and “research” rather than something customers can expect to play with anytime soon.

And that, I’d say, captures the overall tone of Adobe’s generative video presser.

Adobe is clearly trying to signal with these announcements that it’s thinking about generative video, if only in a preliminary sense. It would be foolish not to: to be caught flat-footed in the generative AI race is to risk losing out on a valuable potential new revenue stream, assuming the economics eventually work out in Adobe’s favor. (AI models are costly to train, run and serve, after all.)

But what it’s showing (concepts) isn’t super compelling, candidly. With Sora in the wild and surely more innovations coming down the pipeline, the company has much to prove.