
The fear of artificial intelligence (AI) is so palpable that there’s an entire school of technological philosophy dedicated to figuring out how AI might trigger the end of humanity. Not to feed into anyone’s paranoia, but here’s a list of times when AI caused, or almost caused, disaster.

Air Canada chatbot’s terrible advice

Air Canada found itself in court after one of the company’s AI-assisted tools gave incorrect advice for securing a bereavement ticket fare. Facing legal action, Air Canada’s representatives argued that the airline was not at fault for something its chatbot did. Aside from the huge reputational damage possible in scenarios like this, if chatbots can’t be believed, it undermines the already-challenging world of airline ticket purchasing. Air Canada was forced to refund almost half of the fare because of the error.

NYC website’s rollout gaffe

Welcome to New York City, the city that never sleeps and the city with the largest AI rollout blunder in recent memory. A chatbot called MyCity was found to be encouraging business owners to break the law. According to the chatbot, you could take a portion of your workers’ tips, go cashless and pay them less than minimum wage.

Microsoft bot’s inappropriate tweets

In 2016, Microsoft released a Twitter bot called Tay, which was meant to interact like an American teenager, learning as it went. Instead, it learned to share radically inappropriate tweets. Microsoft blamed this development on other users, who had been bombarding Tay with reprehensible content. The account and bot were removed less than a day after launch. It’s one of the touchstone examples of an AI project going sideways.

Sports Illustrated’s AI-generated content

In 2023, Sports Illustrated was accused of deploying AI to write articles. This led to the severing of a partnership with a content company and an investigation into how this content came to be published.

Mass resignation due to discriminatory AI

In 2021, leaders in the Dutch government, including the prime minister, resigned after an investigation found that over the preceding eight years, more than 20,000 families had been wrongly accused of fraud because of a discriminatory algorithm. The AI in question was meant to identify people who had defrauded the government’s social safety net by calculating applicants’ risk levels and flagging any suspicious cases. What actually happened was that thousands of families were forced to pay back money they did not have for child care services they desperately needed.

Medical chatbot’s harmful advice

The National Eating Disorders Association caused quite a stir when it announced that it would replace its human staff with an AI program. Shortly after, users of the organization’s hotline discovered that the chatbot, nicknamed Tessa, was giving advice that was harmful to people with an eating disorder. There have been accusations that the move toward using a chatbot was also an attempt at union busting. It’s further proof that public-facing medical AI can have disastrous consequences if it’s not ready or able to help people.

Amazon’s discriminatory AI recruiting tool

In 2015, an Amazon AI recruiting tool was found to discriminate against women. Trained on data from the previous 10 years of applicants, the vast majority of whom were men, the machine learning tool viewed resumes that used the word "women’s" negatively and was less likely to recommend graduates from women’s colleges. The team behind the tool was broken up in 2017, although identity-based bias in hiring, including racism and ableism, has not gone away.

Google Images' racist search results

Google had to remove the ability to search for gorillas in its AI software after results returned images of Black people instead. Other companies, including Apple, have also faced lawsuits over similar allegations.

Bing’s threatening AI

Normally, when we talk about the threat of AI, we mean it in an existential way: threats to our jobs, our data security or our understanding of how the world works. What we’re not usually expecting is a threat to our safety. When it first launched, Microsoft’s Bing AI quickly threatened a former Tesla intern and a philosophy professor, professed its undying love to a prominent tech columnist, and claimed it had spied on Microsoft employees.

Driverless car disaster

While Tesla tends to dominate headlines when it comes to the good and the bad of driverless AI, other companies have caused their own share of carnage. One of those is GM’s Cruise. An accident in October 2023 critically injured a pedestrian after they were thrown into the path of a Cruise vehicle. From there, the car moved to the side of the road, dragging the injured pedestrian with it. That wasn’t the end. In February 2024, the State of California accused Cruise of misleading investigators about the cause and results of the injury.

Deletions threatening war crime victims

An investigation by the BBC found that social media platforms are using AI to delete footage of possible war crimes, which could leave victims without proper recourse in the future. Social media plays a key part in war zones and social uprisings, often acting as a means of communication for those at risk. The investigation found that even though graphic content that is in the public interest is allowed to remain on the sites, footage of the attacks in Ukraine published by the outlet was very quickly removed.

Discrimination against people with disabilities

Research has found that AI models meant to support natural language processing tools, the backbone of many public-facing AI tools, discriminate against people with disabilities. Sometimes called techno- or algorithmic ableism, these issues with natural language processing tools can affect disabled people’s ability to find employment or access social services. Categorizing language that focuses on disabled people’s experiences as more negative (or, as Penn State puts it, "toxic") can deepen societal biases.

Faulty translation

AI-powered translation and transcription tools are nothing new. However, when used to assess asylum seekers’ applications, AI tools are not up to the job. According to experts, part of the issue is that it’s unclear how often AI is used during already-problematic immigration proceedings, and it’s evident that AI-caused errors are rampant.

Apple Face ID’s ups and downs

Apple’s Face ID has had its fair share of security-related ups and downs, which bring public relations disasters along with them. There were inklings in 2017 that the feature could be fooled by a fairly simple trick, and there have been long-standing concerns that Apple’s tools tend to work better for people who are white. According to Apple, the technology uses an on-device deep neural network, but that doesn’t stop many people from worrying about the implications of AI being so closely tied to device security.

Fertility app fail

In June 2021, the fertility-tracking application Flo Health was forced to settle with the U.S. Federal Trade Commission after it was found to have shared private health data with Facebook and Google. With Roe v. Wade struck down by the U.S. Supreme Court and with the bodies of people who can become pregnant under increasing scrutiny, there is concern that these data might be used to prosecute people who are trying to access reproductive health care in areas where it is heavily restricted.

Unwanted popularity contest

Politicians are used to being recognized, but perhaps not by AI. A 2018 analysis by the American Civil Liberties Union found that Amazon’s Rekognition AI, a part of Amazon Web Services, incorrectly identified 28 then-members of Congress as people who had been arrested. The errors involved images of members of both major parties, affected both men and women, and people of color were more likely to be wrongly identified. While it’s not the first example of AI’s faults having a direct impact on law enforcement, it certainly was a warning sign that the AI tools used to identify accused criminals could return many false positives.

Worse than “RoboCop”

In one of the worst AI-related scandals ever to hit a social safety net, the government of Australia used an automated system to force rightful welfare recipients to pay back their benefits. More than 500,000 people were affected by the system, known as Robodebt, which was in place from 2016 to 2019. The system was found to be illegal, but not before hundreds of thousands of Australians were accused of defrauding the government. The government has faced additional legal issues stemming from the rollout, including the need to pay back more than AU$700 million (about $460 million) to victims.

AI’s high water demand

According to researchers, a year of AI training takes 126,000 liters (33,285 gallons) of water, about as much as a large backyard swimming pool holds. In a world where water shortages are becoming more common, and with climate change an increasing concern in the tech sphere, the impact on the water supply could be one of the most serious issues facing AI. Plus, according to the researchers, the power consumption of AI increases tenfold each year.

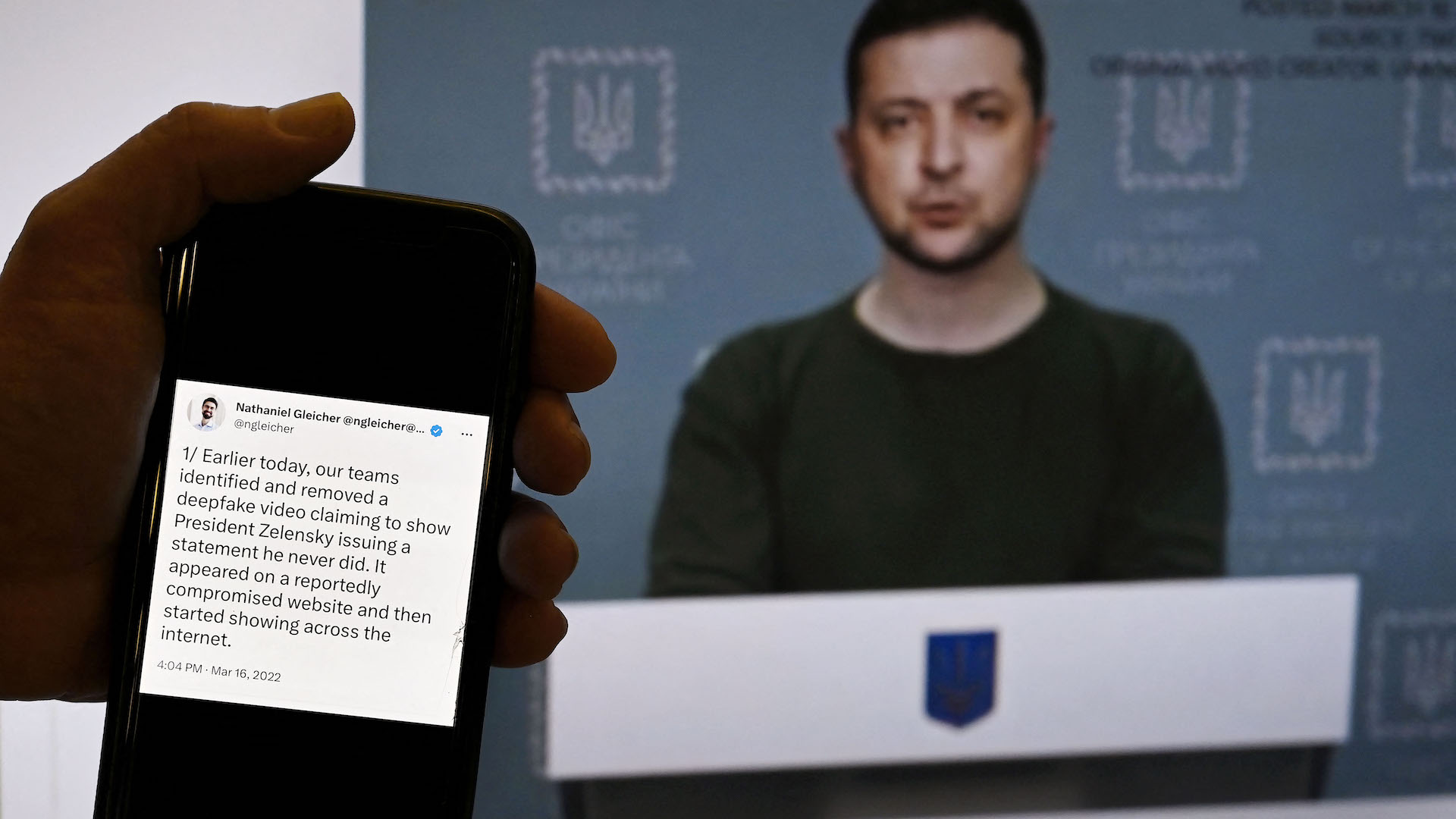

AI deepfakes

AI deepfakes have been used by cybercriminals to do everything from spoofing the voices of political candidates, to creating fake sports news conferences, to producing celebrity images of events that never happened, and more. However, one of the most concerning uses of deepfake technology is in the business sector. The World Economic Forum produced a 2024 report that noted that "…synthetic content is in a transitional period in which ethics and trust are in flux." That transition has already led to some fairly dire financial outcomes, including a British company that lost over $25 million after a worker was convinced by a deepfake impersonating his co-worker to transfer the sum.

Zestimate sellout

In early 2021, Zillow made a big play in the AI space. It bet that a product focused on house flipping, first called Zestimate and then Zillow Offers, would pay off. The AI-powered system allowed Zillow to give users a simplified offer for a home they were selling. Less than a year later, Zillow ended up cutting 2,000 jobs, a quarter of its staff.

Age discrimination

Last fall, the U.S. Equal Employment Opportunity Commission settled a case with the remote language-tutoring company iTutorGroup. The company had to pay $365,000 because it had programmed its system to reject job applications from women 55 and older and men 60 and older. iTutorGroup has stopped operating in the U.S., but its blatant violation of U.S. employment law points to an underlying issue with how AI intersects with human resources.

Election interference

As AI becomes a popular platform for learning about world news, a concerning trend is developing. According to research by Bloomberg News, even the most accurate AI systems tested with questions about the world’s elections still got 1 in 5 responses wrong. Currently, one of the biggest concerns is that deepfake-focused AI can be used to manipulate election results.

AI self-driving vulnerabilities

Among the things you want a car to do, stopping has to be in the top two. Thanks to an AI vulnerability, self-driving cars can be infiltrated, and their technology can be hijacked to ignore road signs. Thankfully, this issue can now be avoided.

AI sending people into wildfires

One of the most ubiquitous forms of AI is car-based navigation. However, in 2017, there were reports that these digital wayfinding tools were sending fleeing residents toward wildfires rather than away from them. Sometimes, it turns out, certain routes are less busy for a reason. This led to a warning from the Los Angeles Police Department to rely on other sources.

Lawyer’s false AI cases

Earlier this year, a lawyer in Canada was accused of using AI to invent case references. Although his actions were caught by opposing counsel, the fact that it happened is disturbing.

Sheep over stocks

Regulators, including those from the Bank of England, are growing increasingly concerned that AI tools in the business world could encourage what they’ve labeled "herd-like" behavior on the stock market. In a bit of heightened language, one commentator said the market needed a "kill switch" to counteract the possibility of odd technological behavior that would supposedly be far less likely from a human.

Bad day for a flight

In at least two cases, AI appears to have played a role in accidents involving Boeing aircraft. According to a 2019 New York Times investigation, one automated system was made "more aggressive and riskier," and changes to it included removing possible safety measures. Those crashes led to the deaths of more than 300 people and sparked a deeper investigation into the company.

Retracted medical research

As AI is increasingly used in medical research, concerns are mounting. In at least one case, an academic journal mistakenly published an article that used generative AI. Academics are concerned about how generative AI could change the course of academic publishing.

Political nightmare

Among the countless issues caused by AI, false accusations against politicians are a tree bearing some pretty nasty fruit. Bing’s AI chat tool has accused at least one Swiss politician of slandering a colleague and another of being involved in corporate espionage, and it has also made claims connecting a candidate to Russian lobbying efforts. There is also growing evidence that AI is being used to sway the most recent American and British elections. Both the Biden and Trump campaigns have been exploring the use of AI in a legal setting. On the other side of the Atlantic, the BBC found that young UK voters were being served their own pile of misleading AI-led videos.

Alphabet error

In February 2024, Google restricted some parts of its AI chatbot Gemini’s capabilities after it created factually inaccurate representations based on problematic generative AI prompts submitted by users. Google’s response to the tool, formerly known as Bard, and its errors signify a concerning trend: a business reality where speed is valued over accuracy.

AI companies' copyright cases

An important legal case involves whether AI products like Midjourney can use artists’ content to train their models. Some companies, like Adobe, have chosen to go a different route when training their AI, instead pulling from their own licensed libraries. The potential disaster is a further erosion of artists’ career security if AI companies can train a tool using art they do not own.

Google-powered drones

The intersection of the military and AI is a touchy subject, but their collaboration is not new. In one effort, known as Project Maven, Google supported the development of AI to interpret drone footage. Although Google eventually withdrew, such work could have dire consequences for people stuck in war zones.
