
Artificial intelligence (AI) has been around for decades, but this year was a breakout for the spooky technology, with OpenAI's ChatGPT creating accessible, practical AI for the masses. AI, however, has a checkered history, and today's technology was preceded by a short track record of failed experiments.

For the most part, innovations in AI seem poised to improve things like medical diagnostics and scientific discovery. One AI model can, for example, detect whether you're at high risk of developing lung cancer by analyzing an X-ray scan. During COVID-19, scientists also built an algorithm that could diagnose the virus by listening to subtle differences in the sound of people's coughs. AI has also been used to design quantum physics experiments beyond what humans have conceived.

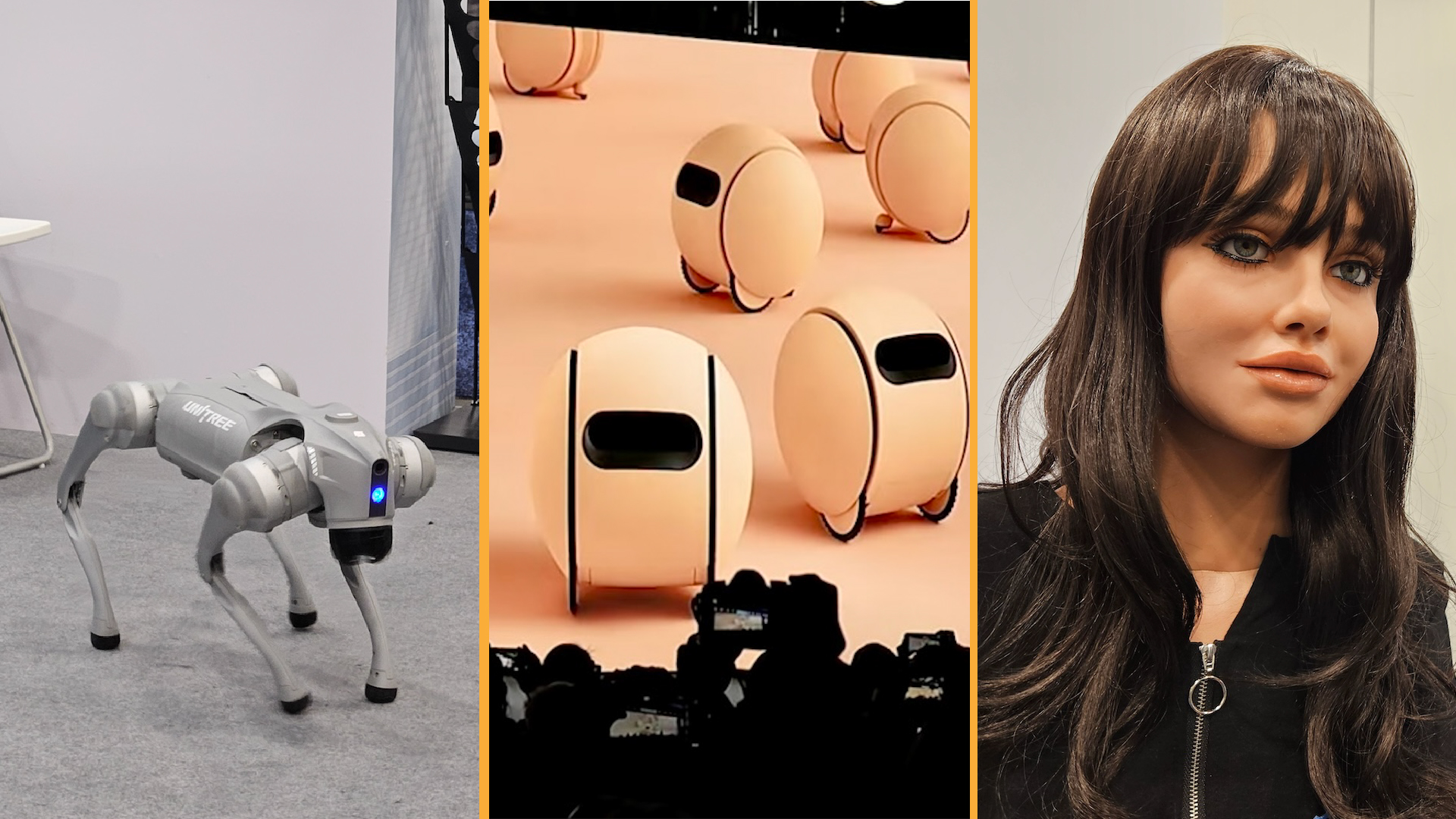

Artificial intelligence has been around for decades, but this year was a breakout for the spooky technology.

But not all the innovations are so benign. From killer drones to AI that threatens humanity's future, here are some of the scariest AI breakthroughs likely to come in 2024.

Q* — the age of Artificial General Intelligence (AGI)?

We don't know exactly why OpenAI CEO Sam Altman was dismissed and reinstated in late 2023. But amid corporate chaos at OpenAI, rumors swirled of an advanced technology that could threaten the future of humanity. That OpenAI system, called Q* (pronounced Q-star), may embody the potentially groundbreaking realization of artificial general intelligence (AGI), Reuters reported. Little is known about this mysterious system, but should reports be true, it could kick AI's capabilities up several notches.


AGI is a hypothetical tipping point, also known as the "Singularity," in which AI becomes smarter than humans. Current generations of AI still lag in areas in which humans excel, such as context-based reasoning and genuine creativity. Most, if not all, AI-generated content is just regurgitating, in some way, the data used to train it.

Little is known about artificial general intelligence, but it could boost AI’s capabilities.

But AGI could potentially perform particular jobs better than most people, scientists have said. It could also be weaponized and used, for example, to create enhanced pathogens, launch massive cyberattacks, or orchestrate mass manipulation.

The idea of AGI has long been confined to science fiction, and many scientists believe we'll never reach this point. For OpenAI to have reached this tipping point already would certainly be a shock, but not beyond the realm of possibility. We know, for example, that Sam Altman was already laying the groundwork for AGI in February 2023, outlining OpenAI's approach to AGI in a blog post. We also know experts are beginning to predict an imminent breakthrough, including Nvidia CEO Jensen Huang, who said in November that AGI is within reach in the next five years, Barron's reported. Could 2024 be the breakout year for AGI? Only time will tell.

Election-rigging hyperrealistic deepfakes

One of the most pressing cyber threats is that of deepfakes: entirely fabricated images or videos of people that might misrepresent them, incriminate them or bully them. AI deepfake technology hasn't yet been good enough to be a significant threat, but that might be about to change.

AI can now generate real-time deepfakes (live video feeds, in other words), and it is becoming so good at generating human faces that people can no longer tell the difference between what's real and what's fake. Another study, published in the journal Psychological Science on Nov. 13, unearthed the phenomenon of "hyperrealism," in which AI-generated content is more likely to be perceived as "real" than actually real content.

This would make it practically impossible for people to distinguish fact from fiction with the naked eye. Although tools could help people detect deepfakes, these aren't in the mainstream yet. Intel, for example, has built a real-time deepfake detector that works by using AI to analyze blood flow. But FakeCatcher, as it's known, has produced mixed results, according to the BBC.

AI deepfake technology has the potential to swing elections.

As generative AI matures, one scary possibility is that people could deploy deepfakes to attempt to swing elections. The Financial Times (FT) reported, for example, that Bangladesh is bracing itself for an election in January that will be disrupted by deepfakes. As the U.S. gears up for a presidential election in November 2024, there's a possibility that AI and deepfakes could shift the outcome of this critical vote. UC Berkeley is monitoring AI usage in campaigning, for example, and NBC News also reported that many states lack the laws or tools to handle any surge in AI-generated disinformation.

Mainstream AI-powered killer robots

Governments around the world are increasingly incorporating AI into tools for warfare. The U.S. government announced on Nov. 22 that 47 states had endorsed a declaration on the responsible use of AI in the military, first launched at The Hague in February. Why was such a declaration needed? Because "irresponsible" use is a real and terrifying prospect. We've seen, for example, AI drones allegedly hunting down soldiers in Libya with no human input.

AI can recognize patterns, self-learn, make predictions or generate recommendations in military contexts, and an AI arms race is already afoot. In 2024, it's likely we'll see AI used not only in weapons systems but also in logistics and decision-support systems, as well as in research and development. In 2022, for instance, AI generated 40,000 novel, hypothetical chemical weapons. Various branches of the U.S. military have ordered drones that can perform target recognition and battle tracking better than humans. Israel, too, used AI to identify targets at least 50 times faster than humans can in the latest Israel-Hamas war, according to NPR.


Governments around the world are incorporating AI into military systems.


But one of the most feared areas of development is that of lethal autonomous weapon systems (LAWS), or killer robots. Several leading scientists and technologists have warned against killer robots, including Stephen Hawking in 2015 and Elon Musk in 2017, but the technology hasn't yet materialized on a mass scale.

That said, some worrying developments suggest this year might be a breakout for killer robots. For instance, in Ukraine, Russia allegedly deployed the Zala KYB-UAV drone, which could recognize and attack targets without human intervention, according to a report from The Bulletin of the Atomic Scientists. Australia, too, has developed Ghost Shark, an autonomous submarine system that is set to be produced "at scale," according to the Australian Financial Review. The amount countries around the world are spending on AI is also an indicator, with China raising AI expenditure from a combined $11.6 million in 2010 to $141 million by 2019, according to Datenna, Reuters reported. This is because, the publication added, China is locked in a race with the U.S. to deploy LAWS. Combined, these developments suggest we're entering a new dawn of AI warfare.