Artificial intelligence (AI) has forced its way into the public consciousness thanks to the advent of powerful new AI chatbots and image generators. But the field has a long history stretching back to the dawn of computing. Given how fundamental AI could be in changing how we live in the coming years, understanding the roots of this fast-developing field is crucial. Here are 12 of the most important milestones in the history of AI.

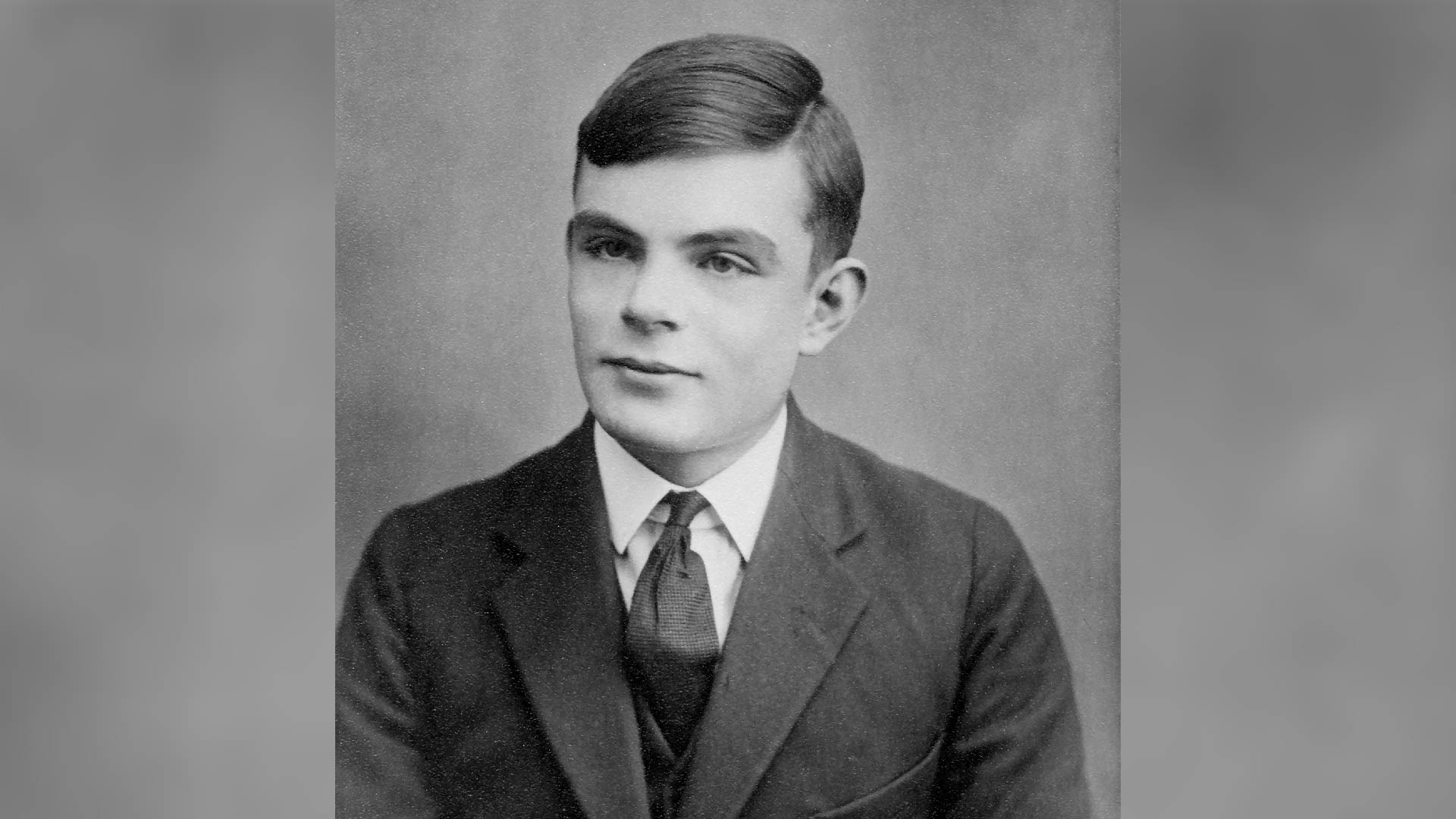

1950 — Alan Turing’s seminal AI paper

Noted British computer scientist Alan Turing published a paper titled "Computing Machinery and Intelligence," which was one of the first detailed investigations of the question "Can machines think?"

Answering this question requires you to first tackle the challenge of defining "machine" and "think." So, instead, he proposed a game: An observer would watch a conversation between a machine and a human and try to determine which was which. If they couldn’t do so reliably, the machine would win the game. While this didn’t prove a machine was "thinking," the Turing Test, as it came to be known, has been an important yardstick for AI progress ever since.

1956 — The Dartmouth workshop

AI as a scientific field can trace its roots back to the Dartmouth Summer Research Project on Artificial Intelligence, held at Dartmouth College in 1956. The participants were a who’s who of influential computer scientists, including John McCarthy, Marvin Minsky and Claude Shannon. The workshop gave the field its name: McCarthy had coined the term "artificial intelligence" in the 1955 proposal for the event, and the group spent almost two months discussing how machines might simulate learning and intelligence. The meeting kick-started serious research on AI and laid the groundwork for many of the breakthroughs that came in the following decades.

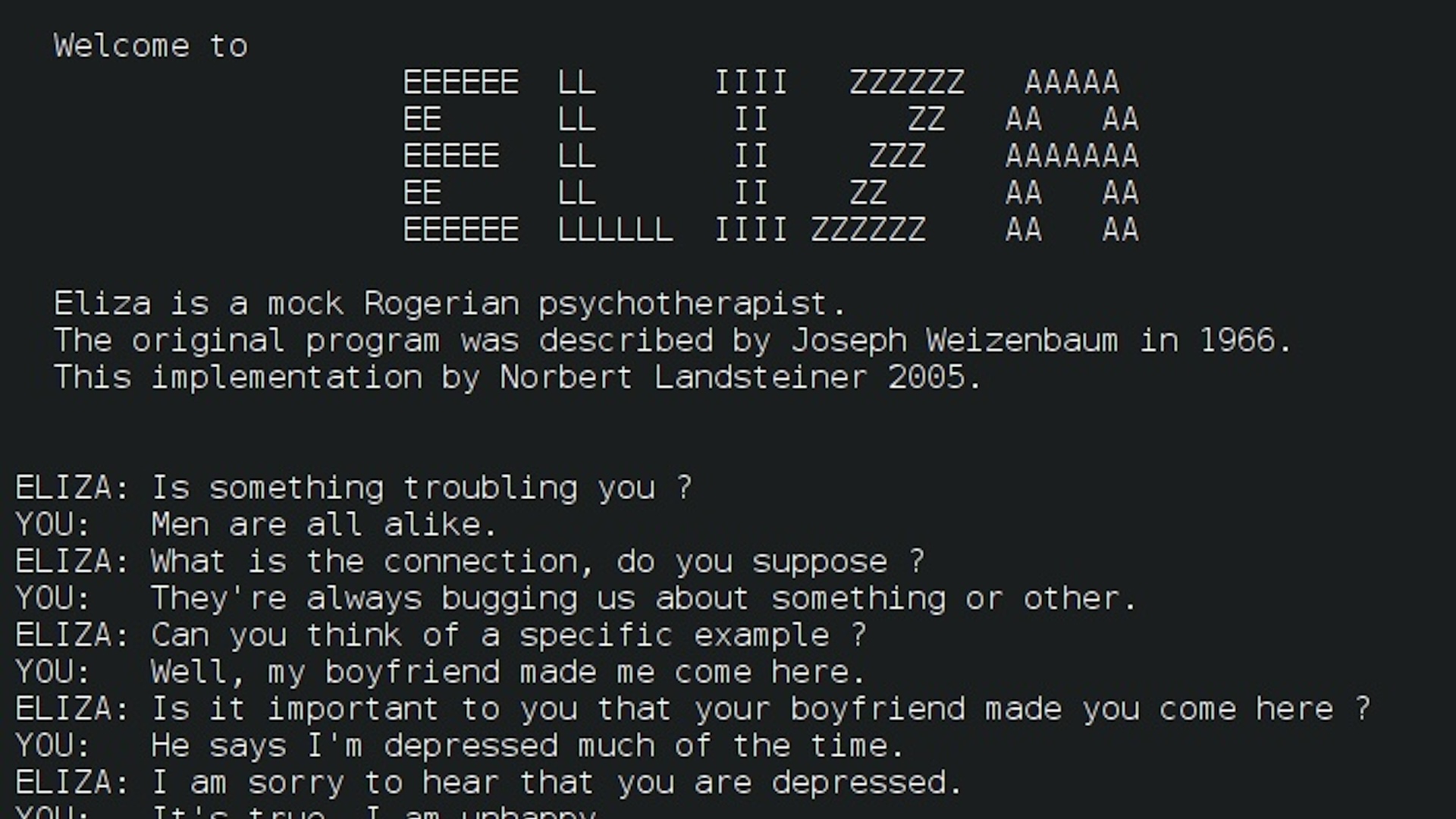

1966 — First AI chatbot

MIT researcher Joseph Weizenbaum unveiled the first-ever AI chatbot, known as ELIZA. The underlying software was rudimentary and regurgitated canned responses based on keywords it detected in the prompt. Nonetheless, when Weizenbaum programmed ELIZA to act as a psychotherapist, people were reportedly amazed at how convincing the conversations were. The work stimulated growing interest in natural language processing, including from the U.S. Defense Advanced Research Projects Agency (DARPA, then known as ARPA), which provided considerable funding for early AI research.
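
To make the keyword-and-canned-response idea concrete, here is a minimal Python sketch. The keywords, replies and the `respond` function are hypothetical, invented for illustration; Weizenbaum’s original program was considerably more elaborate, but the basic trick of scanning the input for a known word and returning a prepared reply is the same.

```python
import re

# Hypothetical keyword -> canned-response table, invented for illustration
RULES = [
    ("mother", "Tell me more about your family."),
    ("father", "Tell me more about your family."),
    ("sad", "I am sorry to hear you are sad."),
    ("because", "Is that the real reason?"),
]
DEFAULT_REPLY = "Please go on."


def respond(prompt: str) -> str:
    """Return the canned reply for the first rule whose keyword appears in the prompt."""
    words = re.findall(r"[a-z']+", prompt.lower())
    for keyword, reply in RULES:
        if keyword in words:
            return reply
    # No keyword found: fall back to a neutral prompt that keeps the user talking
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I argued with my mother today"))
    print(respond("The weather is nice"))
```

The program has no understanding of what it is told; any apparent empathy comes entirely from the hand-written replies.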

1974-1980 — First “AI winter”

It didn’t take long before early enthusiasm for AI began to fade. The 1950s and 1960s had been a fertile time for the field, but in their enthusiasm, leading experts made bold claims about what machines would be capable of doing in the near future. The technology’s failure to live up to those expectations led to growing discontent. A highly critical report on the field by British mathematician James Lighthill led the U.K. government to cut almost all funding for AI research. DARPA also drastically cut back funding around this time, leading to what would become known as the first "AI winter."

1980 — Flurry of “expert systems”

Despite disillusionment with AI in many quarters, research continued, and by the start of the 1980s, the technology was starting to catch the eye of the private sector. In 1980, researchers at Carnegie Mellon University built an AI system called R1 for the Digital Equipment Corporation. The program was an "expert system," an approach to AI that researchers had been experimenting with since the 1960s. These systems used logical rules to reason through large databases of specialist knowledge. The program saved the company millions of dollars a year and kicked off a boom in industry deployments of expert systems.
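
Rule-based reasoning of this kind can be sketched as "forward chaining": the program keeps firing any rule whose conditions are all satisfied until it can conclude nothing new. The minimal Python sketch below assumes that approach; the rules and fact names are hypothetical, only loosely themed on R1’s job of configuring computer orders, and real expert systems held far larger rule bases.

```python
# Hypothetical rules, invented for illustration: (facts that must all hold, fact to conclude)
RULES = [
    ({"order_has_cpu", "order_has_memory"}, "needs_backplane"),
    ({"needs_backplane"}, "needs_cabinet"),
    ({"needs_cabinet", "site_has_little_space"}, "use_compact_cabinet"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly, adding conclusions until no rule produces anything new."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only when every one of its conditions is already known
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"order_has_cpu", "order_has_memory"}, RULES)
    print(sorted(derived))
```

All of the system’s "knowledge" lives in the hand-written rule table, which is why such systems were powerful in narrow domains but costly to extend and maintain.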

1986 — Foundations of deep learning

Most research thus far had focused on "symbolic" AI, which relied on handcrafted logic and knowledge databases. But since the birth of the field, there had also been a rival stream of research into "connectionist" approaches inspired by the brain. This work had continued quietly in the background and finally came to light in the 1980s. Rather than programming systems by hand, these techniques involved coaxing "artificial neural networks" to learn rules by training on data. In theory, this would lead to more flexible AI not constrained by its creators’ preconceptions, but training neural networks proved challenging. In 1986, Geoffrey Hinton, who would later be dubbed one of the "godfathers of deep learning," co-authored a paper with David Rumelhart and Ronald Williams that popularized "backpropagation," the training technique underpinning most AI systems today.
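
Backpropagation is essentially the chain rule from calculus applied layer by layer: the error at the output is passed backward through the network to work out how much each weight contributed to it, and every weight is then nudged to reduce that error. The pure-Python sketch below assumes a tiny network with one hidden layer of sigmoid units, squared-error loss and the XOR problem as training data; the network size, learning rate and function names are illustrative choices, not details taken from the 1986 paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random starting weights for a network with one hidden layer."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, b1, W2, 0.0


def forward(params, x):
    """Return the hidden-layer activations and the single output for input x."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def loss(params, data):
    """Squared error summed over the training examples."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data)


def backprop(params, data):
    """Gradient of the loss for every weight, via the chain rule from output back to input."""
    W1, b1, W2, _ = params
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, t in data:
        h, y = forward(params, x)
        # Error signal at the output unit: dLoss/dy times the sigmoid's slope
        delta_out = (y - t) * y * (1 - y)
        gb2 += delta_out
        for j, hj in enumerate(h):
            gW2[j] += delta_out * hj
            # Pass the error back through weight W2[j] and hidden unit j's sigmoid
            delta_h = delta_out * W2[j] * hj * (1 - hj)
            gb1[j] += delta_h
            for i, xi in enumerate(x):
                gW1[j][i] += delta_h * xi
    return gW1, gb1, gW2, gb2


def step(params, grads, lr):
    """One gradient-descent update: move every weight a little against its gradient."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = grads
    return (
        [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)],
        [b - lr * g for b, g in zip(b1, gb1)],
        [w - lr * g for w, g in zip(W2, gW2)],
        b2 - lr * gb2,
    )


# XOR: a classic task that a network without a hidden layer cannot solve
XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

if __name__ == "__main__":
    params = init_params(2, 3)
    print("loss before training:", round(loss(params, XOR), 4))
    for _ in range(5000):
        params = step(params, backprop(params, XOR), lr=0.5)
    print("loss after training:", round(loss(params, XOR), 4))
```

Nothing about XOR is programmed in by hand here; the network only ever sees examples and adjusts its weights to shrink the error, which is the connectionist idea in miniature.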

1987-1993 — Second AI winter

1997 — Deep Blue’s defeat of Garry Kasparov

Despite repeated booms and busts, AI research made steady progress during the 1990s, largely out of the public eye. That changed in 1997, when Deep Blue, an expert system built by IBM, beat world chess champion Garry Kasparov in a six-game match. Skill at the complex game had long been seen by AI researchers as a key marker of progress. Defeating the world’s best human player was therefore seen as a major milestone and made headlines around the world.

2012 — AlexNet ushers in the deep learning era

Despite a rich body of academic work, neural networks were seen as impractical for real-world applications. To be useful, they needed many layers of neurons, but implementing large networks on conventional computer hardware was prohibitively inefficient. In 2012, Alex Krizhevsky, a doctoral student of Hinton, won the ImageNet image-recognition competition by a large margin with a deep-learning model called AlexNet. The key was to use specialized chips called graphics processing units (GPUs), which could efficiently run much deeper networks. This set the stage for the deep-learning revolution that has powered most AI advances ever since.

2016 — AlphaGo’s defeat of Lee Sedol

While AI had already left chess in its rearview mirror, the much more complex Chinese board game Go had remained a challenge. But in 2016, Google DeepMind’s AlphaGo beat Lee Sedol, one of the world’s greatest Go players, 4-1 in a five-game match. Experts had assumed such a feat was still years away, so the result led to growing excitement around AI’s progress. This was partly due to the general-purpose nature of the algorithms underlying AlphaGo, which relied on an approach called "reinforcement learning." In this technique, AI systems effectively learn through trial and error. DeepMind later extended and improved the approach to create AlphaZero, which taught itself to play chess, shogi and Go.
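
AlphaGo combined reinforcement learning with deep neural networks and tree search, which is far beyond a short example. The basic trial-and-error loop can still be shown in a much simpler, swapped-in setting: a "multi-armed bandit," where an agent repeatedly picks one of several slot machines and learns from the payouts which one is best. The sketch below assumes an epsilon-greedy strategy; the payout probabilities and the `run_bandit` function are hypothetical.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns which arm pays best purely from trial and error."""
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    estimates = [0.0] * n_arms  # running average reward observed for each arm
    counts = [0] * n_arms       # how many times each arm has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            # exploit: pick the arm with the best estimate so far
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # The agent never sees reward_probs directly, only the reward it receives
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: nudge the estimate toward the latest reward
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


if __name__ == "__main__":
    est, cnt = run_bandit([0.2, 0.5, 0.8])
    print("estimated payouts:", [round(e, 2) for e in est])
    print("pulls per arm:", cnt)
```

The occasional random "exploration" move matters: without it, the agent could lock onto the first arm that happens to pay out and never discover a better one.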

2017 — Invention of the transformer architecture

Despite substantial progress in computer vision and game playing, deep learning was making slower progress with language tasks. Then, in 2017, Google researchers published a novel neural network architecture called the "transformer," which could ingest vast amounts of data and make connections between distant data points. This proved particularly useful for the complex task of language modeling and made it possible to create AIs that could tackle a variety of tasks at once, such as translation, text generation and document summarization. Most of today’s leading AI models rely on this architecture, including image generators like OpenAI’s DALL-E, as well as Google DeepMind’s revolutionary protein-folding model AlphaFold 2.
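
The 2017 paper, "Attention Is All You Need," built the transformer around a mechanism called attention, which is what lets it link distant data points. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d)·V. It leaves out the learned projections, multiple heads, positional information and feed-forward layers of a real transformer, and the toy vectors are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (one vector per token)."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key, however far apart the tokens are
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    # Toy four-token sequence: only the last token's key matches the query well
    keys = [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0], [4.0, 0.0]]
    values = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    print(attention([[4.0, 0.0]], keys, values))
```

Because every query is compared with every key, the output draws almost entirely on the last token even though it sits furthest from the start of the sequence; position alone does not limit what can be connected.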

2022 – Launch of ChatGPT

On Nov. 30, 2022, OpenAI released a chatbot powered by its GPT-3.5 large language model. Known as "ChatGPT," the tool became a worldwide sensation, attracting more than a million users in less than a week and 100 million within about two months. It was the first time members of the public could interact with the latest AI models, and most were blown away. The service is credited with starting an AI boom that has seen billions of dollars invested in the field and spawned numerous copycats from big tech companies and startups. It has also led to growing unease about the pace of AI progress, prompting an open letter from prominent tech leaders calling for a six-month pause in training AI systems more powerful than GPT-4 to allow time to assess the technology’s implications.